Advancing Azure service quality with artificial intelligence: AIOps

“In the era of big data, insights collected from cloud services running at the scale of Azure quickly exceed the attention span of humans. It’s critical to identify the right steps to maintain the highest possible quality of service based on the large volume of data collected. In applying this to Azure, we envision infusing AI into our cloud platform and DevOps process, becoming AIOps, to enable the Azure platform to become more self-adaptive, resilient, and efficient. AIOps can also help our engineers take the right actions more effectively and in a timely manner to continue improving service quality and delighting our customers and partners. This post continues our Advancing Reliability series highlighting initiatives underway to keep improving the reliability of the Azure platform. The post that follows was written by Jian Zhang, our Program Manager overseeing these efforts, as she shares our vision for AIOps and highlights areas of this AI infusion that are already a reality as part of our end-to-end cloud service management.” - Mark Russinovich, CTO, Azure

This post includes contributions from Principal Data Scientist Manager Yingnong Dang and Partner Group Software Engineering Manager Murali Chintalapati.

As Mark mentioned when he launched this Advancing Reliability blog series, building and operating a global cloud infrastructure at the scale of Azure is a complex task with hundreds of ever-evolving service components, spanning more than 160 datacenters across more than 60 regions. To rise to this challenge, we’ve created an AIOps team to collaborate broadly across Azure engineering teams and partnered with Microsoft Research to develop AI solutions that make cloud service management more efficient and more reliable than ever before. We’re going to share our vision on the importance of infusing AI into our cloud platform and DevOps process. Gartner referred to something similar as AIOps (pronounced “AI Ops”), and this has become the common term that we use internally, albeit with a larger scope. Today’s post is just the start, as we intend to provide regular updates sharing our adoption stories of using AI technologies to support how we build and operate Azure at scale.

Why AIOps?

There are two unique characteristics of cloud services:

- The ever-increasing scale and complexity of the cloud platform and systems

- The ever-changing needs of customers, partners, and their workloads

To build and operate reliable cloud services during this constant state of flux, and to do so as efficiently and effectively as possible, our cloud engineers (including thousands of Azure developers, operations engineers, customer support engineers, and program managers) rely heavily on data to make decisions and take actions. Furthermore, many of these decisions and actions need to be executed automatically as an integral part of our cloud services or our DevOps processes. Streamlining the path from data to decisions to actions involves identifying patterns in the data, reasoning, and making predictions based on historical data, then recommending or even taking actions based on the insights derived from all that underlying data.

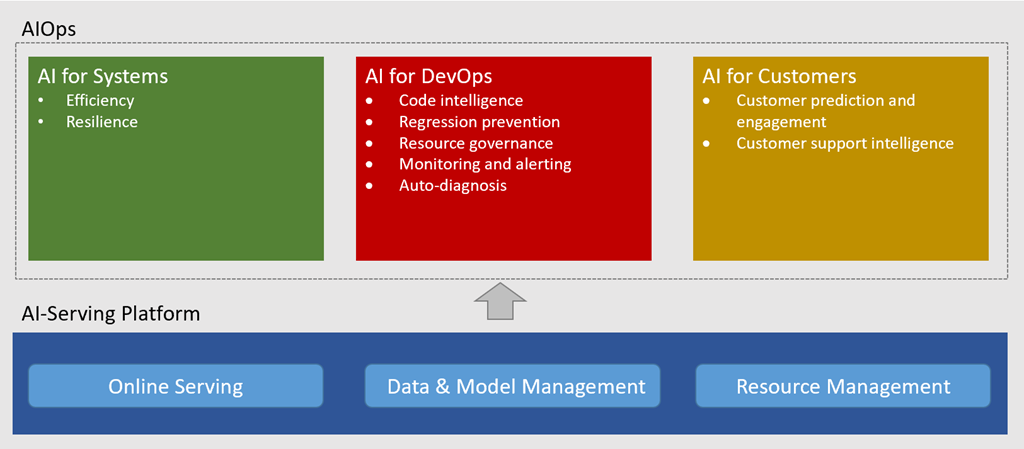

Figure 1. Infusing AI into cloud platform and DevOps.

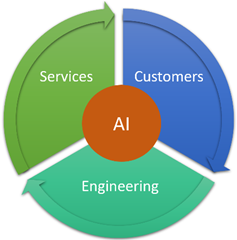

The AIOps vision

AIOps has started to transform the cloud business by improving service quality and customer experience at scale while boosting engineers’ productivity with intelligent tools, driving continuous cost optimization, and ultimately improving the reliability, performance, and efficiency of the platform itself. When we invest in advancing AIOps and related technologies, we see this ultimately provide value in several ways:

- Higher service quality and efficiency: Cloud services will have built-in capabilities of self-monitoring, self-adapting, and self-healing, all with minimal human intervention. Platform-level automation powered by such intelligence will improve service quality (including reliability, availability, and performance) and service efficiency to deliver the best possible customer experience.

- Higher DevOps productivity: With the automation power of AI and ML, engineers are released from the toil of investigating repeated issues and manually operating and supporting their services, and can instead focus on solving new problems, building new functionality, and work that more directly impacts the customer and partner experience. In practice, AIOps empowers developers and engineers with insights so they no longer need to comb through raw data, thereby improving engineer productivity.

- Higher customer satisfaction: AIOps solutions play a critical role in enabling customers to use, maintain, and troubleshoot their workloads on top of our cloud services as easily as possible. We endeavor to use AIOps to understand customer needs better, in some cases to identify potential pain points and proactively reach out as needed. Data-driven insights into customer workload behavior could flag when Microsoft or the customer needs to take action to prevent issues or apply workarounds. Ultimately, the goal is to improve satisfaction by quickly identifying, mitigating, and fixing issues.

My colleagues Marcus Fontoura, Murali Chintalapati, and Yingnong Dang shared Microsoft’s vision, investments, and sample achievements in this space during the keynote AI for Cloud–Towards Intelligent Cloud Platforms and AIOps at the AAAI-20 Workshop on Cloud Intelligence, held in conjunction with the 34th AAAI Conference on Artificial Intelligence. The vision was created by a Microsoft AIOps committee spanning cloud service product groups including Azure, Microsoft 365, Bing, and LinkedIn, as well as Microsoft Research (MSR). In the keynote, we shared a few key areas in which AIOps can be transformative for building and operating cloud systems, as shown in the chart below.

Figure 2. AI for Cloud: AIOps and AI-Serving Platform.

AIOps

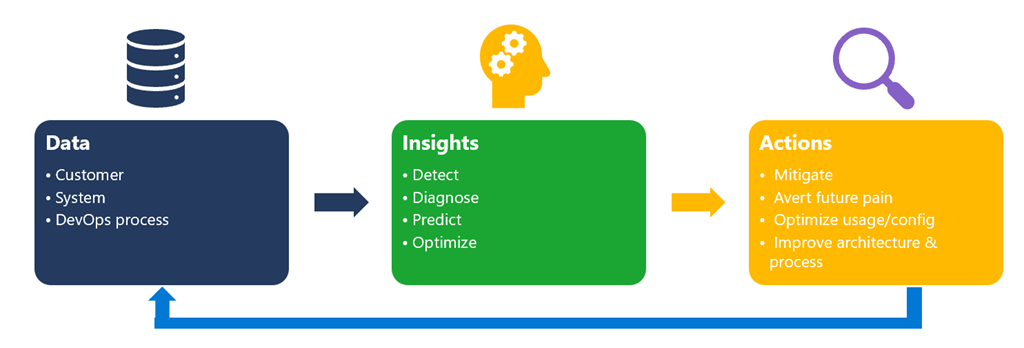

Moving beyond our vision, we want to start by briefly summarizing our general methodology for building AIOps solutions. A solution in this space always starts with data (measurements of systems, customers, and processes), because the key to any AIOps solution is distilling insights about system behavior, customer behavior, and DevOps artifacts and processes. The insights could include identifying a problem that is happening now (detect), why it’s happening (diagnose), what will happen in the future (predict), and how to improve (optimize, adjust, and mitigate). Such insights should always be associated with business metrics (customer satisfaction, system quality, and DevOps productivity) and drive actions in line with the prioritization determined by business impact. The actions can also be fed back into the system and process. This feedback could be fully automated (infused into the system) or involve humans in the loop (infused into the DevOps process). This overall methodology guided us to build AIOps solutions in three pillars: AI for systems, AI for DevOps, and AI for customers.

Figure 3. AIOps methodologies: Data, insights, and actions.

AI for systems

Today, we’re introducing several AIOps solutions that are already in use and supporting Azure behind the scenes. The goal is to automate system management to reduce human intervention. As a result, this helps to reduce operational costs, improve system efficiency, and increase customer satisfaction. These solutions have already contributed significantly to Azure platform availability improvements, especially for Azure IaaS virtual machines (VMs). AIOps solutions contributed in several ways, including protecting customers’ workloads from host failures through hardware failure prediction and proactive actions like live migration and Project Tardigrade, and pre-provisioning VMs to shorten VM creation time.

Of course, engineering improvements and ongoing system innovation also play vital roles in the continuous improvement of platform reliability.
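As a rough illustration of how a hardware failure prediction can drive a proactive action such as live migration, the following Python sketch takes per-node failure probabilities (assumed to come from some prediction model, not shown) and plans migrations off nodes whose predicted risk crosses a threshold. The `Node` type, the `plan_live_migrations` function, and the 0.8 threshold are hypothetical illustrations, not Azure’s actual logic.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class Node:
    name: str
    # Probability of failure in the next window, from a hypothetical prediction model.
    failure_probability: float
    vms: List[str] = field(default_factory=list)


def plan_live_migrations(nodes: List[Node], threshold: float = 0.8) -> Dict[str, str]:
    """Map each VM on a predicted-to-fail node to a healthy target node.

    Hypothetical sketch: nodes at or above `threshold` are treated as
    predicted-to-fail, and their VMs are spread round-robin over the rest.
    A real scheduler would also check capacity and placement constraints.
    """
    healthy = [n for n in nodes if n.failure_probability < threshold]
    if not healthy:
        return {}  # no healthy target available, so plan nothing
    plan: Dict[str, str] = {}
    slot = 0
    for node in nodes:
        if node.failure_probability >= threshold:
            for vm in node.vms:
                plan[vm] = healthy[slot % len(healthy)].name
                slot += 1
    return plan


nodes = [Node("n1", 0.95, ["vm-a", "vm-b"]), Node("n2", 0.10), Node("n3", 0.20, ["vm-c"])]
print(plan_live_migrations(nodes))  # {'vm-a': 'n2', 'vm-b': 'n3'}
```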

- Hardware Failure Prediction protects cloud customers from interruptions caused by hardware failures. We shared our story of Improving Azure Virtual Machine resiliency with predictive ML and live migration back in 2018. Microsoft Research and Azure have built a disk failure prediction solution for Azure Compute that triggers the live migration of customer VMs from predicted-to-fail nodes to healthy nodes. We also expanded the prediction to other types of hardware issues, including memory and networking router failures. This enables us to perform predictive maintenance for better availability.

- Pre-Provisioning Service in Azure brings VM deployment reliability and latency benefits by creating pre-provisioned VMs: VMs that are pre-created and partially configured ahead of customer requests. As described in our IJCAI 2020 publication and in the AAAI-20 keynote mentioned above, the Pre-Provisioning Service leverages a prediction engine to predict which VM configurations, and how many VMs per configuration, to pre-create. This prediction engine applies dynamic models that are trained on historical and current deployment behaviors to predict future deployments. Pre-Provisioning Service uses these predictions to create and manage a VM pool per VM configuration, resizing each pool by destroying or adding VMs as prescribed by the latest predictions. Once a VM matching the customer’s request is identified, the VM is assigned from the pre-created pool to the customer’s subscription.
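The pool-management loop described above can be sketched in Python as follows. The real prediction engine’s dynamic models aren’t detailed in this post, so `predict_demand` swaps in a simple exponentially weighted moving average (EWMA) of past deployment counts per configuration; the configuration names, `alpha`, and the class and method names are all hypothetical.

```python
import math
from typing import Dict, List, Optional


def predict_demand(history: Dict[str, List[int]], alpha: float = 0.5) -> Dict[str, int]:
    """Predict next-period deployments per VM configuration (EWMA stand-in, rounded up)."""
    predictions: Dict[str, int] = {}
    for config, counts in history.items():
        if not counts:
            predictions[config] = 0
            continue
        smoothed = float(counts[0])
        for count in counts[1:]:
            smoothed = alpha * count + (1 - alpha) * smoothed
        predictions[config] = math.ceil(smoothed)
    return predictions


class PreProvisionedPools:
    """Pools of pre-created VMs, one pool per VM configuration (hypothetical sketch)."""

    def __init__(self) -> None:
        self.pools: Dict[str, List[str]] = {}
        self.assigned: Dict[str, str] = {}  # VM id -> customer subscription
        self._next_id = 0

    def _create_vm(self, config: str) -> str:
        self._next_id += 1
        return f"{config}-vm{self._next_id}"

    def resize(self, predicted: Dict[str, int]) -> None:
        """Add or destroy pre-created VMs so each pool matches the latest prediction."""
        for config in set(self.pools) | set(predicted):
            pool = self.pools.setdefault(config, [])
            target = predicted.get(config, 0)
            while len(pool) < target:
                pool.append(self._create_vm(config))
            while len(pool) > target:
                pool.pop()  # destroy a surplus pre-created VM

    def assign(self, config: str, subscription: str) -> Optional[str]:
        """Hand a matching pre-created VM to the customer, or None if the pool is empty."""
        pool = self.pools.get(config)
        if not pool:
            return None
        vm = pool.pop(0)
        self.assigned[vm] = subscription
        return vm


pools = PreProvisionedPools()
pools.resize(predict_demand({"small": [4, 6, 8], "large": [0, 0, 1]}))
print({config: len(vms) for config, vms in sorted(pools.pools.items())})  # {'large': 1, 'small': 7}
vm = pools.assign("small", "subscription-1")
print(vm is not None, len(pools.pools["small"]))  # True 6
```

In this sketch, destroying surplus VMs when predictions drop keeps idle capacity bounded, while `assign` returning `None` stands for falling back to ordinary on-demand VM creation.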

AI for DevOps

AI can boost engineering productivity and help ship high-quality services quickly. Below are a few examples of AI for DevOps solutions.

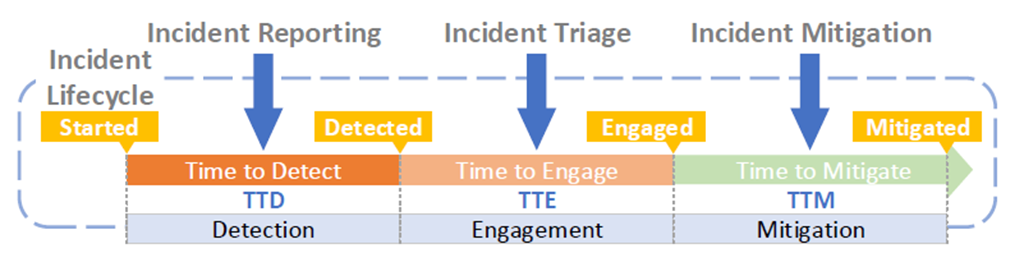

- Incident management is a crucial aspect of cloud service management: identifying and mitigating rare but inevitable platform outages. A typical incident management procedure consists of multiple stages, including detection, engagement, and mitigation. Time spent in each stage is used as a Key Performance Indicator (KPI) to measure and drive rapid issue resolution. KPIs include time to detect (TTD), time to engage (TTE), and time to mitigate (TTM).

Figure 4. Incident management procedures.

As shared in AIOps Innovations in Incident Management for Cloud Services at the AAAI-20 conference, we’ve developed AI-based solutions that enable engineers not only to detect issues early but also to identify the right team(s) to engage, and therefore to mitigate as quickly as possible. Tight integration into the platform enables end-to-end touchless mitigation for some scenarios, which considerably reduces customer impact and therefore improves the overall customer experience.
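The stage-based KPIs described above reduce to simple timestamp arithmetic. The Python sketch below assumes each KPI is the time spent in one stage (detection measured from the start of customer impact, engagement from detection, mitigation from engagement); some teams instead measure TTM from the start of impact, so treat the exact stage boundaries as an assumption. The `Incident` fields and the timestamps are illustrative only.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import Dict


@dataclass
class Incident:
    impact_start: datetime  # when customers started to be affected
    detected: datetime      # when monitoring (or a report) flagged the issue
    engaged: datetime       # when the right team was engaged
    mitigated: datetime     # when customer impact was mitigated


def incident_kpis(incident: Incident) -> Dict[str, timedelta]:
    """Per-stage durations, assuming each stage starts when the previous one ends."""
    return {
        "TTD": incident.detected - incident.impact_start,
        "TTE": incident.engaged - incident.detected,
        "TTM": incident.mitigated - incident.engaged,
    }


incident = Incident(
    impact_start=datetime(2020, 5, 1, 10, 0),
    detected=datetime(2020, 5, 1, 10, 5),
    engaged=datetime(2020, 5, 1, 10, 12),
    mitigated=datetime(2020, 5, 1, 10, 40),
)
for name, duration in incident_kpis(incident).items():
    print(name, duration)
# TTD 0:05:00
# TTE 0:07:00
# TTM 0:28:00
```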

- Anomaly Detection provides an end-to-end monitoring and anomaly detection solution for Azure IaaS. The detection solution targets a broad spectrum of anomaly patterns that includes not only generic patterns defined by thresholds, but also patterns that are typically more difficult to detect, such as leaking patterns (for example, memory leaks) and emerging patterns (not a spike, but a value that increases with fluctuations over a longer term). Insights generated by the anomaly detection solutions are injected into the existing Azure DevOps platform and processes, for example, alerting through the telemetry platform and the incident management platform and, in some cases, triggering automated communications to impacted customers. This helps us detect issues as early as possible.
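To see why leaking and emerging patterns need more than a threshold, the Python sketch below contrasts a fixed-limit check with a simple least-squares trend test on the same series. It is a minimal illustration, not the Azure detector: the slope test, `min_slope`, and the sample readings are assumptions chosen for clarity, and a production detector would also have to handle seasonality, noise, and restarts.

```python
from typing import Sequence


def trend_slope(values: Sequence[float]) -> float:
    """Ordinary least-squares slope of `values` against sample index (units per sample)."""
    n = len(values)
    if n < 2:
        raise ValueError("need at least two samples")
    mean_x = (n - 1) / 2
    mean_y = sum(values) / n
    num = sum((x - mean_x) * (y - mean_y) for x, y in enumerate(values))
    den = sum((x - mean_x) ** 2 for x in range(n))
    return num / den


def threshold_alert(values: Sequence[float], limit: float) -> bool:
    """Generic pattern: fire when any sample exceeds a fixed limit."""
    return any(v > limit for v in values)


def leak_alert(values: Sequence[float], min_slope: float) -> bool:
    """Leaking/emerging pattern: fire on a sustained upward trend, even far below the limit."""
    return len(values) >= 2 and trend_slope(values) > min_slope


# Illustrative memory readings (MB): rising with fluctuations, never near the limit.
leaky = [100, 104, 101, 107, 105, 111, 109, 115]
flat = [100, 103, 99, 102, 100, 101, 99, 102]
print(threshold_alert(leaky, limit=200), leak_alert(leaky, min_slope=1.0))  # False True
print(leak_alert(flat, min_slope=1.0))  # False
```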

As an example that has already made its way into a customer-facing feature, Dynamic Threshold is an ML-based anomaly detection model. It’s a feature of Azure Monitor, available through the Azure portal or through the ARM API. Dynamic Threshold allows users to tune their detection sensitivity, including specifying how many violation points will trigger a monitoring alert.
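Azure Monitor’s actual Dynamic Threshold model isn’t described in this post, so the following Python code is only a conceptual sketch of the two knobs mentioned above: a band learned from history whose width depends on a sensitivity setting, and an alert that fires only when at least `min_violations` of the last `lookback` points fall outside that band. The mean ± k·σ band, the `SENSITIVITY_K` values, and all function names are hypothetical stand-ins for the learned model.

```python
import statistics
from typing import Sequence, Tuple

# Hypothetical mapping: higher sensitivity -> narrower band -> more alerts.
SENSITIVITY_K = {"high": 2.0, "medium": 3.0, "low": 4.0}


def learned_band(history: Sequence[float], sensitivity: str = "medium") -> Tuple[float, float]:
    """Band of mean +/- k population standard deviations learned from historical values."""
    mu = statistics.fmean(history)
    sigma = statistics.pstdev(history)
    k = SENSITIVITY_K[sensitivity]
    return mu - k * sigma, mu + k * sigma


def should_alert(
    history: Sequence[float],
    recent: Sequence[float],
    sensitivity: str = "medium",
    min_violations: int = 3,
    lookback: int = 4,
) -> bool:
    """Fire only if at least `min_violations` of the last `lookback` points leave the band."""
    low, high = learned_band(history, sensitivity)
    window = list(recent)[-lookback:]
    violations = sum(1 for v in window if v < low or v > high)
    return violations >= min_violations


history = [50, 52, 48, 51, 49, 50, 52, 48]  # illustrative values; medium band is 45.5..54.5
print(should_alert(history, [51, 60, 61, 62]))  # True: 3 of 4 points violate
print(should_alert(history, [51, 60, 50, 62]))  # False: only 2 of 4 points violate
print(should_alert(history, [51, 60, 50, 62], min_violations=2))  # True
```

Requiring several violations within the lookback window trades a little detection delay for fewer alerts on one-off spikes.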

- Safe Deployment serves as an intelligent global “watchdog” for the safe rollout of Azure infrastructure components. We built a system, code name Gandalf, that analyzes temporal and spatial correlation to capture latent issues that surface hours or even days after the rollout. This helps identify suspicious rollouts among the sea of concurrent rollouts that is typical for Azure, and helps stop the issue from propagating, thereby preventing impact to additional customers. We provided details on our safe deployment practices in this earlier blog post and went into more detail about how Gandalf works in our USENIX NSDI 2020 paper and slide deck.
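Gandalf’s actual models are described in the NSDI 2020 paper; the Python sketch below is a deliberately simplified stand-in that only shows what combining temporal and spatial correlation can mean for a single rollout. It counts failures on each updated node within a window after that node received the rollout (temporal), and compares that per-node rate with the rate on nodes that did not receive it (spatial). The function name, window length, and ratio thresholds are hypothetical.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class FailureEvent:
    node: str
    time: float  # hours since an arbitrary reference point


def suspicious_rollout(
    deployed_at: Dict[str, float],
    all_nodes: List[str],
    failures: List[FailureEvent],
    window_hours: float = 48.0,
    min_ratio: float = 3.0,
    min_failures: int = 3,
) -> bool:
    """Flag a rollout whose nodes fail disproportionately soon after receiving it."""
    treated = set(deployed_at)
    control = [n for n in all_nodes if n not in treated]
    control_set = set(control)
    start = min(deployed_at.values())
    # Temporal correlation: failures within the window after each node's own deployment.
    treated_failures = sum(
        1
        for e in failures
        if e.node in treated
        and deployed_at[e.node] <= e.time <= deployed_at[e.node] + window_hours
    )
    # Spatial baseline: failures on nodes without the rollout over roughly the same period.
    control_failures = sum(
        1
        for e in failures
        if e.node in control_set and start <= e.time <= start + window_hours
    )
    if treated_failures < min_failures:
        return False
    # Compare per-node rates, cross-multiplied to avoid dividing by zero:
    # treated_failures / len(treated) >= min_ratio * control_failures / len(control)
    return treated_failures * len(control) >= min_ratio * control_failures * len(treated)


nodes = [f"n{i}" for i in range(10)]
deployed = {f"n{i}": float(i) for i in range(5)}  # n0..n4 received the rollout at hours 0..4
bad = [FailureEvent(n, t) for n, t in [("n0", 10), ("n1", 20), ("n2", 30), ("n3", 40), ("n6", 5)]]
noisy = [FailureEvent(n, t) for n, t in [("n0", 10), ("n1", 20), ("n2", 30),
                                         ("n5", 5), ("n6", 6), ("n7", 7), ("n8", 8)]]
print(suspicious_rollout(deployed, nodes, bad))    # True: 4 of 5 updated nodes vs 1 of 5 others
print(suspicious_rollout(deployed, nodes, noisy))  # False: failures are as common elsewhere
```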

AI for customers

To improve the Azure customer experience, we’ve been developing AI solutions to power the full lifecycle of customer management. For example, we have developed a decision support system that guides customers toward the best selection of support resources by leveraging the customer’s service selection and their verbatim summary of the problem experienced. This helps shorten the time it takes to get customers and partners the right guidance and support that they need.

AI-serving platform

To achieve greater efficiencies in managing a global-scale cloud, we’ve been investing in building systems that support using AI to optimize cloud resource usage and, in turn, the customer experience. One example is Resource Central (RC), an AI-serving platform for Azure that we described in Communications of the ACM. It collects telemetry from Azure containers and servers, learns from their prior behaviors, and, when requested, produces predictions of their future behaviors. We’re already using RC to accurately predict many characteristics of Azure Compute workloads, including resource procurement and allocation, all of which helps to improve system performance and efficiency.
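Resource Central’s models and interfaces are documented in the Communications of the ACM article rather than here. As a hypothetical illustration of the learn-from-prior-behavior, predict-on-request pattern described above, the Python sketch below tallies historical P95 CPU utilization buckets per subscription and, when asked, returns the most likely bucket along with the share of past VMs that fell into it. The bucket boundaries, `min_history`, and the frequency-count "model" are assumptions; a real system would use trained ML models and richer features.

```python
from collections import Counter, defaultdict
from typing import Dict, List, Optional, Tuple

# Hypothetical P95 CPU utilization buckets, in percent: [low, high).
BUCKETS = [(0, 25), (25, 50), (50, 75), (75, 101)]


def bucket_of(cpu_p95: float) -> int:
    for index, (low, high) in enumerate(BUCKETS):
        if low <= cpu_p95 < high:
            return index
    raise ValueError(f"utilization out of range: {cpu_p95}")


class UtilizationPredictor:
    def __init__(self, min_history: int = 3) -> None:
        self.model: Dict[str, Counter] = defaultdict(Counter)
        self.min_history = min_history

    def learn(self, telemetry: List[Tuple[str, float]]) -> None:
        """Offline step: tally each past VM's P95 CPU bucket under its subscription."""
        for subscription, cpu_p95 in telemetry:
            self.model[subscription][bucket_of(cpu_p95)] += 1

    def predict(self, subscription: str) -> Optional[Tuple[int, float]]:
        """On request: most common bucket and its share, or None if history is too thin."""
        counts = self.model.get(subscription)
        if not counts:
            return None
        total = sum(counts.values())
        if total < self.min_history:
            return None
        bucket, hits = counts.most_common(1)[0]
        return bucket, hits / total


predictor = UtilizationPredictor()
predictor.learn([("sub-a", 10), ("sub-a", 20), ("sub-a", 60), ("sub-a", 5), ("sub-b", 90)])
print(predictor.predict("sub-a"))  # (0, 0.75): most past sub-a VMs stayed below 25% CPU
print(predictor.predict("sub-b"))  # None: only one past VM
```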

Looking towards the future

We have shared our vision of infusing AI into the Azure platform and our DevOps processes, and highlighted several solutions that are already in use to improve service quality across a range of areas. Look for us to share more details of our internal AI and ML solutions for even more intelligent cloud management in the future. We’re confident that these are the right investments to improve our effectiveness and efficiency as a cloud provider, including improving the reliability and performance of the Azure platform itself.