The next frontier in machine learning: driving responsible practices

Organizations around the world are gearing up for a future powered by artificial intelligence (AI). From supply chain systems to genomics, and from predictive maintenance to autonomous systems, every aspect of this transformation makes use of AI. This raises an important question: How do we make sure that AI systems and models behave ethically and deliver results that can be explained and backed with data?

This week at Spark + AI Summit, we talked about Microsoft’s commitment to the advancement of AI and machine learning driven by principles that put people first.

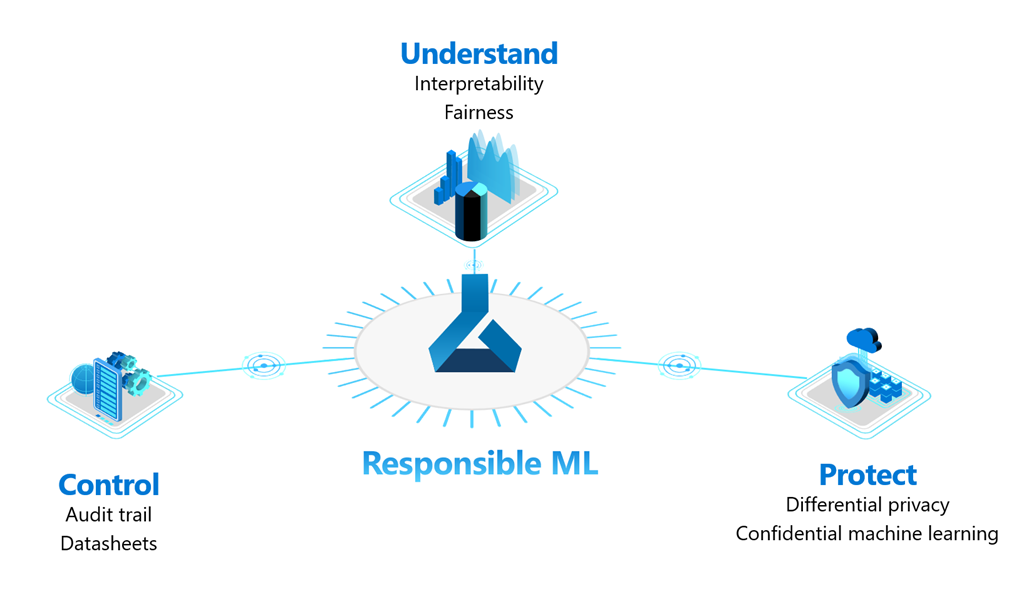

Understand, protect, and control your machine learning solution

Over the past several years, machine learning has moved out of research labs and into the mainstream, growing from a niche discipline for data scientists with PhDs into one where all developers are empowered to participate. With power comes responsibility. As the audience for machine learning expands, practitioners are increasingly asked to build AI systems that are easy to explain and that comply with privacy regulations.

To navigate these hurdles, we at Microsoft, in collaboration with the Aether Committee and its working groups, have made available our responsible machine learning (responsible ML) innovations, which help developers understand, protect, and control their models throughout the machine learning lifecycle. These capabilities can be accessed in any Python-based environment and have been open sourced on GitHub to invite community contributions.

Understanding model behavior includes being able to explain the model and remove any unfairness within it. The interpretability and fairness assessment capabilities, powered by the InterpretML and Fairlearn toolkits respectively, enable this understanding. These toolkits help determine model behavior, mitigate unfairness, and improve transparency within the models.
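To make the fairness assessment idea concrete, here is a minimal sketch of one common group-fairness metric, the demographic parity difference: the gap between the highest and lowest rate of positive predictions across groups. It is written in plain Python for illustration and is not Fairlearn's implementation. The helper names and the sample data below are assumptions made for this example. Fairlearn provides a comparable metric in its `fairlearn.metrics` module.

```python
from collections import defaultdict


def selection_rates(predictions, groups):
    """Return the fraction of positive (1) predictions for each group."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for pred, group in zip(predictions, groups):
        totals[group] += 1
        positives[group] += int(pred == 1)
    return {group: positives[group] / totals[group] for group in totals}


def demographic_parity_difference(predictions, groups):
    """Gap between the highest and lowest per-group selection rate.

    0.0 means every group receives positive predictions at the same rate.
    """
    rates = selection_rates(predictions, groups)
    return max(rates.values()) - min(rates.values())


# Hypothetical model outputs (1 = approved) and a sensitive attribute.
predictions = [1, 0, 1, 1, 0, 0, 1, 0]
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]

rates = selection_rates(predictions, groups)
gap = demographic_parity_difference(predictions, groups)
print(rates)  # per-group selection rates
print(gap)    # a larger gap suggests the groups are treated less evenly
```

A gap close to zero indicates that the model selects members of each group at similar rates. Which metric is appropriate depends on the application, so this is only one of several possible checks.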

Protecting the data used to create models, by ensuring data privacy and confidentiality, is another important aspect of responsible ML. We’ve released a differential privacy toolkit, developed in collaboration with researchers at Harvard’s Institute for Quantitative Social Science and School of Engineering. The toolkit applies statistical noise to the data while maintaining an information budget. This protects an individual’s privacy while allowing the machine learning process to run unimpeded.
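The idea of adding statistical noise while tracking a budget can be sketched with the Laplace mechanism, a standard differential privacy technique. The example below is a plain-Python illustration under stated assumptions, not the toolkit's API: the `PrivateCounter` class, its parameters, and the sample data are hypothetical. Each query spends part of a total privacy budget (epsilon), and further queries are refused once the budget is used up.

```python
import random


class PrivateCounter:
    """Answers counting queries with Laplace noise under a total epsilon budget.

    Hypothetical helper for illustration; not part of any toolkit.
    """

    def __init__(self, data, total_epsilon, seed=None):
        self._data = list(data)
        self.remaining_epsilon = total_epsilon
        self._rng = random.Random(seed)

    def _laplace(self, scale):
        # The difference of two independent Exp(1) draws is Laplace(0, 1).
        return scale * (self._rng.expovariate(1.0) - self._rng.expovariate(1.0))

    def noisy_count(self, predicate, epsilon):
        """Return a noisy count of rows matching predicate, spending epsilon."""
        if epsilon <= 0:
            raise ValueError("epsilon must be positive")
        if epsilon > self.remaining_epsilon:
            raise RuntimeError("privacy budget exhausted")
        self.remaining_epsilon -= epsilon
        true_count = sum(1 for row in self._data if predicate(row))
        # A count changes by at most 1 when one person is added or removed,
        # so its sensitivity is 1 and the noise scale is 1 / epsilon.
        return true_count + self._laplace(1.0 / epsilon)


ages = [23, 35, 41, 52, 29, 64, 38, 47]  # hypothetical records
counter = PrivateCounter(ages, total_epsilon=1.0, seed=0)

first = counter.noisy_count(lambda age: age >= 40, epsilon=0.5)
second = counter.noisy_count(lambda age: age < 30, epsilon=0.5)
print(first, second, counter.remaining_epsilon)

try:
    counter.noisy_count(lambda age: age > 60, epsilon=0.1)
except RuntimeError as err:
    print(err)  # the budget has been spent, so the query is refused
```

A smaller epsilon per query means more noise and stronger privacy, but less accurate answers. The budget forces that trade-off to be made explicitly across all queries on the same data.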

Controlling models and their metadata with features such as audit trails and datasheets brings the responsible ML capabilities full circle. In Azure Machine Learning, auditing capabilities track all actions throughout the lifecycle of a machine learning model. For compliance reasons, organizations can use this audit trail to trace how and why a model’s predictions showed certain behavior.

Many customers, such as EY and Scandinavian Airlines, use these capabilities today to build ethical, compliant, transparent, and trustworthy solutions while improving their customer experiences.

Our continued commitment to open source

In addition to open sourcing our responsible ML toolkits, there are two more projects we’re sharing with the community. The first is Hyperspace, a new extensible indexing subsystem for Apache Spark. It is designed to work as a simple add-on and comes with Scala, Python, and .NET support. Hyperspace is the same technology that powers the indexing engine inside Azure Synapse Analytics. In benchmarking against common workloads like TPC-H and TPC-DS, Hyperspace has provided gains of 2x and 1.8x, respectively. Hyperspace is now on GitHub. We look forward to seeing new ideas and contributions on Hyperspace to make Apache Spark’s performance even better.

The second is a preview of ONNX Runtime’s support for accelerated training. The latest release of training acceleration incorporates innovations from the AI at Scale initiative, such as ZeRO optimization and Project Parasail, which improve memory utilization and parallelism on GPUs.

We deeply value our partnership with the open source community and look forward to collaborating to establish responsible ML practices in the industry.

Additional resources