Getting Started with Microsoft Semantic Kernel

Introduction

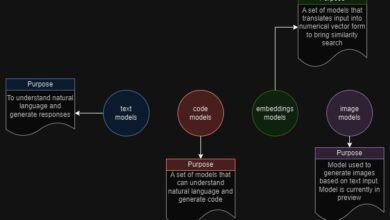

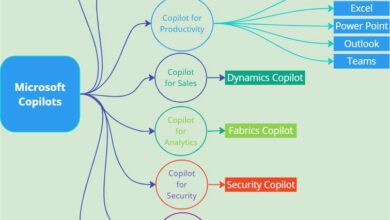

Microsoft Semantic Kernel is an open-source software development kit (SDK) that lets you incorporate Large Language Models (LLMs) into your applications using programming languages such as C# and Python. It lets you call OpenAI, Azure OpenAI, and Hugging Face language models from your app without training or fine-tuning a model yourself. With Semantic Kernel, you can build AI-powered apps that combine conventional code with natural-language prompts.

How Does Microsoft Semantic Kernel Work?

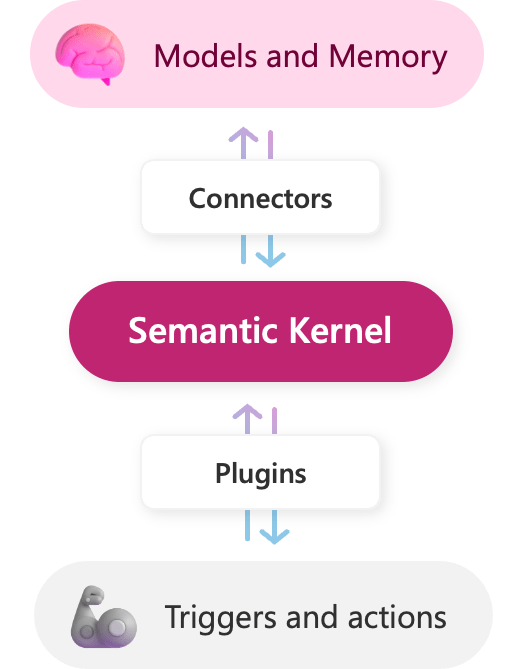

Microsoft Semantic Kernel consists of two main modules:

- Model: Semantic Kernel serves as an AI orchestration layer, connecting Large Language Models (LLMs) and Memory through a series of connectors and plugins.

- Memory: Memory is a crucial component of any context-based prompt system. With Semantic Kernel, you can use specialized plugins to store and retrieve contextual information in vector databases such as Redis, Qdrant, SQLite, and Pinecone. Additional memory connectors for vector databases are listed on Microsoft Learn.

As a developer, you have the flexibility to use these modules individually or in combination.

For example, you can design a plugin with pre-configured prompts using the Semantic Kernel SDK that leverages the power of both OpenAI and Azure OpenAI, while storing contexts in a Qdrant database.
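To make the Memory module more concrete, the following C# sketch shows the idea behind vector-based retrieval: each text is stored with an embedding vector, and a query returns the stored text whose vector is most similar, here measured by cosine similarity. It is only an illustration under stated assumptions: TinyVectorMemory, its methods, and the three-dimensional vectors are hypothetical and are not part of the Semantic Kernel SDK or of any of the databases listed above. Real systems obtain embeddings from a model and typically use an index rather than a linear scan.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Hypothetical in-memory stand-in for a vector store; not a Semantic Kernel type.
public static class TinyVectorMemory
{
    // Cosine similarity: dot(a, b) / (|a| * |b|); returns 0 if either vector is all zeros.
    public static double CosineSimilarity(double[] a, double[] b)
    {
        if (a.Length != b.Length)
            throw new ArgumentException("Vectors must have the same length.");

        double dot = 0, normA = 0, normB = 0;
        for (int i = 0; i < a.Length; i++)
        {
            dot += a[i] * b[i];
            normA += a[i] * a[i];
            normB += b[i] * b[i];
        }
        if (normA == 0 || normB == 0) return 0;
        return dot / (Math.Sqrt(normA) * Math.Sqrt(normB));
    }

    // Returns the stored text whose embedding is most similar to the query vector.
    public static string FindClosest(
        IReadOnlyDictionary<string, double[]> store, double[] query)
    {
        if (store.Count == 0)
            throw new InvalidOperationException("The store is empty.");

        return store
            .OrderByDescending(entry => CosineSimilarity(entry.Value, query))
            .First()
            .Key;
    }

    public static void Main()
    {
        // Made-up 3-dimensional "embeddings"; real ones come from an embedding model.
        var store = new Dictionary<string, double[]>
        {
            ["Invoices are due within 30 days."] = new[] { 0.9, 0.1, 0.0 },
            ["Support is available on weekdays."] = new[] { 0.1, 0.9, 0.2 },
        };
        var query = new[] { 0.8, 0.2, 0.1 };
        Console.WriteLine(FindClosest(store, query));
    }
}
```

In this sketch the query vector points in nearly the same direction as the first entry, so the invoice sentence is the one retrieved and could then be added to a prompt as context.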

Reference: Microsoft Semantic Kernel

Key Benefits

- Easy to Integrate: Semantic Kernel is designed to seamlessly integrate into any type of application, allowing you to incorporate and test LLMs with ease.

- Easy to Extend: Semantic Kernel can be connected to various data sources and services, so your application can combine natural language processing with live business data.

- Better Prompting: Semantic Kernel’s templated prompts let you quickly design semantic functions, which are reusable prompts whose placeholders are filled in at run time.

- Novel, Yet Familiar: With Semantic Kernel, you can write native code to engineer your prompts, giving you complete control over the design process.

How to Integrate?

To integrate Semantic Kernel into your application, follow these steps:

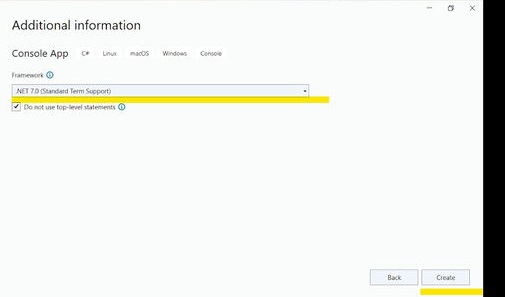

1. Open Visual Studio and select “Console App”. Enter a project name and select the desired framework (e.g., .NET 7). Click on “Create” to create the project.

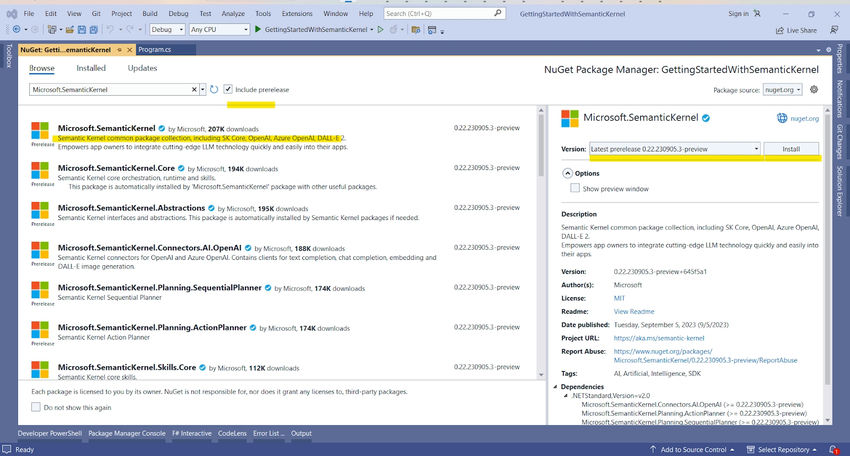

2. Open the NuGet Package Manager, search for Microsoft.SemanticKernel, and tick “Include prerelease”, because at the time of writing the package is available only as a pre-release version.

3. Import the necessary packages into your code:

using Microsoft.SemanticKernel;

4. Initialize Semantic Kernel and call the “.WithAzureChatCompletionService” method to use an Azure OpenAI model. The arguments are the deployment name, the endpoint URL, and the API key:

IKernel kernel = new KernelBuilder()
    .WithAzureChatCompletionService("ChatpGPT", "https://....openai.azure.com/", "...API KEY...")
    .Build();

5. Create a semantic function from a simple prompt. The “{{$input}}” placeholder is replaced with the value you pass when invoking the function:

var func = kernel.CreateSemanticFunction(
    "List the two planets closest to '{{$input}}', excluding moons, using bullet points.");

6. Invoke the semantic function and print the model’s response:

var result = await func.InvokeAsync("Jupiter");
Console.WriteLine(result);
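To see what the model actually receives in steps 5 and 6, the following sketch mimics the substitution of “{{$input}}” in plain C#. It is an assumption-laden illustration, not the SDK’s template engine: PromptTemplateSketch and Render are hypothetical names, and only simple “{{$name}}” placeholders are handled.

```csharp
using System;
using System.Collections.Generic;
using System.Text.RegularExpressions;

// Hypothetical helper that mimics {{$name}} substitution; not the SDK's template engine.
public static class PromptTemplateSketch
{
    // Matches placeholders such as {{$input}} and captures the variable name.
    private static readonly Regex Placeholder = new Regex(@"\{\{\$(\w+)\}\}");

    public static string Render(string template, IReadOnlyDictionary<string, string> variables)
    {
        return Placeholder.Replace(template, match =>
        {
            string name = match.Groups[1].Value;
            // Unknown variables render as an empty string in this sketch.
            return variables.TryGetValue(name, out var value) ? value : string.Empty;
        });
    }

    public static void Main()
    {
        const string template =
            "List the two planets closest to '{{$input}}', excluding moons, using bullet points.";
        var variables = new Dictionary<string, string> { ["input"] = "Jupiter" };

        // Prints the prompt with 'Jupiter' in place of the placeholder.
        Console.WriteLine(Render(template, variables));
    }
}
```

Keeping the prompt as a template means the same semantic function can be reused with any input value, for example another planet name.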

Putting it all together, the code will look like this:

using System;
using System.Threading.Tasks;
using Microsoft.SemanticKernel;

class Program
{
    static async Task Main(string[] args)
    {
        Console.WriteLine("======== Using Chat GPT model for text completion ========");

        // Arguments: Azure OpenAI deployment name, endpoint URL, API key.
        IKernel kernel = new KernelBuilder()
            .WithAzureChatCompletionService("ChatpGPT", "https://....openai.azure.com/", "...API KEY...")
            .Build();

        // {{$input}} is replaced with the value passed to InvokeAsync.
        var func = kernel.CreateSemanticFunction(
            "List the two planets closest to '{{$input}}', excluding moons, using bullet points.");

        var result = await func.InvokeAsync("Jupiter");
        Console.WriteLine(result);
    }
}
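The example passes the endpoint and API key as string literals. One common alternative, sketched below, is to read them from environment variables so that secrets stay out of source control. This is a suggestion rather than something the SDK requires: the AzureOpenAISettings class and the variable names AZURE_OPENAI_DEPLOYMENT, AZURE_OPENAI_ENDPOINT, and AZURE_OPENAI_API_KEY are hypothetical choices made for this illustration.

```csharp
using System;

// Hypothetical settings holder; the environment variable names are assumptions.
public sealed class AzureOpenAISettings
{
    public string DeploymentName { get; }
    public string Endpoint { get; }
    public string ApiKey { get; }

    private AzureOpenAISettings(string deploymentName, string endpoint, string apiKey)
    {
        DeploymentName = deploymentName;
        Endpoint = endpoint;
        ApiKey = apiKey;
    }

    // Reads all three values and fails fast if any of them is missing.
    public static AzureOpenAISettings FromEnvironment()
    {
        return new AzureOpenAISettings(
            Require("AZURE_OPENAI_DEPLOYMENT"),
            Require("AZURE_OPENAI_ENDPOINT"),
            Require("AZURE_OPENAI_API_KEY"));
    }

    private static string Require(string name)
    {
        var value = Environment.GetEnvironmentVariable(name);
        if (string.IsNullOrWhiteSpace(value))
            throw new InvalidOperationException($"Environment variable '{name}' is not set.");
        return value;
    }
}

public static class SettingsDemo
{
    public static void Main()
    {
        try
        {
            var settings = AzureOpenAISettings.FromEnvironment();
            // The three values would replace the string literals passed to
            // WithAzureChatCompletionService in the example above.
            Console.WriteLine($"Using deployment '{settings.DeploymentName}' at {settings.Endpoint}");
        }
        catch (InvalidOperationException ex)
        {
            Console.WriteLine(ex.Message);
        }
    }
}
```

Failing fast with a clear message makes a missing setting easier to diagnose than an authentication error returned later by the service.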

Run the console app to see the results:

Output:

- Saturn
- Uranus

Summary

In this article, we introduced Microsoft Semantic Kernel, a lightweight open-source SDK that lets you integrate Large Language Models (LLMs) into your existing .NET apps. We discussed the available vector database connectors, which are used to store memory and prompt context. Lastly, we explored the benefits of Semantic Kernel and walked through a simple example program that uses an Azure OpenAI model in a .NET 7 Console App.

To learn more about Microsoft Semantic Kernel, please visit the Microsoft Learn website.
