Azure Logic Apps Standard (Let’s Dig Deep)

Hello People!

Let’s dive into the inner workings of the newly launched (GA) Azure Logic Apps Standard runtime and try to understand how Microsoft runs the show behind the scenes.

By now you are probably aware that it comes with two types of workflows, i.e. stateful and stateless, and that it is built on top of Azure Function App extensions. This means Logic Apps Standard can be deployed and executed anywhere a Function App can run. The way I see it, the future is going to be multi-cloud/on-premises. The GA release already supports local execution of workflows, but only in VS Code.

I’ve been working with it for the past eight months, starting while it was still in public preview. In this article, I’m sharing my understanding based on that experience and on what I’ve learned from the Microsoft documentation.

What will you learn from this article?

- Logic App Standard runtime

- ASP plans (WS1, WS2 & WS3) and what to consider for throughput

- Important runtime configuration settings for high throughput in host.json

Logic App Standard runtime

When you create a Logic App Standard resource, you are also asked to associate a storage account, either an existing one or a new one (similar to Function App creation).

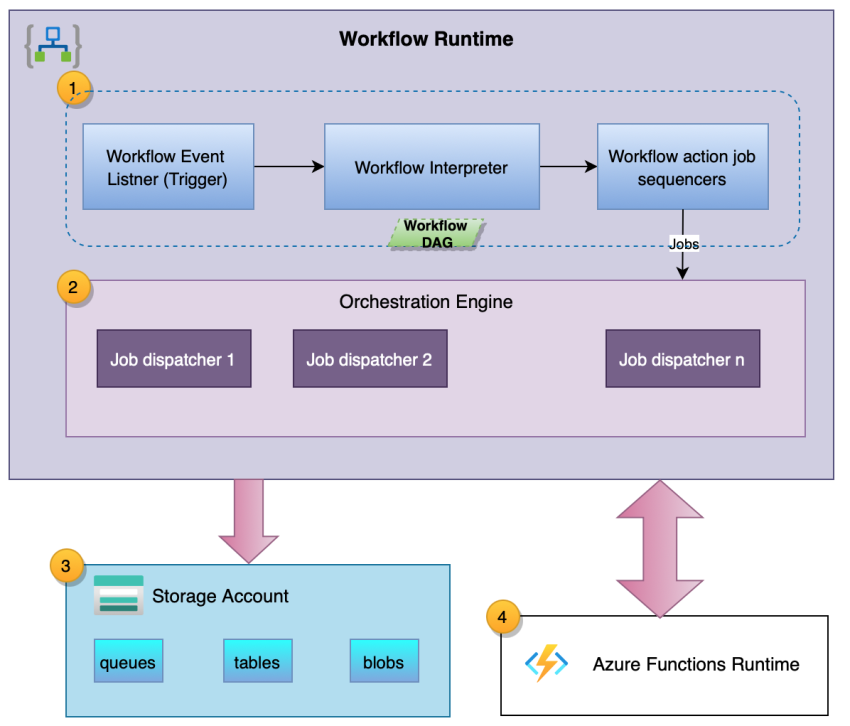

Below is a diagrammatic representation of the Logic Apps Standard runtime, and we’ll see how it works internally. This runtime also uses a storage account (queue, table & blob) for stateful workflows only.

Logic App Standard runtime

Let’s explore and understand it point by point, as marked in the figure above:

# 1) In the new Logic Apps Standard resource, everything defined within the workflow (actions) executes as jobs.

The new runtime converts each workflow into a DAG (directed acyclic graph) of jobs using the job sequencer, and the complexity of that DAG varies with the steps defined in the workflow. Please note that this happens only when a request/event arrives for the workflow trigger.

Suppose you have used three workflows to develop and deploy a business process, as shown in the example below:

WF: workflow

Business process = WF1 + WF2 + WF3

WF1 (Parent) → nested call → WF2 (Child 1) → nested call → WF3 (Child 2)

Now the runtime creates a DAG of jobs for the workflow actions, using the workflow job sequencer, in the following order:

- Create the DAG of jobs for WF1 and execute them. (Job execution is covered in more detail in the next step.)

- At the end, WF1 invokes the trigger of the next workflow, WF2 (Child 1).

- Create the DAG of jobs for WF2 and execute them.

- At the end, WF2 invokes the trigger of the next workflow, WF3 (Child 2).

- Create the DAG of jobs for WF3 and execute them.
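To make the ordering above concrete, here is a small Python sketch. It is only my mental model, not the actual job sequencer: the action names (`validate`, `enrich`, `call_WF2` and so on) are made up, and a plain topological sort stands in for whatever the runtime really does.

```python
from graphlib import TopologicalSorter

# Hypothetical workflows: each maps an action (job) to the actions it depends on.
# All action names are invented for illustration.
WORKFLOWS = {
    "WF1": {"trigger": [], "validate": ["trigger"], "call_WF2": ["validate"]},
    "WF2": {"trigger": [], "enrich": ["trigger"], "call_WF3": ["enrich"]},
    "WF3": {"trigger": [], "persist": ["trigger"], "respond": ["persist"]},
}


def sequence_jobs(actions):
    """Return the jobs of one workflow in an order that respects dependencies."""
    return list(TopologicalSorter(actions).static_order())


def run_business_process(start="WF1"):
    """Follow the nested calls, building each DAG only when its trigger fires."""
    executed = []
    current = start
    while current is not None:
        next_workflow = None
        for job in sequence_jobs(WORKFLOWS[current]):
            executed.append(f"{current}.{job}")
            if job.startswith("call_"):
                # A nested call fires the trigger of the child workflow.
                next_workflow = job[len("call_"):]
        current = next_workflow
    return executed
```

Calling `run_business_process()` lists the WF1 jobs first, then WF2, then WF3, which matches the five steps above: the DAG for a child workflow is built only after its parent has invoked it.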

# 2) Orchestration engine (Job dispatcher)

For stateful workflows, the orchestration engine schedules the jobs produced by the job sequencer by keeping them in storage queues. Within the orchestration engine, multiple job dispatcher worker instances run at the same time, and these worker instances can in turn run on multiple compute nodes (when the Logic App Standard resource uses more than one instance).

The default settings for the orchestration engine on the storage account are:

- single message queue

- single partition

Please note that for stateless workflows, the orchestration engine manages job execution in memory instead of using a storage queue. That is why stateless workflows perform better. The trade-off is resilience: if the Logic Apps Standard runtime restarts for any reason, you lose the state of the running instances of stateless workflows.
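Here is a rough sketch of the dispatcher idea, under my own assumptions: several workers pull jobs from one shared queue until it is empty. Python's in-process `queue.Queue` stands in for the queue, and `dispatch` and its parameters are hypothetical names. Because the queue lives in memory, this is closest to the stateless case: if the process dies, the pending jobs are gone.

```python
import queue
import threading


def dispatch(jobs, num_workers=4):
    """Hand every job to exactly one of several dispatcher workers."""
    pending = queue.Queue()
    for job in jobs:
        pending.put(job)

    done = []
    lock = threading.Lock()

    def worker(worker_id):
        while True:
            try:
                job = pending.get_nowait()
            except queue.Empty:
                return  # nothing left to pick up
            with lock:
                done.append((worker_id, job))  # "execute" the job

    threads = [threading.Thread(target=worker, args=(i,)) for i in range(num_workers)]
    for thread in threads:
        thread.start()
    for thread in threads:
        thread.join()
    return done
```

Which worker picks up which job varies from run to run, but every job is picked up once. For stateful workflows, picture the same loop reading from a storage queue that survives a restart.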

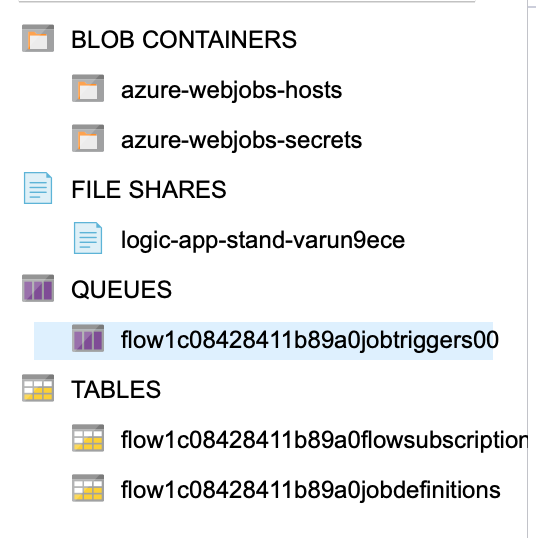

# 3) Let’s see what exactly is happening inside the storage account:

queue

In point #2 above, we saw the purpose of the queue and how the Logic App Standard orchestration engine uses it to schedule the workflow DAG jobs created by the job sequencer. To increase the efficiency of the job dispatchers, the number of queues can be increased in host.json. Consider the latency of the workflow’s job executions, CPU utilization %, and memory utilization % before increasing the number of queues; otherwise it will slow down the overall processing time.

table

The Logic App Standard runtime uses it to store workflow definitions along with host.json files. In addition, it stores each job’s checkpoint state there after every run to support the retry policy at the action level, which also ensures “at least once” execution for each action. Along with the state of the job, it also stores the job’s input and output in the table.
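The checkpoint idea is easier to see in code. This is a minimal sketch, assuming a plain dict in place of the storage table; the function name, the record fields (`status`, `attempts`, `output`) and the retry count are all hypothetical, not the runtime's real schema.

```python
def run_with_checkpoints(jobs, checkpoints, max_attempts=3):
    """Run jobs in order, skipping any that are already checkpointed as succeeded.

    `jobs` is a list of (name, callable) pairs. `checkpoints` is a dict that
    stands in for the storage table and is kept between calls.
    """
    for name, action in jobs:
        if checkpoints.get(name, {}).get("status") == "Succeeded":
            continue  # finished in an earlier run, so do not repeat it
        for attempt in range(1, max_attempts + 1):
            try:
                output = action()
            except Exception as error:
                # Record the failure, then retry while attempts remain.
                checkpoints[name] = {"status": "Failed", "attempts": attempt,
                                     "error": str(error)}
            else:
                checkpoints[name] = {"status": "Succeeded", "attempts": attempt,
                                     "output": output}
                break
        else:
            raise RuntimeError(f"{name} failed after {max_attempts} attempts")
    return checkpoints
```

An action that fails on its first attempt runs a second time, so it executes at least once and possibly more. On a later call with the same `checkpoints`, the jobs that already succeeded are skipped.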

blob

If a job’s input and output are large, the Logic App Standard runtime stores them in blob storage instead.

Below is a screenshot of the storage account that I created along with the Logic Apps Standard resource. You can clearly see that Azure has created one default queue and a job definition table to support job execution in the Logic Apps Standard runtime.

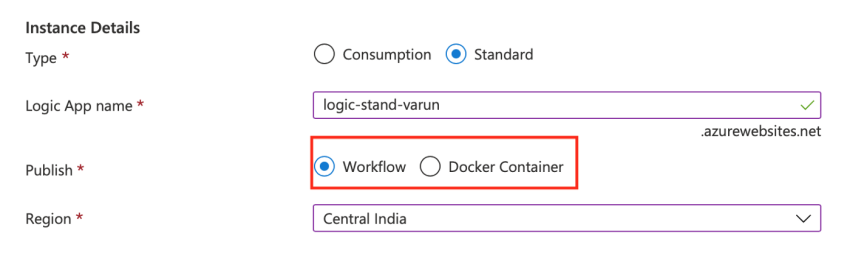

# 4) The orchestration engine uses the underlying Azure Functions runtime environment to execute the jobs. While creating the Logic App Standard resource, you can choose either workflow or Docker container as the underlying Function App runtime hosting environment.

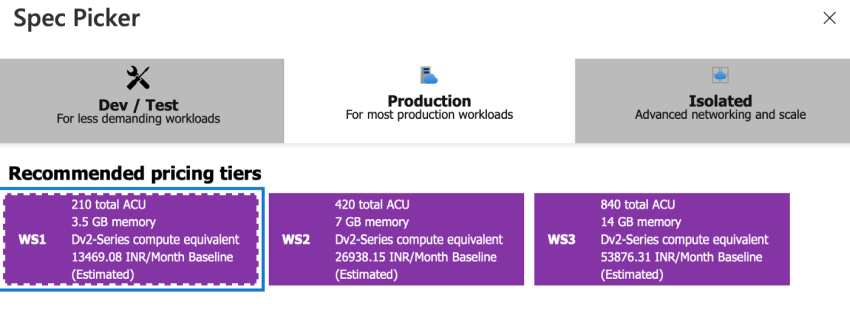

Available hosting ASP plans

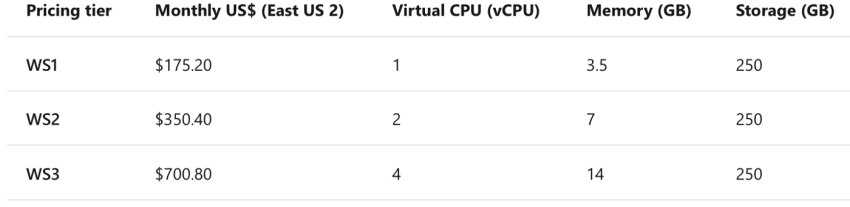

Currently, it comes with three production-level ASP plans (WS1, WS2 & WS3).

Select one of them according to the throughput you want to maintain.

Please note that vCPU indicates the number of cores available in a single instance. It helps you estimate the maximum number of concurrent jobs, because the limit per core is set by Jobs.BackgroundJobs.NumWorkersPerProcessorCount in host.json, which is 192 by default. Depending on the latency of the workflow’s job executions, CPU utilization %, and memory utilization %, this value can be increased or decreased.

If CPU utilization reaches 70%, you can decrease this number to reduce the number of concurrent job runs (throughput). If CPU utilization is low, the compute is under-utilized and you can increase this number to maximize concurrent job runs (throughput).
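As a back-of-the-envelope check, the indicative ceiling is just cores × instances × workers per core. The sketch below assumes 1, 2 and 4 vCPUs for WS1, WS2 and WS3, which is my reading of the plan table; please confirm it against the plans offered in your region. The function name is hypothetical, and the result is only an indicative upper bound, not a guaranteed throughput.

```python
# Default of Jobs.BackgroundJobs.NumWorkersPerProcessorCount, as stated above.
DEFAULT_WORKERS_PER_CORE = 192

# Assumed vCPU count per plan (not taken from this article's text); verify it.
ASSUMED_VCPUS = {"WS1": 1, "WS2": 2, "WS3": 4}


def max_concurrent_jobs(plan, instances=1, workers_per_core=DEFAULT_WORKERS_PER_CORE):
    """Indicative ceiling on concurrently running jobs for a plan."""
    return ASSUMED_VCPUS[plan] * instances * workers_per_core


for plan in ("WS1", "WS2", "WS3"):
    print(plan, max_concurrent_jobs(plan))
```

Under these assumptions, a single WS1 instance tops out at 192 concurrent jobs and a single WS3 instance at 768. Lowering `workers_per_core` is the knob to reach for when CPU stays around 70%.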

Important runtime configuration settings (for throughput)

| Setting | Default | Purpose |
| --- | --- | --- |
| Jobs.BackgroundJobs.DispatchingWorkersPulseInterval | 1 sec | Adjusts the polling interval at which the job dispatcher picks messages (the next job) from the storage queue. |
| Jobs.BackgroundJobs.NumWorkersPerProcessorCount | 192 | Adjusts or restricts the number of concurrent workflow job runs (throughput) per core. |
| Jobs.BackgroundJobs.NumPartitionsInJobTriggersQueue | 1 | Helps only when multiple job dispatchers are running on a large number of instances across multiple compute nodes behind the scenes. |

Please note that the settings above can be set in the host.json file.
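For reference, here is a small Python sketch that prints a host.json fragment with these three settings at their defaults. Two things here are my own understanding rather than something stated in this article, so verify them against the official documentation: the `extensions` → `workflow` → `settings` nesting, and the `hh:mm:ss` format for the pulse interval. `build_host_json` is a hypothetical helper name.

```python
import json

# Defaults as listed above; the interval format "00:00:01" is an assumption.
DEFAULTS = {
    "Jobs.BackgroundJobs.DispatchingWorkersPulseInterval": "00:00:01",
    "Jobs.BackgroundJobs.NumWorkersPerProcessorCount": 192,
    "Jobs.BackgroundJobs.NumPartitionsInJobTriggersQueue": 1,
}


def build_host_json(settings):
    """Wrap runtime settings in the (assumed) host.json layout, values as strings."""
    return {
        "version": "2.0",
        "extensions": {
            "workflow": {
                "settings": {key: str(value) for key, value in settings.items()}
            }
        },
    }


print(json.dumps(build_host_json(DEFAULTS), indent=2))
```

A real host.json contains other entries too, so merge the `settings` block into your existing file rather than replacing the file with this output.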

Thanks!