Convert Text to Audio using Azure and .NET 8

In the ever-evolving landscape of technology, accessibility remains a critical focus. As developers, it's our responsibility to ensure that our applications are usable by individuals of all abilities.

Text-to-audio conversion, powered by Azure Cognitive Services, offers a powerful solution to this challenge. In this article, we look at how to integrate text-to-audio functionality into .NET applications, exploring its implementation, use cases, and the impact it can have on accessibility.

Use Cases and Applications

- Narrating Content for Visually Impaired Users: By integrating text-to-audio functionality, applications can audibly convey website content, documents, or educational materials, thereby enhancing accessibility for visually impaired individuals.

- Interactive Voice Response Systems: Incorporating natural-sounding speech into IVR systems enriches user experience, offering intuitive navigation through menus, prompts, and feedback mechanisms.

- Instructions and Notifications: Text-to-audio conversion helps reduce cognitive strain by audibly delivering instructions, alerts, or notifications within applications, thereby reducing reliance on visual interaction.

- Language Learning Applications: Text-to-audio capabilities facilitate pronunciation guidance, text passage narration, and listening exercises within language learning applications, fostering enhanced language acquisition.

Setting Up Azure Cognitive Services

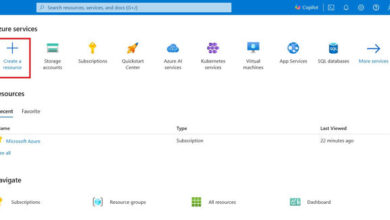

The first step is to create an Azure Cognitive Services resource.

- Sign up for a free Azure account if you don't already have one.

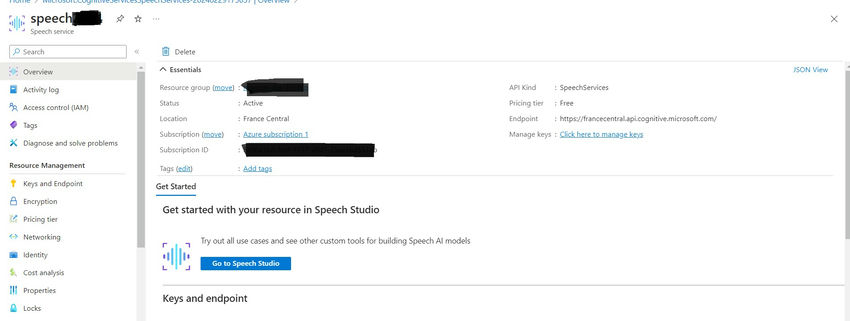

- Open the Azure portal and create a new Speech Service resource.

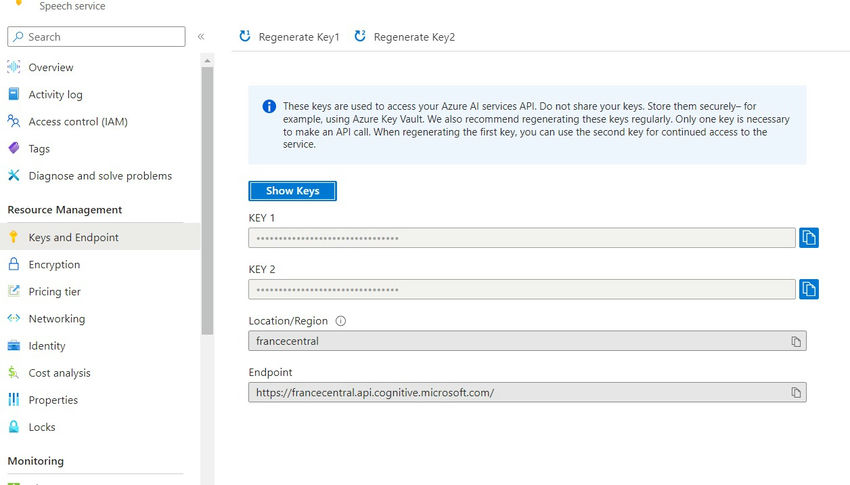

After the resource is ready, make a note of Key 1 and the Location/Region from the ‘Keys and Endpoint’ tab of the resource. These details are required to connect your application to the Text-to-Speech service.
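Rather than pasting the key into source code, one option is to read both values from environment variables. The sketch below is only an illustration: the `SpeechSettings` class and the variable names `SPEECH_KEY` and `SPEECH_REGION` are assumptions made for this example, not something Azure requires.

```csharp
using System;

// Hypothetical helper, not part of the Speech SDK.
public static class SpeechSettings
{
    // Reads the key and region from environment variables.
    // SPEECH_KEY and SPEECH_REGION are assumed names; use whatever your setup defines.
    public static (string Key, string Region) LoadFromEnvironment()
    {
        string key = Require("SPEECH_KEY");
        string region = Require("SPEECH_REGION");
        return (key, region);
    }

    // Returns the trimmed value, or throws if the variable is missing or blank.
    private static string Require(string name)
    {
        string? value = Environment.GetEnvironmentVariable(name);
        if (string.IsNullOrWhiteSpace(value))
        {
            throw new InvalidOperationException($"Environment variable '{name}' is not set.");
        }
        return value.Trim();
    }
}
```

The returned pair can then be passed to `SpeechConfig.FromSubscription(key, region)`, which keeps the key out of source control.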

Integrating Azure Speech service with .NET

Create a .NET 8 Console App project in Visual Studio, and then install the Microsoft.CognitiveServices.Speech NuGet package.

Or, from the command line:

dotnet add package Microsoft.CognitiveServices.Speech

Sample Code Snippet of Program.cs

    using Microsoft.CognitiveServices.Speech;
    // SoundPlayer is Windows-only; on .NET 8 it ships in the System.Windows.Extensions package
    using System.Media;

    namespace TextToSpeech.Azure.NET8
    {
        public class Program
        {
            public static async Task Main()
            {
                try
                {
                    await SynthesizeAndPlayAudioAsync();
                }
                catch (Exception ex)
                {
                    Console.WriteLine($"An error occurred: {ex.Message}");
                }
            }

            private static async Task SynthesizeAndPlayAudioAsync()
            {
                // Load configuration from secure storage or app settings
                string key = "";
                string region = "";

                var speechConfig = SpeechConfig.FromSubscription(key, region);

                Console.WriteLine("Enter the text to synthesize:");
                string text = Console.ReadLine() ?? string.Empty;

                Console.WriteLine("Choose a voice:");
                Console.WriteLine("1. en-US-GuyNeural");
                Console.WriteLine("2. en-US-JennyNeural");
                Console.WriteLine("3. en-US-AriaNeural");
                string? voiceChoice = Console.ReadLine();

                string voiceName;
                switch (voiceChoice)
                {
                    case "1":
                        voiceName = "en-US-GuyNeural";
                        break;
                    case "2":
                        voiceName = "en-US-JennyNeural";
                        break;
                    case "3":
                        voiceName = "en-US-AriaNeural";
                        break;
                    default:
                        voiceName = "en-US-GuyNeural"; // Default to GuyNeural
                        break;
                }

                speechConfig.SetProperty(PropertyId.SpeechServiceConnection_SynthVoice, voiceName);

                // Passing null as the audio config keeps the SDK from also playing
                // the audio through the default speaker
                using var synthesizer = new SpeechSynthesizer(speechConfig, null);
                using var memoryStream = new MemoryStream();

                synthesizer.SynthesisCompleted += (s, e) =>
                {
                    if (e.Result.Reason == ResultReason.SynthesizingAudioCompleted)
                    {
                        // Copy the synthesized WAV bytes into the stream before playing them
                        memoryStream.Write(e.Result.AudioData, 0, e.Result.AudioData.Length);
                        memoryStream.Seek(0, SeekOrigin.Begin);

                        using var player = new SoundPlayer(memoryStream);
                        player.PlaySync();
                    }
                    else
                    {
                        Console.WriteLine($"Speech synthesis failed: {e.Result.Reason}");
                    }
                };

                await synthesizer.SpeakTextAsync(text);
            }
        }
    }

Replace the key and region values with your own from ‘Keys and Endpoint’.

- Main Method: The entry point of the program, where asynchronous execution begins. It calls the SynthesizeAndPlayAudioAsync method.

- SynthesizeAndPlayAudioAsync Method: This method handles the speech synthesis and audio playback logic asynchronously.

  - It initializes the SpeechConfig object using a subscription key and region.

  - It prompts the user to enter the text to synthesize and select a voice from a predefined list.

  - Based on the user’s voice choice, it sets the appropriate voice for synthesis.

  - It creates a SpeechSynthesizer object with the configured SpeechConfig.

  - It subscribes to the SynthesisCompleted event of the SpeechSynthesizer to handle audio playback.

  - When synthesis completes, it plays the synthesized audio using a SoundPlayer.

- Exception Handling: The program catches and displays any exceptions that occur during execution.
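The catch block only sees exceptions. A rejected key or wrong region typically comes back as a result whose reason is `Canceled` rather than as an exception, so it can help to inspect the result returned by `SpeakTextAsync` as well. The following is a minimal sketch of that check; the `SynthesisDiagnostics` class and `SpeakAndReportAsync` method are hypothetical names introduced for this example, and the code assumes the Microsoft.CognitiveServices.Speech package is installed.

```csharp
using System;
using System.Threading.Tasks;
using Microsoft.CognitiveServices.Speech;

// Hypothetical helper, not part of the Speech SDK.
public static class SynthesisDiagnostics
{
    // Synthesizes the text and returns true on success.
    // On cancellation it prints the reason and, for errors, the code and details.
    public static async Task<bool> SpeakAndReportAsync(SpeechSynthesizer synthesizer, string text)
    {
        using SpeechSynthesisResult result = await synthesizer.SpeakTextAsync(text);

        if (result.Reason == ResultReason.SynthesizingAudioCompleted)
        {
            Console.WriteLine($"Synthesized {result.AudioData.Length} bytes of audio.");
            return true;
        }

        if (result.Reason == ResultReason.Canceled)
        {
            var details = SpeechSynthesisCancellationDetails.FromResult(result);
            Console.WriteLine($"Synthesis canceled: {details.Reason}");

            if (details.Reason == CancellationReason.Error)
            {
                Console.WriteLine($"Error code: {details.ErrorCode}");
                Console.WriteLine($"Error details: {details.ErrorDetails}");
            }
        }

        return false;
    }
}
```

A caller could use `await SynthesisDiagnostics.SpeakAndReportAsync(synthesizer, text)` in place of the bare `SpeakTextAsync` call when diagnosing configuration problems.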

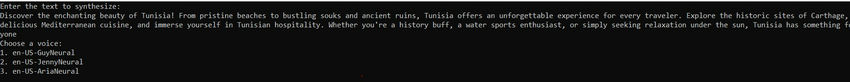

Testing the conversion

Build and run the application. Once it is running, it converts the text you enter into speech and plays the result back immediately. The synthesized audio can also be saved to an audio file for later use, although the sample above does not do so.
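If you want the audio in a file rather than played back, one approach is to give the synthesizer a WAV file output. This is a sketch under stated assumptions, not the article's original program: the file name `output.wav`, the sample sentence, and the `SPEECH_KEY` and `SPEECH_REGION` environment variables are all placeholders chosen for illustration.

```csharp
using System;
using System.Threading.Tasks;
using Microsoft.CognitiveServices.Speech;
using Microsoft.CognitiveServices.Speech.Audio;

public static class Program
{
    public static async Task Main()
    {
        // Assumed environment variable names; substitute your own configuration source.
        string key = Environment.GetEnvironmentVariable("SPEECH_KEY") ?? "";
        string region = Environment.GetEnvironmentVariable("SPEECH_REGION") ?? "";

        var speechConfig = SpeechConfig.FromSubscription(key, region);
        speechConfig.SpeechSynthesisVoiceName = "en-US-JennyNeural";

        // Route the synthesized audio to a WAV file instead of the speaker.
        using var audioConfig = AudioConfig.FromWavFileOutput("output.wav");
        using var synthesizer = new SpeechSynthesizer(speechConfig, audioConfig);

        using var result = await synthesizer.SpeakTextAsync("Hello from Azure Speech.");

        if (result.Reason == ResultReason.SynthesizingAudioCompleted)
        {
            Console.WriteLine("Audio written to output.wav");
        }
        else
        {
            Console.WriteLine($"Speech synthesis failed: {result.Reason}");
        }
    }
}
```

Writing to a file this way avoids the Windows-only SoundPlayer dependency, which may matter if the application needs to run on other platforms.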

The following passage was converted from text to speech:

Discover the enchanting beauty of Tunisia! From pristine beaches to bustling souks and historic ruins, Tunisia offers an unforgettable experience for every traveler. Explore the historic sites of Carthage, savor delicious Mediterranean cuisine, and immerse yourself in Tunisian hospitality. Whether you're a history buff, a water sports enthusiast, or simply seeking relaxation under the sun, Tunisia has something for everyone.

You can view the result in this video: https://vimeo.com/918035859

Conclusion

The integration of Azure Cognitive Services with .NET offers a powerful solution for converting text to audio seamlessly. By leveraging Azure’s robust infrastructure and .NET’s flexibility, developers can enhance accessibility and enrich user experience across a wide range of applications.

This integration not only facilitates accessibility for users with visual impairments but also opens up new avenues for delivering content in natural-sounding audio formats, ultimately broadening the reach and impact of digital applications.

References: https://learn.microsoft.com/en-us/azure/ai-services/speech-service/text-to-speech

Thanks for reading. Please let me know your questions, thoughts, or feedback in the comments section. I appreciate your feedback and encouragement.

Happy Documenting!
