Scaling Azure Databricks Secure Network Access to Azure Data Lake Storage

Introduction

In today’s data-driven landscape, organizations increasingly rely on powerful analytics platforms like Azure Databricks to derive insights from vast amounts of data. However, ensuring secure network access to data storage is paramount. This article delves into the intricacies of scaling Azure Databricks secure network access to Azure Data Lake Storage, offering a comprehensive guide along with practical examples.

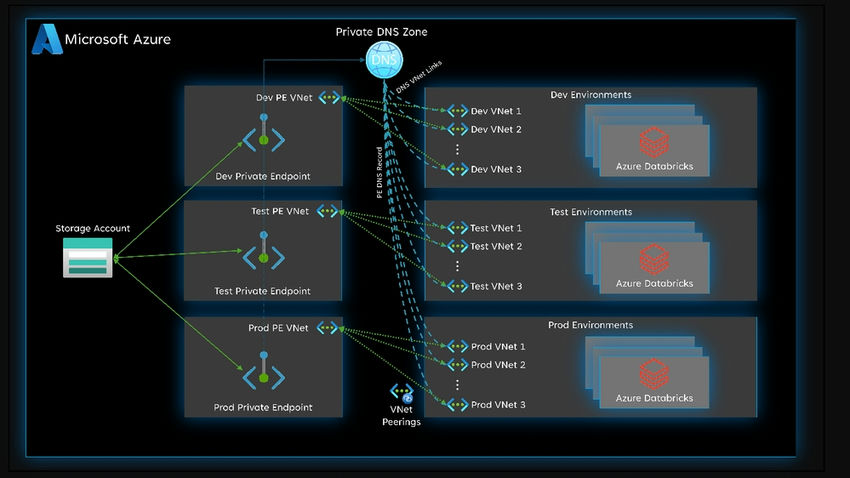

[Image © Microsoft Azure]

Understanding the Importance of Secure Network Access

Before we dive into the technical aspects, it's crucial to understand why secure network access is essential for Azure Databricks when interacting with Azure Data Lake Storage. Data breaches and unauthorized access can have severe consequences, ranging from regulatory non-compliance to reputational damage. Establishing a robust security framework ensures that sensitive data remains protected, maintaining trust and compliance.

Overview of Azure Databricks and Azure Data Lake Storage

Let’s begin by briefly outlining the key components of Azure Databricks and Azure Data Lake Storage.

- Azure Databricks: Azure Databricks is a cloud-based analytics platform integrated with Microsoft Azure. It facilitates collaborative and scalable data science and analytics through a shared workspace and powerful computing resources.

- Azure Data Lake Storage: Azure Data Lake Storage is a highly scalable and secure data lake solution that allows organizations to store and analyze vast amounts of data. It’s designed to handle structured and unstructured data, making it an ideal choice for big data analytics.

Ensuring Secure Network Access

Let’s explore the steps to scale secure network access between Azure Databricks and Azure Data Lake Storage.

Setting Up Virtual Networks

- Begin by creating virtual networks for both Azure Databricks and Azure Data Lake Storage.

- Configure the virtual networks to allow communication between the two services securely.

Example

# Create a virtual network for Azure Databricks
az network vnet create --resource-group MyResourceGroup --name DatabricksVNet --address-prefixes 10.0.0.0/16 --subnet-name DatabricksSubnet --subnet-prefixes 10.0.0.0/24

# Create a virtual network for Azure Data Lake Storage
az network vnet create --resource-group MyResourceGroup --name StorageVNet --address-prefixes 10.1.0.0/16 --subnet-name StorageSubnet --subnet-prefixes 10.1.0.0/24
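Before connecting the two virtual networks, it can help to confirm that their address spaces do not overlap, since overlapping ranges prevent the networks from being peered. The following is a minimal sketch using only the Python standard library; the helper name `address_spaces_overlap` is an assumption made for illustration, and the CIDR values are the ones used in the commands above.

```python
import ipaddress


def address_spaces_overlap(cidr_a: str, cidr_b: str) -> bool:
    """Return True when the two CIDR ranges share at least one address."""
    net_a = ipaddress.ip_network(cidr_a)
    net_b = ipaddress.ip_network(cidr_b)
    return net_a.overlaps(net_b)


# Address spaces taken from the two "az network vnet create" commands.
databricks_vnet = "10.0.0.0/16"
storage_vnet = "10.1.0.0/16"

if address_spaces_overlap(databricks_vnet, storage_vnet):
    print("Address spaces overlap; choose different ranges.")
else:
    print("Address spaces are disjoint.")
```

Running a check like this before provisioning is cheaper than discovering the conflict after both networks already host resources.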

Configuring Network Security Groups (NSGs)

- Use NSGs to control inbound and outbound traffic to and from the virtual networks.

- Define rules to allow specific communication between Azure Databricks and Azure Data Lake Storage.

Example

# Allow traffic from the Databricks subnet to the Storage subnet
az network nsg rule create --resource-group MyResourceGroup --nsg-name DatabricksNSG --name AllowStorageAccess --priority 100 --source-address-prefixes 10.0.0.0/24 --destination-address-prefixes 10.1.0.0/24 --destination-port-ranges 443 --direction Outbound --access Allow --protocol Tcp
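NSG rules are evaluated in priority order, lowest number first, and the first matching rule decides the outcome. The sketch below is a simplified model of that behavior, not Azure's implementation: the `Rule` class, the `evaluate` function, and the extra `DenyAllToStorage` rule are hypothetical, and Azure's built-in default rules are deliberately left out (an unmatched flow returns `None`).

```python
import ipaddress
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class Rule:
    name: str
    priority: int
    source: str       # CIDR range
    destination: str  # CIDR range
    port: int
    access: str       # "Allow" or "Deny"


def evaluate(rules: List[Rule], src_ip: str, dst_ip: str, port: int) -> Optional[str]:
    """Return the access of the first matching rule, lowest priority number first."""
    src = ipaddress.ip_address(src_ip)
    dst = ipaddress.ip_address(dst_ip)
    for rule in sorted(rules, key=lambda r: r.priority):
        if (src in ipaddress.ip_network(rule.source)
                and dst in ipaddress.ip_network(rule.destination)
                and port == rule.port):
            return rule.access
    return None  # would fall through to Azure's default rules (not modeled)


rules = [
    Rule("DenyAllToStorage", 200, "10.0.0.0/16", "10.1.0.0/24", 443, "Deny"),
    Rule("AllowStorageAccess", 100, "10.0.0.0/24", "10.1.0.0/24", 443, "Allow"),
]

print(evaluate(rules, "10.0.0.5", "10.1.0.4", 443))  # from the Databricks subnet
print(evaluate(rules, "10.0.1.5", "10.1.0.4", 443))  # from elsewhere in the VNet
```

The model makes it easy to see why a narrow allow rule needs a lower priority number than any broader deny rule it is meant to override.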

Private Link for Azure Data Lake Storage

- Implement Azure Private Link to access Azure Data Lake Storage over a private network connection.

- This ensures that data never traverses the public internet, enhancing security.

Example

# Create a private endpoint for Azure Data Lake Storage
# ($datalakeResourceId must hold the resource ID of the storage account)
az network private-endpoint create --resource-group MyResourceGroup --name StoragePrivateEndpoint --vnet-name StorageVNet --subnet StorageSubnet --private-connection-resource-id $datalakeResourceId --connection-name StoragePrivateConnection --group-id blob
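One way to confirm that traffic is using the private endpoint is to check that the storage hostname resolves to a private IP address from inside the virtual network. The sketch below assumes a hypothetical helper, `resolves_to_private_ip`, and uses a stubbed lookup table instead of real DNS so it runs anywhere; the hostname and IP address are placeholders.

```python
import ipaddress
import socket


def resolves_to_private_ip(hostname, resolve=socket.gethostbyname):
    """Return True when the hostname resolves to a private IP address.

    The resolver is injectable so the check can be exercised without real DNS.
    """
    return ipaddress.ip_address(resolve(hostname)).is_private


# Stubbed DNS answers (placeholders); inside the VNet you would omit `resolve`.
fake_dns = {"yourdatalakeaccount.blob.core.windows.net": "10.1.0.4"}

host = "yourdatalakeaccount.blob.core.windows.net"
print(resolves_to_private_ip(host, resolve=fake_dns.__getitem__))
```

When run on a cluster node without the `resolve` argument, a `False` result would suggest that requests are still being routed to the public endpoint.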

Best Practices for Scaling Secure Network Access

Scaling secure network access involves more than just the initial setup. It requires ongoing management and adherence to best practices. Consider the following:

- Regular Audits and Monitoring

  - Conduct regular audits to ensure that security configurations are up to date.

  - Implement monitoring solutions to detect and respond to any suspicious activities promptly.

- Role-Based Access Control (RBAC)

  - Use RBAC to define and enforce roles and permissions.

  - Restrict access to the minimum necessary to perform specific tasks, reducing the risk of unauthorized access.

- Encryption

  - Enable encryption for data at rest and in transit.

  - Use Azure Key Vault for managing encryption keys securely.

- Automated Deployments

  - Implement Infrastructure as Code (IaC) principles to automate the deployment of secure network configurations.

  - This ensures consistency and reduces the risk of human error.
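As a lightweight illustration of the automated-deployment idea, the network layout can be described once as data and the CLI commands generated from it. This is only a sketch, not a substitute for a dedicated IaC tool; the `VNETS` structure and the `vnet_create_command` helper are hypothetical, and the values mirror the earlier examples.

```python
import shlex

# Declarative description of the networks (values from the earlier examples).
VNETS = [
    {"name": "DatabricksVNet", "address_prefix": "10.0.0.0/16",
     "subnet_name": "DatabricksSubnet", "subnet_prefix": "10.0.0.0/24"},
    {"name": "StorageVNet", "address_prefix": "10.1.0.0/16",
     "subnet_name": "StorageSubnet", "subnet_prefix": "10.1.0.0/24"},
]


def vnet_create_command(resource_group: str, vnet: dict) -> str:
    """Render one 'az network vnet create' command with shell-safe quoting."""
    args = [
        "az", "network", "vnet", "create",
        "--resource-group", resource_group,
        "--name", vnet["name"],
        "--address-prefixes", vnet["address_prefix"],
        "--subnet-name", vnet["subnet_name"],
        "--subnet-prefixes", vnet["subnet_prefix"],
    ]
    return shlex.join(args)


for vnet in VNETS:
    print(vnet_create_command("MyResourceGroup", vnet))
```

Keeping the description in one place means every environment is built from the same definition, which is the consistency benefit described above.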

Real-World Scenario: Secure Network Access for a Machine Learning Project

To illustrate the practical application of the concepts discussed, let’s consider a real-world scenario involving a machine learning project on Azure Databricks.

Scenario: An organization is using Azure Databricks to develop and deploy machine learning models. The training data is stored in Azure Data Lake Storage, and it's crucial to ensure secure and efficient access to the data.

Create Virtual Networks and Subnets

- Set up virtual networks for Azure Databricks and Azure Data Lake Storage, each with its dedicated subnet.

- In this example, let’s create virtual networks and subnets for both Azure Databricks and Azure Data Lake Storage using Azure Command-Line Interface (Azure CLI) commands. The example assumes that you have already logged in to the Azure CLI and have the necessary permissions.

Example

# Variables
resourceGroup="YourResourceGroup"
databricksVnetName="DatabricksVNet"
databricksSubnetName="DatabricksSubnet"
databricksSubnetPrefix="10.0.0.0/24"
storageVnetName="StorageVNet"
storageSubnetName="StorageSubnet"
storageSubnetPrefix="10.1.0.0/24"

# Create virtual network for Azure Databricks
# (the VNet address space equals the subnet prefix here, so the subnet fills the whole VNet)
az network vnet create \
  --resource-group "$resourceGroup" \
  --name "$databricksVnetName" \
  --address-prefixes "$databricksSubnetPrefix" \
  --subnet-name "$databricksSubnetName" \
  --subnet-prefixes "$databricksSubnetPrefix"

# Create virtual network for Azure Data Lake Storage
az network vnet create \
  --resource-group "$resourceGroup" \
  --name "$storageVnetName" \
  --address-prefixes "$storageSubnetPrefix" \
  --subnet-name "$storageSubnetName" \
  --subnet-prefixes "$storageSubnetPrefix"

Explanation

- Variables

  - Replace “YourResourceGroup” with the actual name of your Azure resource group.

  - Define names and address prefixes for the virtual networks and subnets.

- Create a Virtual Network for Azure Databricks

  - `az network vnet create`: Command to create a virtual network.

  - `--resource-group`: Specifies the Azure resource group.

  - `--name`: Specifies the name of the virtual network.

  - `--address-prefixes`: Specifies the address prefix for the entire virtual network.

  - `--subnet-name`: Specifies the name of the subnet within the virtual network.

  - `--subnet-prefixes`: Specifies the address prefix for the subnet.

- Create a Virtual Network for Azure Data Lake Storage

  - Similar to the Databricks virtual network creation, this command creates a virtual network for Azure Data Lake Storage with its subnet.

Now you have two virtual networks, each with its dedicated subnet. You can proceed to configure network security groups, private links, and other components to enhance the security of the network communication between Azure Databricks and Azure Data Lake Storage.
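A quick sanity check on the chosen prefixes can catch mistakes before any resources are created. The sketch below verifies that a subnet prefix lies inside its virtual network's address space and estimates how many addresses remain usable, given that Azure reserves five addresses in every subnet. The helper names `subnet_fits` and `usable_addresses` are assumptions made for illustration.

```python
import ipaddress

AZURE_RESERVED_PER_SUBNET = 5  # Azure reserves five addresses in each subnet


def subnet_fits(vnet_prefix: str, subnet_prefix: str) -> bool:
    """Return True when the subnet range lies entirely inside the VNet range."""
    vnet = ipaddress.ip_network(vnet_prefix)
    subnet = ipaddress.ip_network(subnet_prefix)
    return subnet.subnet_of(vnet)


def usable_addresses(subnet_prefix: str) -> int:
    """Approximate number of addresses left for resources in the subnet."""
    total = ipaddress.ip_network(subnet_prefix).num_addresses
    return max(total - AZURE_RESERVED_PER_SUBNET, 0)


# Prefixes from the scenario's variables.
print(subnet_fits("10.0.0.0/24", "10.0.0.0/24"))
print(subnet_fits("10.0.0.0/24", "10.1.0.0/24"))
print(usable_addresses("10.0.0.0/24"))
```

The address count matters when scaling, because each cluster node consumes addresses from the subnet it is deployed into.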

Configure NSGs

- Define NSG rules to allow traffic between the Databricks subnet and the Storage subnet.

- In this example, we’ll configure Network Security Groups (NSGs) to control inbound and outbound traffic between the virtual networks and subnets for Azure Databricks and Azure Data Lake Storage. The example assumes that you have already created virtual networks and subnets as per the previous example.

Example

# Variables
resourceGroup="YourResourceGroup"
databricksVnetName="DatabricksVNet"
databricksSubnetName="DatabricksSubnet"
databricksSubnetPrefix="10.0.0.0/24"
databricksNSGName="DatabricksNSG"
storageVnetName="StorageVNet"
storageSubnetName="StorageSubnet"
storageSubnetPrefix="10.1.0.0/24"

# Create NSG for Azure Databricks
az network nsg create --resource-group "$resourceGroup" --name "$databricksNSGName"

# Allow traffic from the Databricks subnet to the Storage subnet
# (address prefixes must be CIDR ranges, not virtual network names)
az network nsg rule create \
  --resource-group "$resourceGroup" \
  --nsg-name "$databricksNSGName" \
  --name AllowStorageAccess \
  --priority 100 \
  --source-address-prefixes "$databricksSubnetPrefix" \
  --destination-address-prefixes "$storageSubnetPrefix" \
  --destination-port-ranges 443 \
  --direction Outbound \
  --access Allow \
  --protocol Tcp

# Associate NSG with Databricks subnet
az network vnet subnet update \
  --resource-group "$resourceGroup" \
  --vnet-name "$databricksVnetName" \
  --name "$databricksSubnetName" \
  --network-security-group "$databricksNSGName"

Explanation

- Variables

  - Replace “YourResourceGroup” with the actual name of your Azure resource group.

  - Use the names of the virtual networks, subnets, and NSG that you created.

- Create NSG for Azure Databricks

  - `az network nsg create`: Command to create a Network Security Group for Azure Databricks.

  - `--resource-group`: Specifies the Azure resource group.

  - `--name`: Specifies the name of the NSG.

- Allow Traffic from Databricks Subnet to Storage Subnet

  - `az network nsg rule create`: Command to create a rule in the NSG allowing outbound traffic from the Databricks subnet to the Storage subnet on port 443 (HTTPS).

  - `--priority`: Specifies the priority of the rule; rules with lower numbers are evaluated first.

  - `--source-address-prefixes`: Specifies the source (Databricks subnet).

  - `--destination-address-prefixes`: Specifies the destination (Storage subnet).

  - `--destination-port-ranges`: Specifies the port to allow traffic to (443 for HTTPS).

  - `--direction`: Specifies the direction of traffic (Outbound).

  - `--access`: Specifies whether to allow or deny the traffic (Allow).

  - `--protocol`: Specifies the protocol for the rule (TCP).

- Associate NSG with Databricks Subnet

  - `az network vnet subnet update`: Command to associate the NSG with the Databricks subnet.

These commands configure an NSG for Azure Databricks, create a rule to allow outbound traffic to the Storage subnet, and associate the NSG with the Databricks subnet. Adjust the parameters based on your specific network configuration and security requirements.
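As the number of rules grows, priority mistakes become more likely. NSG priorities must fall between 100 and 4096 and must be unique per direction within an NSG. The following sketch checks a planned rule list against those two constraints; the `priority_problems` helper and the sample rules other than `AllowStorageAccess` are hypothetical.

```python
from collections import Counter

MIN_PRIORITY, MAX_PRIORITY = 100, 4096


def priority_problems(rules):
    """Check (name, direction, priority) tuples and return a list of issues."""
    problems = []
    # Range check: each priority must fall within the allowed window.
    for name, direction, priority in rules:
        if not MIN_PRIORITY <= priority <= MAX_PRIORITY:
            problems.append(
                f"{name}: priority {priority} is outside {MIN_PRIORITY}-{MAX_PRIORITY}"
            )
    # Uniqueness check: a priority may be used once per direction.
    counts = Counter((direction, priority) for _, direction, priority in rules)
    for (direction, priority), count in sorted(counts.items()):
        if count > 1:
            problems.append(f"{direction} priority {priority} is used by {count} rules")
    return problems


planned = [
    ("AllowStorageAccess", "Outbound", 100),
    ("DenyInternet", "Outbound", 100),   # hypothetical conflicting rule
    ("AllowAdmin", "Inbound", 50),       # hypothetical out-of-range rule
]

for problem in priority_problems(planned):
    print(problem)
```

Leaving gaps between priorities (100, 200, 300 and so on) also makes it easier to insert new rules later without renumbering.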

Implement a Private Link for Data Lake Storage

- Create a private endpoint for Azure Data Lake Storage to establish a secure connection.

- Implementing Private Link involves creating a private endpoint for Azure Data Lake Storage. The following example assumes you have already created virtual networks, subnets, and network security groups (NSGs) as per the previous examples.

Example

# Variables
resourceGroup="YourResourceGroup"
datalakeAccountName="YourDataLakeAccount"
# Data Lake Storage Gen2 is built on a storage account, so look up the storage account's resource ID
datalakeResourceId=$(az storage account show --name "$datalakeAccountName" --resource-group "$resourceGroup" --query id --output tsv)

# Create private endpoint for Azure Data Lake Storage
az network private-endpoint create \
  --resource-group "$resourceGroup" \
  --name StoragePrivateEndpoint \
  --vnet-name StorageVNet \
  --subnet StorageSubnet \
  --private-connection-resource-id "$datalakeResourceId" \
  --connection-name StoragePrivateConnection \
  --group-id blob

Explanation

- Variables

  - Replace “YourResourceGroup” with the actual name of your Azure resource group.

  - Replace “YourDataLakeAccount” with the name of your Azure Data Lake Storage account.

  - Obtain the Data Lake Storage account’s resource ID using the Azure CLI.

- Create Private Endpoint

  - `az network private-endpoint create`: Command to create a private endpoint for Azure Data Lake Storage.

  - `--resource-group`: Specifies the Azure resource group.

  - `--name`: Specifies the name of the private endpoint.

  - `--vnet-name`: Specifies the name of the virtual network.

  - `--subnet`: Specifies the name of the subnet within the virtual network.

  - `--private-connection-resource-id`: Specifies the resource ID of the Azure Data Lake Storage account.

  - `--connection-name`: Specifies the name of the private connection.

  - `--group-id`: Specifies the service group ID for the private endpoint (in this case, “blob” for Azure Data Lake Storage).

This command creates a private endpoint named “StoragePrivateEndpoint” in the “StorageVNet” virtual network and associates it with the “StorageSubnet” subnet. The private endpoint is linked to the Azure Data Lake Storage account using its resource ID. Adjust the parameters based on your specific network configuration and Data Lake Storage account details.

Remember to adapt the commands to your specific scenario, including choosing the appropriate service group ID based on the services you want to access through Private Link. For example, `abfss://` paths use the storage account's `dfs` endpoint, which is a separate sub-resource from `blob` and needs its own private endpoint.
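Each storage sub-resource has its own private DNS zone, which is why the group ID matters. The sketch below maps a group ID to the corresponding zone and hostname for the Azure public cloud; the helper names are assumptions made for illustration, and “yourdatalakeaccount” is a placeholder account name.

```python
# Storage sub-resources that can be targeted by a private endpoint.
STORAGE_SUBRESOURCES = ("blob", "dfs", "file", "queue", "table", "web")


def private_dns_zone(group_id: str) -> str:
    """Return the private DNS zone name for a storage sub-resource (public cloud)."""
    if group_id not in STORAGE_SUBRESOURCES:
        raise ValueError(f"unknown storage sub-resource: {group_id!r}")
    return f"privatelink.{group_id}.core.windows.net"


def private_link_fqdn(account: str, group_id: str) -> str:
    """Return the privatelink hostname that the public name is aliased to."""
    return f"{account}.{private_dns_zone(group_id)}"


for group_id in ("blob", "dfs"):
    print(group_id, "->", private_link_fqdn("yourdatalakeaccount", group_id))
```

A workload that reads through `abfss://` paths resolves the `dfs` hostname, so a private endpoint created only for `blob` would not cover that traffic.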

RBAC for Azure Databricks

- Implement RBAC for Azure Databricks, assigning roles based on the principle of least privilege.

- To set up Role-Based Access Control (RBAC) for Azure Databricks, you can use Azure CLI commands. RBAC allows you to define and enforce roles and permissions, ensuring that users and services have the necessary access rights. The example below assumes that you have already created a Databricks workspace and have the necessary permissions.

Example

# Variables
resourceGroup="YourResourceGroup"
databricksWorkspaceName="YourDatabricksWorkspace"
# Object ID of the signed-in user (recent Azure CLI versions return it as "id"; older versions used "objectId")
databricksObjectId=$(az ad signed-in-user show --query id --output tsv)

# Assign the Contributor role to the user (replace 'Contributor' with the desired role)
az role assignment create \
  --assignee-object-id "$databricksObjectId" \
  --role Contributor \
  --scope "/subscriptions/YourSubscriptionId/resourceGroups/$resourceGroup"

# Retrieve the Databricks workspace ID (uses the Azure CLI databricks extension)
databricksWorkspaceId=$(az databricks workspace show \
  --resource-group "$resourceGroup" \
  --name "$databricksWorkspaceName" \
  --query id --output tsv)

# Assign the Azure Databricks SQL Admin role to the user for the workspace
# (confirm that this role name exists in your tenant first, for example with az role definition list)
az role assignment create \
  --assignee-object-id "$databricksObjectId" \
  --role "Azure Databricks SQL Admin" \
  --scope "$databricksWorkspaceId"

Explanation

- Variables

  - Replace “YourResourceGroup” with the actual name of your Azure resource group.

  - Replace “YourDatabricksWorkspace” with the name of your Azure Databricks workspace.

  - Retrieve the object ID of the user to whom you want to assign roles (in this case, the signed-in user).

- Assign the Contributor Role

  - `az role assignment create`: Command to assign a role (Contributor in this example) to a user.

  - `--assignee-object-id`: Specifies the object ID of the user.

  - `--role`: Specifies the role to assign (e.g., Contributor).

  - `--scope`: Specifies the scope of the assignment, in this case, the resource group.

- Retrieve Databricks Workspace ID

  - `az databricks workspace show`: Command to retrieve information about the Databricks workspace.

  - `--resource-group`: Specifies the Azure resource group.

  - `--name`: Specifies the name of the Databricks workspace.

  - `--query id --output tsv`: Extracts the workspace ID and outputs it as tab-separated values (TSV).

- Assign Azure Databricks SQL Admin Role

  - `az role assignment create`: Command to assign the “Azure Databricks SQL Admin” role to the user for the Databricks workspace.

  - `--assignee-object-id`: Specifies the object ID of the user.

  - `--role`: Specifies the role to assign (“Azure Databricks SQL Admin”).

  - `--scope`: Specifies the scope of the assignment, in this case, the Databricks workspace.

Adjust the commands based on your specific requirements, such as assigning different roles or scopes. Additionally, replace “YourSubscriptionId” with the actual ID of your Azure subscription.
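Scopes are hierarchical resource ID paths, and a role assigned at a broader scope is inherited by everything beneath it. That is why a narrow scope supports least privilege better than a resource-group-wide assignment. The sketch below builds the two scope strings used above and checks whether one scope sits under another; the helper names and the all-zero subscription ID are placeholders for illustration.

```python
def resource_group_scope(subscription_id: str, resource_group: str) -> str:
    """Build the scope string for a resource group."""
    return f"/subscriptions/{subscription_id}/resourceGroups/{resource_group}"


def databricks_workspace_scope(subscription_id: str, resource_group: str,
                               workspace_name: str) -> str:
    """Build the scope string (resource ID) for a Databricks workspace."""
    return (
        f"{resource_group_scope(subscription_id, resource_group)}"
        f"/providers/Microsoft.Databricks/workspaces/{workspace_name}"
    )


def is_within(scope: str, parent: str) -> bool:
    """Return True when `scope` equals `parent` or lies beneath it."""
    scope_l = scope.lower().rstrip("/")
    parent_l = parent.lower().rstrip("/")
    return scope_l == parent_l or scope_l.startswith(parent_l + "/")


sub = "00000000-0000-0000-0000-000000000000"  # placeholder subscription ID
rg_scope = resource_group_scope(sub, "YourResourceGroup")
ws_scope = databricks_workspace_scope(sub, "YourResourceGroup", "YourDatabricksWorkspace")

print(ws_scope)
print(is_within(ws_scope, rg_scope))  # a resource-group role also covers the workspace
```

Reviewing which assignments sit at broad scopes is a practical starting point for the regular audits recommended earlier.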

Securely Accessing Data in Databricks

- Use Azure Databricks secrets to securely store and access credentials for Azure Data Lake Storage.

- Implement secure coding practices in the machine learning code to handle sensitive information.

- Securely accessing data in Azure Databricks involves using secure coding practices, and one common way to access data is by leveraging secrets for authentication. In this example, we’ll use Azure Databricks Secrets to securely store and retrieve credentials for Azure Data Lake Storage. The example assumes you've set up secrets in your Databricks workspace.

Example (Python code snippet in a Databricks notebook)

# Import necessary libraries
from pyspark.sql import SparkSession

# Name the secret scope and the secret key prefix for Azure Data Lake Storage
secret_scope = "YourSecretScope"
secret_key = "YourSecretKey"

# Retrieve the credentials from Databricks Secrets
storage_account_name = dbutils.secrets.get(scope=secret_scope, key=f"{secret_key}_StorageAccountName")
storage_account_key = dbutils.secrets.get(scope=secret_scope, key=f"{secret_key}_StorageAccountKey")

# Configure Spark session with Azure Storage settings
# (in a notebook, getOrCreate() returns the session that already exists)
spark = (
    SparkSession.builder
    .appName("SecureAccessExample")
    .config(f"fs.azure.account.key.{storage_account_name}.dfs.core.windows.net", storage_account_key)
    .getOrCreate()
)

# Example: Read data from Azure Data Lake Storage
data_lake_path = f"abfss://yourcontainer@{storage_account_name}.dfs.core.windows.net/path/to/data"
df = spark.read.csv(data_lake_path, header=True)

# Show the DataFrame
df.show()

Explanation

- Import Libraries

  - Import the necessary libraries, including `SparkSession` from `pyspark.sql`.

- Retrieve Secrets

  - Use `dbutils.secrets.get` to retrieve the credentials for Azure Data Lake Storage from Databricks Secrets.

  - Replace “YourSecretScope” and “YourSecretKey” with the actual secret scope and key you created in Databricks.

- Configure Spark Session

  - Create a Spark session with appropriate configurations.

  - Use the retrieved storage account name and key to configure Spark to access Azure Data Lake Storage.

  - Replace placeholders such as “yourcontainer” and the storage account name with your actual values.

- Example Data Read

  - Define the path to the data in Azure Data Lake Storage.

  - Use the configured Spark session to read data from the specified path into a DataFrame.

- Show DataFrame

  - Display the contents of the DataFrame as an illustration.

Ensure that you follow best practices for securely handling and managing secrets, such as restricting access to secret scopes and keys. Additionally, you can further enhance security by implementing fine-grained access controls and encryption for data at rest and in transit. Adjust the code according to your specific data source, file format, and security requirements.
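Small helpers can reduce the chance of typos in the storage configuration key and path, and of accidentally printing a full credential while debugging. The sketch below is plain Python that runs outside Databricks; the function names are assumptions made for illustration, and the account, container, and key values are placeholders.

```python
def account_key_conf(storage_account: str) -> str:
    """Spark configuration key that holds the account key for the dfs endpoint."""
    return f"fs.azure.account.key.{storage_account}.dfs.core.windows.net"


def abfss_uri(container: str, storage_account: str, path: str) -> str:
    """Build an abfss:// URI for a path inside a container."""
    return f"abfss://{container}@{storage_account}.dfs.core.windows.net/{path.lstrip('/')}"


def mask_secret(value: str, visible: int = 4) -> str:
    """Hide all but the last few characters of a secret for log output."""
    if len(value) <= visible:
        return "*" * len(value)
    return "*" * (len(value) - visible) + value[-visible:]


print(account_key_conf("yourstorageaccount"))
print(abfss_uri("yourcontainer", "yourstorageaccount", "/path/to/data"))
print(mask_secret("abcd1234"))
```

In a notebook, the storage account name returned by `dbutils.secrets.get` would be passed to these helpers instead of the placeholder strings.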

Conclusion

Scaling Azure Databricks secure network access to Azure Data Lake Storage is a critical aspect of building a robust and secure data analytics environment. By following the comprehensive guide and examples provided in this article, organizations can establish a secure foundation for their big data initiatives. Regularly reviewing and updating security configurations, along with adhering to best practices, will ensure ongoing protection against potential security threats. Implementing these measures not only safeguards sensitive data but also contributes to maintaining compliance and building trust in the increasingly interconnected world of data analytics.
