Data Migration Using Azure Pipelines

Introduction

Moving data is vital in any business or IT project, especially when switching to a new platform or upgrading existing systems. Microsoft’s Power Platform has useful tools for making this process easier, and the Power Platform Build Tools are key to automating and simplifying it. This article looks at how these tools are used, with a focus on creating schema files using the Data Migration Utility.

Use Cases

- Automated Data Updates: Power Platform Build Tools automate the update process, reducing the likelihood of errors associated with manual data entry. This ensures that accurate and up-to-date information is available to both internal stakeholders and customers.

- Deployment Processes: Automating the deployment process is essential to ensure consistency across multiple environments, such as development, testing, and production. Power Platform Build Tools facilitate the automation of the export and import of data. This use case highlights the efficiency gains and reliability achieved by incorporating automation.

- Reduced Dependency: Power Platform Build Tools reduce dependency on the Data Migration Utility or other software by automating at least two major operations, i.e., export and import of data.

There are many other use cases as well. For now, let’s discuss a step-by-step process to implement this automation.

Step-by-Step Process to Automate Data Migration

Before we proceed, we should understand the three main operations in data migration in the context of Power Platform. The first is creation of the schema: this step is performed manually using the Data Migration Utility, where we define the tables, the filters, and the solution from which data needs to be exported. The second is export from source: this step can be automated using Power Platform Build Tools, which export the data from the source environment. The third is import to target: in this step, data is imported into the target environment(s).

Step 1. Create Schema File Using Data Migration Utility

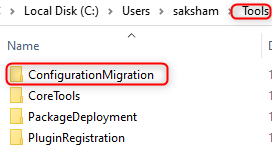

- Download the Configuration Migration tool from this link: Download NuGet Tools.

- After successful installation, the folder structure will look as shown below:

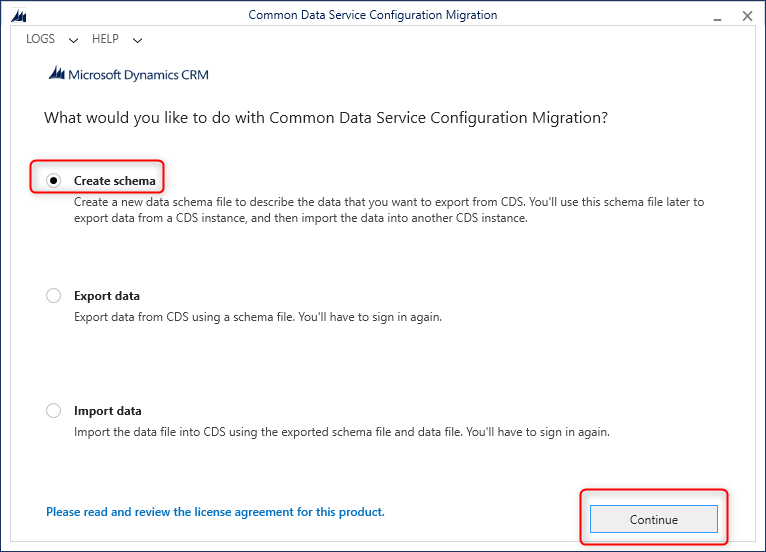

- In the ConfigurationMigration folder, open the DataMigrationUtility.exe file. A new window will open; select Create Schema and click Continue, as shown below.

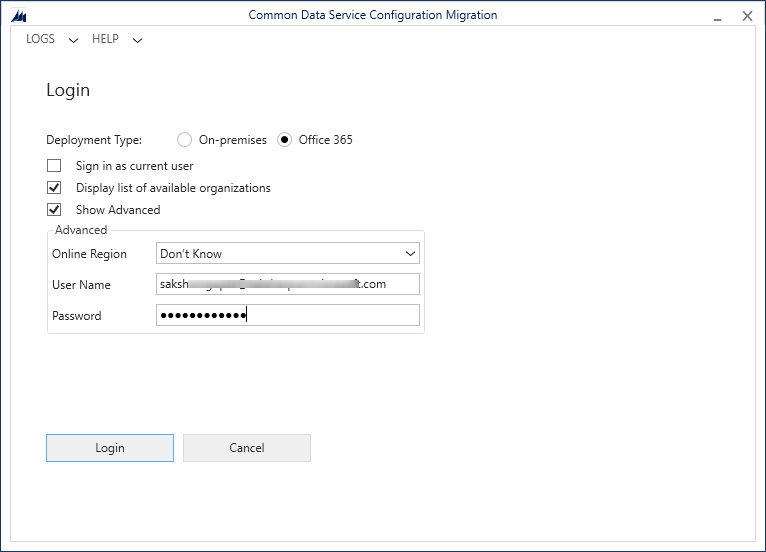

- A login window will open. Select the default settings as shown below and enter your environment’s username and password. Click Login to proceed.

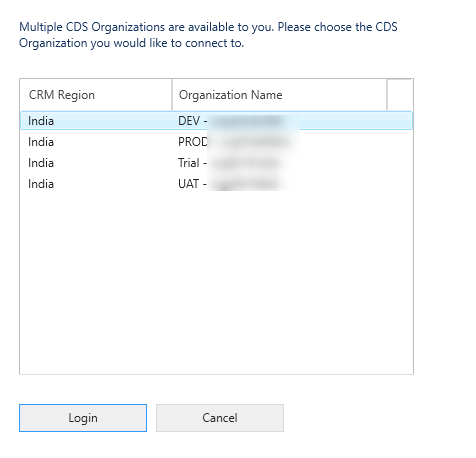

- Now select the source environment from which the schema file needs to be generated, as shown below, and click Login.

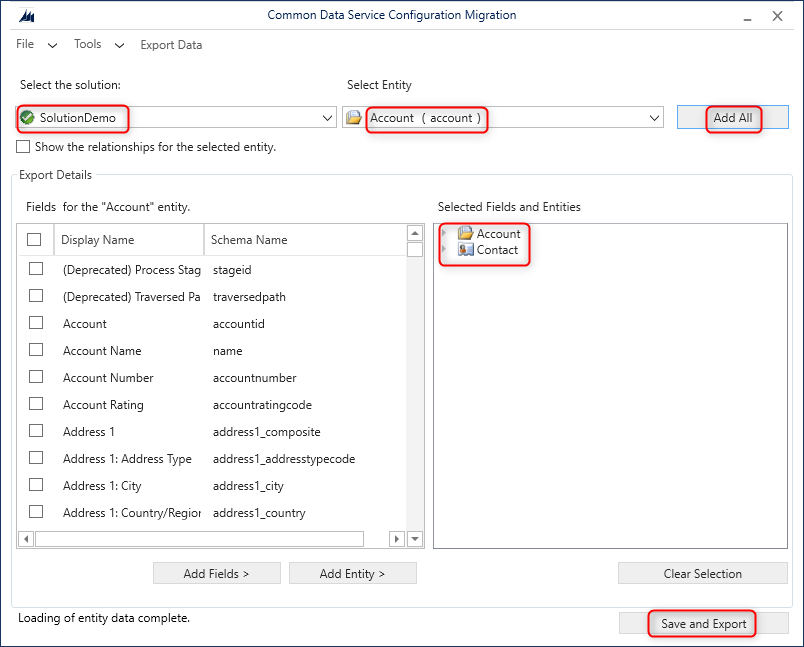

- In the window shown below, first select the solution that contains the entities for which data needs to be exported. Next, add the entities for schema creation. In the screenshot below, I have clicked ‘Add All’, which adds all the entities that are part of the selected solution. Once the entities are added, click Save and Export. A pop-up will appear asking for the file name, with the extension set to xml.

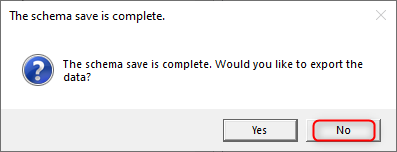

- Once the schema file is saved, a new pop-up will appear as shown below. Select No, as we will perform the export and import from Azure Pipelines.

Step 2. Export data from source environment using schema file generated in Step 1

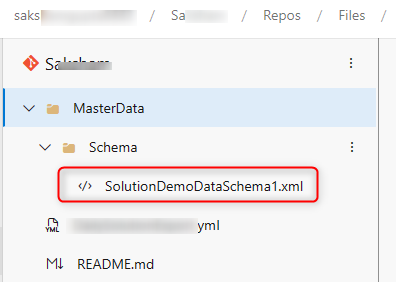

- Check in the schema file to your repository so that it can be referenced in the export data pipeline, as shown below:

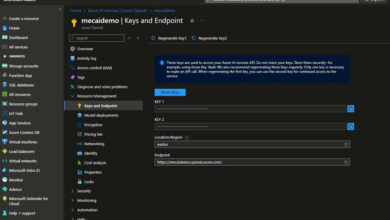

- Install Power Platform Build Tools and create a service connection. Follow this article for the step-by-step process: Working with Service Connections in Azure DevOps (c-sharpcorner.com)

- Go to Azure Pipelines -> Create a new Starter Pipeline and update it as given below:

```yaml
# The trigger section defines the conditions that will trigger the pipeline.
# In this case, 'none' means the pipeline is not triggered automatically but manually.
trigger:
- none

# The pool section specifies the execution environment for the pipeline.
# In this case, it uses a Windows VM image.
pool:
  vmImage: windows-latest

jobs:
- job: Data_Export_DEV
  displayName: Data_Export_DEV
  pool:
    vmImage: windows-latest # Overrides the pool defined at the top level for this specific job
  steps:
  # Power Platform Tool Installer will install dependencies related to Power Platform tasks.
  - task: PowerPlatformToolInstaller@2
    inputs:
      DefaultVersion: true
    displayName: 'Power Platform Tool Installer '

  # Power Platform Export Data will export data from the Dev environment based on the contents of the schema file.
  - task: PowerPlatformExportData@2
    inputs:
      authenticationType: 'PowerPlatformSPN'
      PowerPlatformSPN: 'DEV'
      Environment: '$(BuildTools.EnvironmentUrl)'
      SchemaFile: 'MasterData/Schema/SolutionDemoDataSchema1.xml'
      DataFile: '$(Build.SourcesDirectory)/MasterData/DataBackup/data.zip'
      Overwrite: true

  # Command line script will check in the data file to our Azure Repos.
  - task: CmdLine@2
    displayName: Command Line Script
    inputs:
      script: |
        git config user.email "Your Email Id"
        git config user.name "Saksham Gupta"
        git checkout -b main
        git pull
        git add --all
        git commit -m "Data Backup"
        echo push code to new repo
        git -c http.extraheader="AUTHORIZATION: bearer $(System.AccessToken)" push origin main -f
    env:
      MY_ACCESS_TOKEN: $(System.AccessToken) # Set an environment variable with the value of System.AccessToken
```

- Pre-requisite before triggering the pipeline: the Build Service account should have the Contribute permission set to Allow for the main branch.

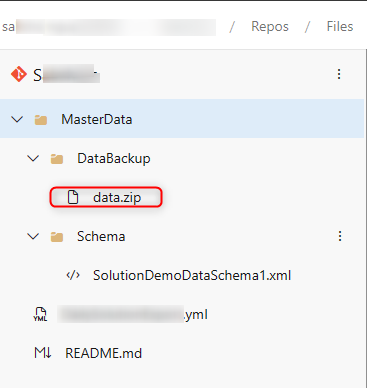

- Below is sample output of the folder structure after a successful pipeline run:

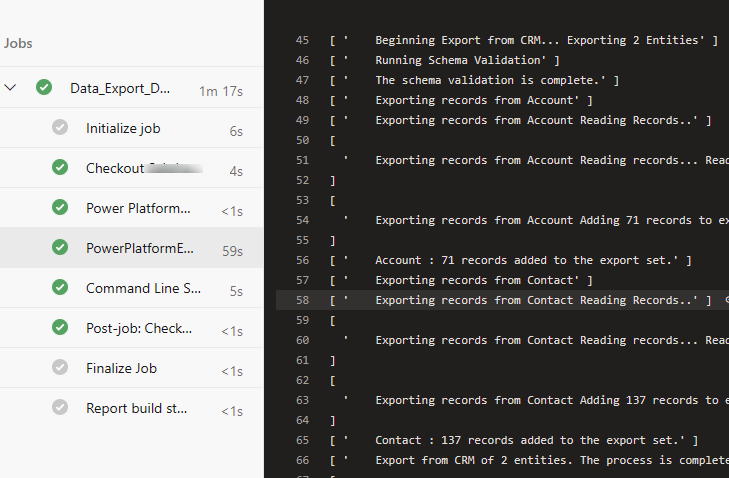

- Below is a successful pipeline run for the Data Export pipeline:

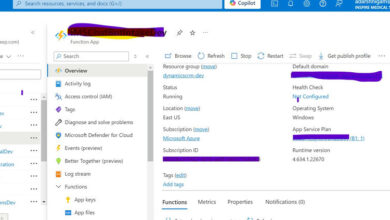
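The export pipeline above authenticates its push by passing the access token in an explicit authorization header. A possible alternative, shown here only as a sketch, is to let the checkout step keep the job's credentials in the local git configuration so that later script steps can push without the header. The `persistCredentials` option belongs to the Azure Pipelines `checkout` step. The user name, the display name, and pushing `HEAD` to `main` are assumptions made for illustration. The install and export tasks from the pipeline above would sit between the two steps, and the Build Service account still needs the Contribute permission described earlier.

```yaml
steps:
# Keep the job's OAuth token in the local git config after checkout,
# so that the script step below can push without an explicit header.
- checkout: self
  persistCredentials: true

# (The PowerPlatformToolInstaller@2 and PowerPlatformExportData@2 tasks go here.)

- task: CmdLine@2
  displayName: Commit data backup   # hypothetical display name
  inputs:
    script: |
      git config user.email "Your Email Id"
      git config user.name "Your Name"
      git add MasterData/DataBackup/data.zip
      git commit -m "Data Backup"
      rem The agent checks out a detached HEAD, so push that commit to main explicitly.
      git push origin HEAD:main
```

Unlike the forced push in the original script, this variant is rejected if main has moved ahead of the commit the run started from. Depending on the team, that is either a useful safety net or a reason to keep the original approach.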

Step 3. Now let’s proceed with import of data in target environment

- Pre-requisite: The target environment should have all dependencies installed before data is imported.

- Go to Azure Pipelines -> Create a new Starter Pipeline and update it as given below:

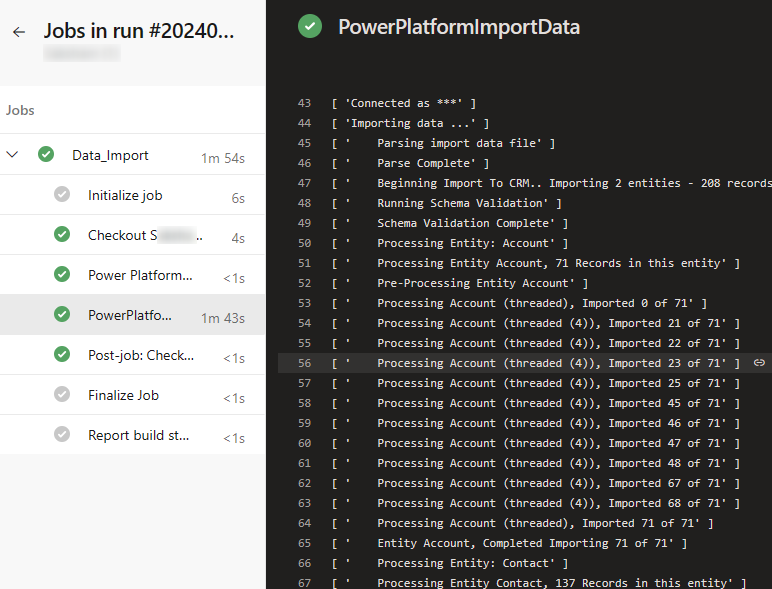

```yaml
# Define the trigger for the pipeline. In this case, the pipeline has no explicit trigger.
trigger:
- none

# Define the pool configuration for the pipeline, specifying the virtual machine image to be used.
pool:
  vmImage: windows-latest

jobs:
- job: Data_Import
  displayName: Data_Import
  pool:
    vmImage: windows-latest
  steps:
  # This task installs the Power Platform tools.
  - task: PowerPlatformToolInstaller@2
    inputs:
      DefaultVersion: true
    displayName: 'Power Platform Tool Installer '

  # This task imports data into the UAT environment using the Power Platform Import Data task.
  - task: PowerPlatformImportData@2
    inputs:
      authenticationType: 'PowerPlatformSPN'
      PowerPlatformSPN: 'UAT'
      Environment: '$(BuildTools.EnvironmentUrl)'
      DataFile: 'MasterData/DataBackup/data.zip' # File path is picked from the main branch in Azure Repos.
```

- Below is a successful pipeline run for data import into the UAT environment:

Conclusion

In this article, we discussed various use cases of automated data migration in Power Platform using Azure Pipelines. This process will save a lot of manual effort as well as explicit monitoring. The step-by-step process discussed will help you get started without advanced knowledge of YAML concepts. We can extend this process further by incorporating these tasks into our ALM pipelines. Please reach out to me in case of any concerns.
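As one possible reading of that extension, the export and import could run as two jobs of a single pipeline, with the data file handed over as a pipeline artifact instead of being committed to the repository. The sketch below reuses the task names, service connection names, and schema path from the pipelines above. The artifact name `MasterData`, the job name `Data_Import_UAT`, and the staging paths are assumptions chosen for illustration. This has not been run against a real environment.

```yaml
trigger:
- none

pool:
  vmImage: windows-latest

jobs:
- job: Data_Export_DEV
  steps:
  - task: PowerPlatformToolInstaller@2
    inputs:
      DefaultVersion: true

  - task: PowerPlatformExportData@2
    inputs:
      authenticationType: 'PowerPlatformSPN'
      PowerPlatformSPN: 'DEV'
      Environment: '$(BuildTools.EnvironmentUrl)'
      SchemaFile: 'MasterData/Schema/SolutionDemoDataSchema1.xml'
      DataFile: '$(Build.ArtifactStagingDirectory)/data.zip'
      Overwrite: true

  # Hand the exported file to the next job as a pipeline artifact.
  - publish: '$(Build.ArtifactStagingDirectory)/data.zip'
    artifact: MasterData   # hypothetical artifact name

- job: Data_Import_UAT     # hypothetical job name
  dependsOn: Data_Export_DEV
  steps:
  # The import job needs only the artifact, not the repository contents.
  - checkout: none

  # Downloads to $(Pipeline.Workspace)/MasterData
  - download: current
    artifact: MasterData

  - task: PowerPlatformToolInstaller@2
    inputs:
      DefaultVersion: true

  - task: PowerPlatformImportData@2
    inputs:
      authenticationType: 'PowerPlatformSPN'
      PowerPlatformSPN: 'UAT'
      Environment: '$(BuildTools.EnvironmentUrl)'
      DataFile: '$(Pipeline.Workspace)/MasterData/data.zip'
```

The trade-off is that no backup of the data file lands in the repository. Teams that rely on that history may prefer to keep the separate export pipeline with its commit step.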
