Integrate Azure AD OAuth2 SSO Authentication and RBAC for Kafka-UI

Why does Kafka require a UI?

Kafka, a distributed streaming platform, has gained immense popularity thanks to its scalability, reliability, and fault tolerance. However, managing and monitoring Kafka clusters can be complex, particularly for large-scale deployments. To address this problem, a number of Kafka UI tools have emerged, simplifying cluster management and offering valuable insights into Kafka's performance.

Understanding Kafka UI Tools

Kafka UI tools are web-based applications that provide a graphical interface for interacting with Kafka clusters. These tools typically offer features such as:

- Cluster Management: Creating, deleting, and configuring Kafka clusters.

- Topic Management: Creating, deleting, and listing topics.

- Consumer Group Management: Viewing consumer group information, offsets, and lag.

- Message Inspection: Viewing and searching for messages within topics.

- Performance Metrics: Monitoring key performance indicators (KPIs) like throughput, latency, and consumer lag.

- Security Management: Configuring authentication and authorization for Kafka access.

Choosing the Right Kafka UI Tool

The best Kafka UI tool for your needs depends on factors such as:

- Scale and complexity of your Kafka cluster: Larger clusters may require the more advanced features offered by tools like Confluent Control Center.

- Required features: Consider the specific features you need, such as Schema Registry, KSQL, or Kafka Connect.

- Cost: Some tools, like Confluent Control Center, may have licensing costs associated with them.

- Ease of use: If you're new to Kafka, a user-friendly interface like Kafka Manager may be a good choice.

The Importance of Authentication and Authorization in Kafka UI Tools

Apache Kafka has become a cornerstone for building real-time event streaming systems, enabling organizations to handle massive amounts of data efficiently. However, Kafka's inherent complexity and its critical role in managing sensitive data pipelines make security a top priority. When you use a Kafka UI tool (such as AKHQ, Kafka UI by Provectus, or Conduktor) to simplify the management and monitoring of Kafka clusters, authentication and authorization mechanisms become essential for protecting the Kafka infrastructure from unauthorized access and operations.

Kafka UI tools are designed to simplify cluster management, but without proper authentication and authorization they can pose significant security risks.

Why is Authentication Important?

- Preventing Unauthorized Access: Authentication ensures that only authorized users can access the Kafka UI tool. This prevents unauthorized individuals from gaining control over the cluster and potentially compromising sensitive data.

- Identifying Users: Authentication allows the system to track who is accessing the cluster, making it easier to audit and troubleshoot issues.

- Enforcing Policies: Authentication can be used to enforce access policies, such as restricting access to certain features or limiting access to specific times.

Why is Authorization Important?

- Role-Based Access Control (RBAC): Authorization enables the implementation of RBAC, granting different users or groups varying levels of access to Kafka resources. This ensures that users have only the permissions necessary to perform their tasks.

- Preventing Data Breaches: By limiting access to sensitive data, authorization helps prevent data breaches and unauthorized data manipulation.

- Enhancing Security: Authorization can be combined with other security measures, such as encryption and firewalls, to create a more robust security posture.

Common Authentication and Authorization Methods

- Username and Password: A simple but effective method that requires users to provide a valid username and password to gain access.

- OAuth: A widely used standard for authorization that allows users to grant access to applications without sharing their credentials.

- API Keys: A lightweight authentication mechanism that involves generating unique API keys for each user or application.

- Certificates: A more secure method that uses digital certificates to verify the identity of users and applications.

Best Practices for Authentication and Authorization in Kafka UI Tools

- Use Strong Authentication Methods: Avoid weak authentication methods like default passwords or easily guessable usernames.

- Implement RBAC: Grant users only the permissions they need to perform their tasks.

- Regularly Review and Update Access Controls: As roles and responsibilities change, make sure that access controls are kept up to date.

- Enable Auditing: Monitor user activity to identify potential security threats.

- Educate Users: Train users on best practices for security and password management.

Provectus: A Versatile Kafka Management Tool

Provectus is a powerful and intuitive web-based interface designed to simplify the management and monitoring of Apache Kafka clusters. It offers a comprehensive set of features, making it a valuable tool for both beginners and experienced Kafka users.

Key Features of Provectus

- Cluster Management
  - Create, delete, and configure Kafka clusters.
  - Manage brokers, topics, and consumer groups.
  - Monitor cluster health and performance metrics.

- Topic Management
  - Create, delete, and list topics.
  - View topic details, including partitions, replicas, and retention settings.
  - Produce and consume messages directly from the UI.

- Consumer Group Management
  - View consumer group information, offsets, and lag.
  - Rebalance consumer groups.
  - Monitor consumer performance and identify bottlenecks.

- Message Inspection
  - View and search for messages within topics.
  - Filter messages based on key, value, or timestamp.
  - Inspect message headers and payloads.

- Performance Metrics
  - Monitor key performance indicators (KPIs) like throughput, latency, and consumer lag.
  - Visualize metrics using charts and graphs.
  - Identify performance issues and optimize cluster configuration.

- Security Management
  - Configure authentication and authorization for Kafka access.
  - Implement role-based access control (RBAC).
  - Protect sensitive data with encryption.

Benefits of Using Provectus

- Simplified Management: Provectus provides a user-friendly interface that simplifies complex Kafka management tasks.

- Enhanced Visibility: Gain deeper insights into your Kafka cluster's health and performance.

- Improved Efficiency: Streamline workflows and reduce manual tasks.

- Centralized Control: Manage multiple Kafka clusters from a single console.

- Cost-Effective: Provectus is a free and open-source tool.

Installation and Configuration

Provectus can be installed on various operating systems, including Linux, macOS, and Windows. The installation process typically involves cloning the repository from GitHub, installing dependencies, and running the application. Configuration options allow you to customize Provectus to your specific needs.

Configuring Provectus Kafka-UI using Docker-Compose without Authentication.

    services:
      kafka-ui:
        container_name: provectus_kafka_ui
        image: provectuslabs/kafka-ui:latest
        ports:
          # Host port 9091 matches the URL used in the steps below.
          - 9091:8080
        environment:
          DYNAMIC_CONFIG_ENABLED: 'true'

Create a docker-compose.yaml file and paste the above code snippet into it. The attached 'docker-compose-KafkaUIOnly.yaml' file is provided for reference.

Open PowerShell, navigate to the file location, and execute 'docker-compose up'.

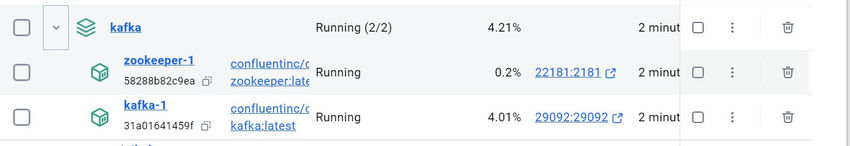

A Docker container will be running in Docker Desktop.

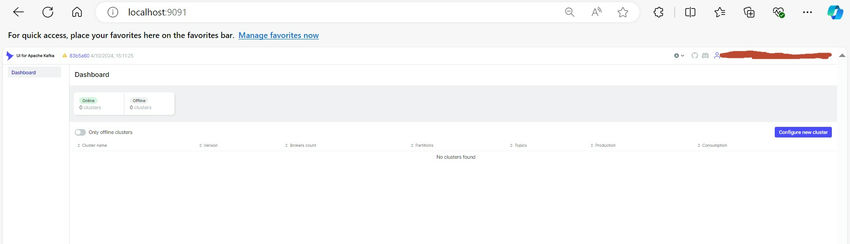

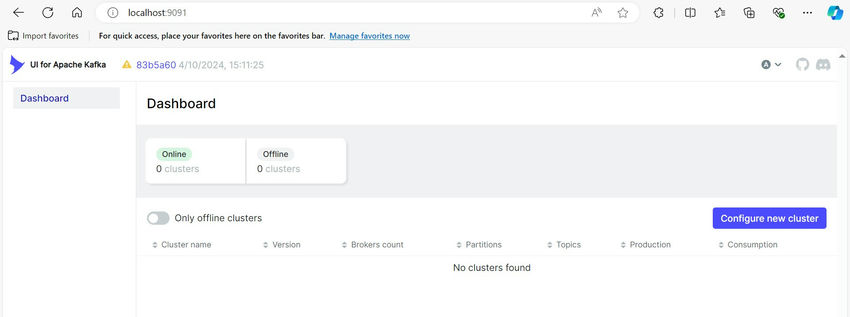

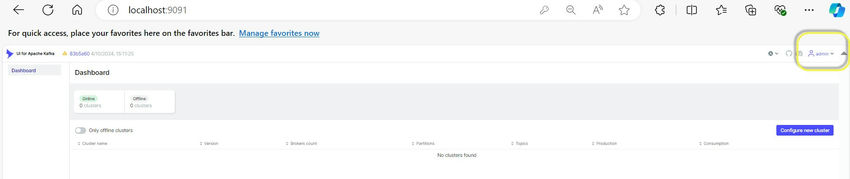

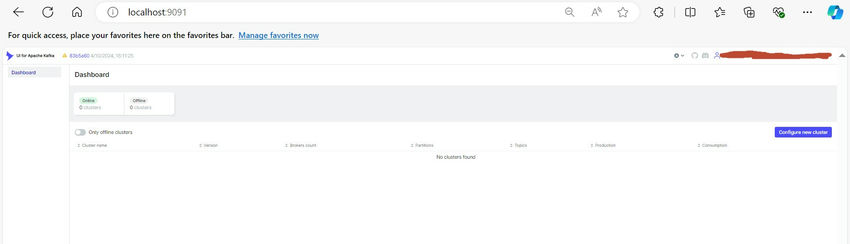

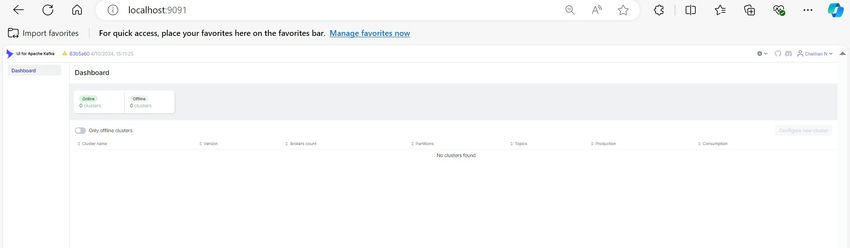

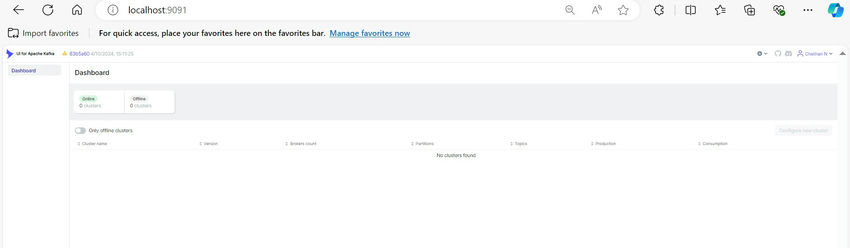

Browse to 'http://localhost:9091/' and Kafka-UI will be displayed.

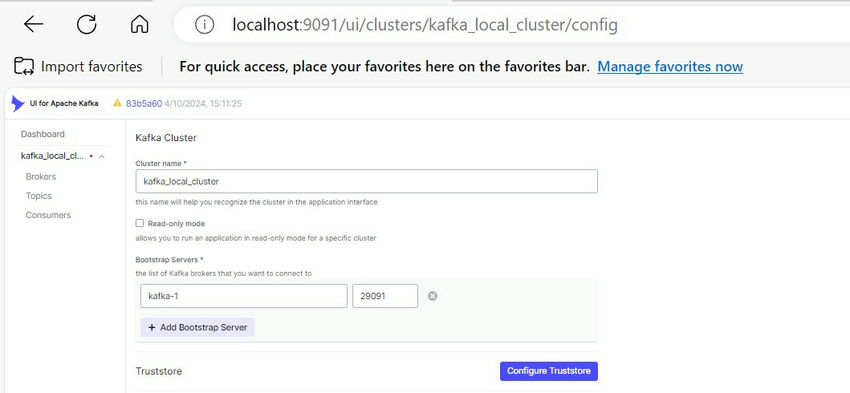

To configure a new Kafka cluster, click Configure new Cluster and add the cluster name and the bootstrap server with its port.

In this example, a Kafka instance is running on my local machine.

Add the Kafka cluster details and click Submit.

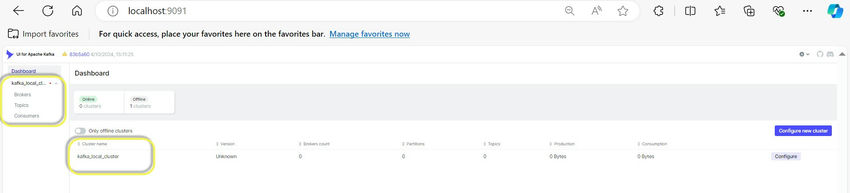

Once the cluster is added successfully, we can see its details in the Dashboard and navigate to the different options to view or modify Brokers, Topics, and Consumers.
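As an alternative to adding the cluster through the wizard, the connection can be declared up front in the Compose file. The fragment below is a minimal sketch under stated assumptions: the cluster name `local` is a hypothetical label, and `host.docker.internal:9092` assumes Docker Desktop with a Kafka broker listening on port 9092 of the host. The broker's advertised listeners also need to be reachable from inside the container.

```yaml
services:
  kafka-ui:
    container_name: provectus_kafka_ui
    image: provectuslabs/kafka-ui:latest
    ports:
      - 9091:8080
    environment:
      DYNAMIC_CONFIG_ENABLED: 'true'
      # Assumed values: replace the name and bootstrap address with your own.
      KAFKA_CLUSTERS_0_NAME: local
      KAFKA_CLUSTERS_0_BOOTSTRAPSERVERS: host.docker.internal:9092
```

With this in place, the cluster should appear in the Dashboard on startup without any manual step.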

Configuring Provectus Kafka-UI using Docker-Compose with Basic Authentication.

    services:
      kafka-ui:
        container_name: provectus_kafka_ui
        image: provectuslabs/kafka-ui:latest
        ports:
          - 9091:8080
        environment:
          DYNAMIC_CONFIG_ENABLED: 'true'
          # LOGIN_FORM enables a username/password login page.
          AUTH_TYPE: "LOGIN_FORM"
          SPRING_SECURITY_USER_NAME: admin
          SPRING_SECURITY_USER_PASSWORD: pass

The attached 'docker-compose-BasicAuth.yaml' file is provided for reference.

Follow the same steps as above to bring the docker-compose file up in Docker Desktop. In the docker-compose file, the environment variable AUTH_TYPE is set to LOGIN_FORM, which enables Basic authentication, and the variables SPRING_SECURITY_USER_NAME and SPRING_SECURITY_USER_PASSWORD set the username and password.

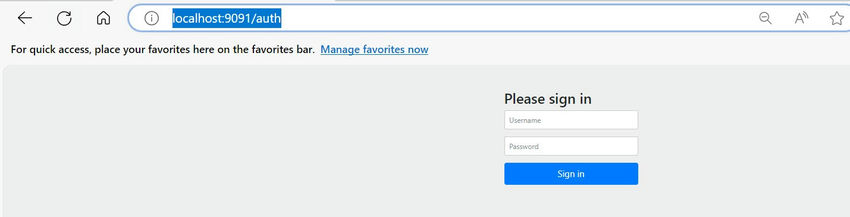

When we browse to 'http://localhost:9091/', we get a login page for entering user credentials.

Because this is Basic Authentication, the username and password are configured only in the docker-compose file. Enter admin as the username and pass as the password, and the user will be logged in to Provectus Kafka UI.

Configuring Provectus Kafka-UI using Docker-Compose with Azure AD/OAuth2/SSO Authentication.

Configuring Single Sign-On (SSO) with Azure Active Directory (Azure AD) for Kafka UI by Provectus involves the following steps.

- Registering an Application in Azure AD

- Configuring Kafka UI to Use Azure AD for Authentication

Step 1. Register an Application in Azure Active Directory.

First, you need to register your Kafka UI application with Azure AD to obtain the credentials required for OAuth 2.0 authentication.

1.1. Sign in to the Azure Portal.

- Navigate to the Azure Portal and sign in with your administrator account.

1.2. Register a New Application.

- In the Azure Portal, select Azure Active Directory from the left-hand navigation pane.

- On the Azure AD page, select App registrations from the menu.

- Click New Registration.

- Configure the application registration.
  - Name: Enter a name for your application (e.g., Kafka UI SSO).
  - Supported account types: Choose Accounts in this organizational directory only if you want only users in your organization to access Kafka UI.
  - Redirect URI: Set the redirect URI to http://localhost:9091/login/oauth2/code/ (you'll adjust this later if needed).

- Click Register to create the application.

1.3. Configure Authentication Settings

- After registration, you will be redirected to the application's Overview page.

- Select Authentication from the left-hand menu.

- Under Platform Configurations, click Add a Platform and select Web.

- Configure the Web platform.
  - Redirect URIs: Add the redirect URI where Kafka UI will receive authentication responses. For example: http://localhost:9091/login/oauth2/code/. Make sure that this URI matches the SERVER_SERVLET_CONTEXT_PATH (if set) and the port mapping in your Docker Compose file. Azure AD requires an exact match with the redirect URI that Kafka UI sends; with the {baseUrl}/login/oauth2/code/{registrationId} template and the azure registration used in Step 2, that is http://localhost:9091/login/oauth2/code/azure.
  - Logout URL (optional): You can specify a logout URL if needed.
  - Implicit grant and hybrid flows: Check Access tokens and ID tokens to enable them.

- Click Configure to save the settings.

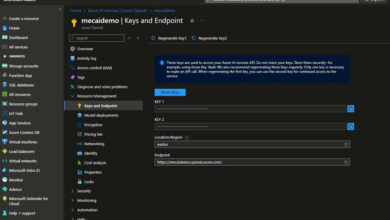

1.4. Create a Client Secret.

- On the application page, select Certificates & secrets.

- Under Client secrets, click New client secret.

- Add a client secret.
  - Description: Enter a description (e.g., Kafka UI Client Secret).
  - Expires: Select an appropriate expiration period.

- Click Add.

- Copy the client secret: After creating the secret, copy the Value immediately. You will need this value later, and it will not be shown again.

1.5. Gather Necessary Information

Make a note of the following information.

- Application (client) ID: Found on the application's Overview page.

- Directory (tenant) ID: Also found on the Overview page.

- Client Secret: The value you copied in the previous step.

Step 2. Configure Kafka UI to Use Azure AD for Authentication.

Now that you have the necessary credentials, you can configure Kafka UI to use Azure AD for authentication.

2.1. Update the Docker Compose File.

Modify your docker-compose.yml file to include the OAuth 2.0 configuration.

    version: '3'
    services:
      kafka-ui:
        container_name: kafka-ui-provectus
        image: 'provectuslabs/kafka-ui:latest'
        ports:
          - "9091:8080"
        environment:
          DYNAMIC_CONFIG_ENABLED: 'true'
          AUTH_TYPE: "OAUTH2"
          # Credentials from the Azure AD app registration (Step 1.5).
          AUTH_OAUTH2_CLIENT_AZURE_CLIENTID: "your-client-id"
          AUTH_OAUTH2_CLIENT_AZURE_CLIENTSECRET: "your-client-secret"
          AUTH_OAUTH2_CLIENT_AZURE_SCOPE: "openid"
          AUTH_OAUTH2_CLIENT_AZURE_CLIENTNAME: "azure"
          AUTH_OAUTH2_CLIENT_AZURE_PROVIDER: "azure"
          AUTH_OAUTH2_CLIENT_REGISTRATION_AZURE_REDIRECT_URI: "{baseUrl}/login/oauth2/code/{registrationId}"
          # Tenant-specific Azure AD v2.0 endpoints.
          AUTH_OAUTH2_CLIENT_AZURE_ISSUERURI: "https://login.microsoftonline.com/your-tenant-id/v2.0"
          AUTH_OAUTH2_CLIENT_AZURE_JWKSETURI: "https://login.microsoftonline.com/your-tenant-id/discovery/v2.0/keys"

The attached 'docker-compose-WithAzureAD Authonly.yaml' file is provided for reference.

2.2. Replace Placeholders with Your Values.

- your-client-id: Replace with the Application (client) ID from Azure AD.

- your-client-secret: Replace with the Client Secret you created.

- your-tenant-id: Replace with your Directory (tenant) ID.
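To keep the client secret out of the Compose file itself, the values can be supplied through Compose variable substitution. The fragment below is a sketch, not the only way to do this: the variable names `AZURE_CLIENT_ID`, `AZURE_CLIENT_SECRET`, and `AZURE_TENANT_ID` are hypothetical and would be defined in the shell environment or in a `.env` file next to docker-compose.yml. Only the keys that change are shown; the remaining settings stay as in the file above.

```yaml
services:
  kafka-ui:
    image: 'provectuslabs/kafka-ui:latest'
    ports:
      - "9091:8080"
    environment:
      AUTH_TYPE: "OAUTH2"
      # Compose replaces ${...} with values from the environment or a .env file.
      AUTH_OAUTH2_CLIENT_AZURE_CLIENTID: "${AZURE_CLIENT_ID}"
      AUTH_OAUTH2_CLIENT_AZURE_CLIENTSECRET: "${AZURE_CLIENT_SECRET}"
      AUTH_OAUTH2_CLIENT_AZURE_ISSUERURI: "https://login.microsoftonline.com/${AZURE_TENANT_ID}/v2.0"
      AUTH_OAUTH2_CLIENT_AZURE_JWKSETURI: "https://login.microsoftonline.com/${AZURE_TENANT_ID}/discovery/v2.0/keys"
```

Keeping the `.env` file out of version control prevents the secret from being committed with the rest of the project.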

Step 3. Verify the Configuration.

3.1. Access Kafka UI.

Open your browser and navigate to http://localhost:9091 (or the appropriate URL based on your configuration).

3.2. Sign In with Azure AD.

- You should be redirected to the Azure AD login page.

- Enter your Azure AD credentials to sign in.

- Upon successful authentication, you will be redirected back to Kafka UI and have access to the interface.

Configuring Provectus Kafka-UI using Docker-Compose with Azure AD/OAuth2/SSO Authentication and RBAC for Authorization.

To configure Azure AD, follow the same steps described above, then continue with the RBAC configuration from here.

Restricting Access to Specific Users or Groups

- By default, any user in your Azure AD tenant can authenticate.

- You can restrict access to specific users or groups.

- Use Azure AD group claims to include group information in the ID token.

- Configure Role-Based Access Control (RBAC) in Kafka UI if supported.

- Modify your application to check for specific roles or group membership.

In this approach, the authentication and authorization details live in a config.yaml file. To incorporate a config.yaml file into your Docker Compose setup for Kafka-UI, follow these steps.

You can organize your project folder like this:

    project-directory/
    │
    ├── docker-compose.yml
    ├── config/
    │   └── config.yaml

This structure includes:

- docker-compose.yml: The Docker Compose file for Kafka-UI.

- config/config.yaml: The configuration file for Kafka-UI.

Content of config.yaml

    auth:
      type: OAUTH2
      oauth2:
        client:
          azure:
            clientId: "clientId"
            clientSecret: "ClientSecret"
            scope: openid
            client-name: azure
            provider: azure
            authorization-grant-type: authorization_code
            issuer-uri: "https://login.microsoftonline.com/{TenantID}/v2.0"
            jwk-set-uri: "https://login.microsoftonline.com/{TenantID}/discovery/v2.0/keys"
            user-name-attribute: name # email
            custom-params:
              type: oauth
              # Token claim that carries the user's roles.
              roles-field: roles
    rbac:
      roles:
        - name: "admins"
          clusters:
            - cluster 1
          subjects:
            # Users whose roles claim contains "UIAdmin" get this role.
            - provider: oauth
              type: role
              value: "UIAdmin"
          permissions:
            - resource: applicationconfig
              actions: [ VIEW, EDIT ]
            - resource: clusterconfig
              actions: [ VIEW, EDIT ]
            - resource: topic
              value: ".*"
              actions:
                - VIEW
                - CREATE
                - EDIT
                - DELETE
                - MESSAGES_READ
                - MESSAGES_PRODUCE
                - MESSAGES_DELETE
            - resource: consumer
              value: ".*"
              actions: [ VIEW, DELETE, RESET_OFFSETS ]
            - resource: schema
              value: ".*"
              actions: [ VIEW, CREATE, DELETE, EDIT, MODIFY_GLOBAL_COMPATIBILITY ]
            - resource: connect
              value: ".*"
              actions: [ VIEW, EDIT, CREATE, RESTART ]
            - resource: ksql
              actions: [ EXECUTE ]
            - resource: acl
              actions: [ VIEW, EDIT ]
        # As written, this role withholds only applicationconfig access and
        # clusterconfig EDIT; all other actions match the admins role.
        - name: "readonly"
          clusters:
            - cluster 1
          subjects:
            - provider: oauth
              type: role
              value: "Viewer"
          permissions:
            - resource: clusterconfig
              actions: [ VIEW ]
            - resource: topic
              value: ".*"
              actions:
                - VIEW
                - CREATE
                - EDIT
                - DELETE
                - MESSAGES_READ
                - MESSAGES_PRODUCE
                - MESSAGES_DELETE
            - resource: consumer
              value: ".*"
              actions: [ VIEW, DELETE, RESET_OFFSETS ]
            - resource: schema
              value: ".*"
              actions: [ VIEW, CREATE, DELETE, EDIT, MODIFY_GLOBAL_COMPATIBILITY ]
            - resource: connect
              value: ".*"
              actions: [ VIEW, EDIT, CREATE, RESTART ]
            - resource: ksql
              actions: [ EXECUTE ]
            - resource: acl
              actions: [ VIEW, EDIT ]
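If the "readonly" role is meant to be strictly read-only, its permissions can be narrowed to the viewing actions. The fragment below is one possible sketch that reuses only resource and action names already present in the configuration above; which actions a viewer should hold is an assumption to adjust for your own policy. It would replace the "readonly" entry, with the "admins" entry left unchanged.

```yaml
rbac:
  roles:
    - name: "readonly"
      clusters:
        - cluster 1
      subjects:
        - provider: oauth
          type: role
          value: "Viewer"
      permissions:
        - resource: clusterconfig
          actions: [ VIEW ]
        - resource: topic
          value: ".*"
          # View topics and read messages, but no create/edit/delete/produce.
          actions: [ VIEW, MESSAGES_READ ]
        - resource: consumer
          value: ".*"
          actions: [ VIEW ]
        - resource: schema
          value: ".*"
          actions: [ VIEW ]
        - resource: connect
          value: ".*"
          actions: [ VIEW ]
        - resource: acl
          actions: [ VIEW ]
```

The `ksql` resource is omitted here because its only action in the configuration above is EXECUTE.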

Modify docker-compose.yml to Mount config.yaml.

In the docker-compose.yml file, mount the config.yaml file as a volume into the Kafka-UI container. The volumes entry mounts the local config/config.yaml file into the container at /config.yaml.

    version: '3'
    services:
      kafka-ui:
        container_name: kafka-ui-provectus
        image: 'provectuslabs/kafka-ui:latest'
        ports:
          - "9091:8080"
        environment:
          DYNAMIC_CONFIG_ENABLED: 'true'
          LOGGING_LEVEL_ROOT: DEBUG
          LOGGING_LEVEL_REACTOR: DEBUG
          # Load the mounted file in addition to the default configuration.
          SPRING_CONFIG_ADDITIONAL-LOCATION: /config.yaml
        volumes:
          - ./config/config.yaml:/config.yaml

The attached 'docker-compose-withAzureAD_RBAC.yaml' file and the config.yaml file inside the config folder are provided for reference.

To run docker-compose.yml together with config.yaml, navigate to your project directory (where docker-compose.yml is located) and run Docker Compose.

Configure Azure AD authentication and Role-Based Access Control (RBAC) for Provectus Kafka-UI using Helm.

Why Use Helm over Docker Compose for Kafka-UI?

If your infrastructure is based on Kubernetes, Helm is the natural choice. It is designed to work with Kubernetes, making it easier to manage complex deployments and services like Kafka, especially in cloud environments.

- Helm allows better scalability and reliability thanks to Kubernetes' native features for clustering, scaling, and self-healing. This is crucial for production environments and large Kafka clusters.

- Helm charts make it easier to manage environment-specific configurations and secrets, which helps when working with complex configurations like Kafka, SSL, RBAC, and OAuth2.

- Helm's built-in versioning and rollback features make it better suited to managing Kafka-UI in production, where you may need to deploy updates frequently or revert changes.

Attached is a values file named values-AzureAD_rbac_withHELM.yaml, which contains the Azure AD and RBAC configuration for Helm.

To configure Azure AD authentication and Role-Based Access Control (RBAC) for Provectus Kafka-UI using Helm, follow these steps.

Prerequisites

- Azure AD application set up with OAuth2 credentials (client-id, client-secret, tenant-id, etc.).

- Kafka-UI Helm repository added to your local setup.

- Reference: Provectus_HelmChart

In PowerShell, execute the command below to add the Helm repository.

    helm repo add kafka-ui https://provectus.github.io/kafka-ui-charts

Prepare the Helm values file. The code below goes in values.yaml.

    replicaCount: 1

    image:
      registry: docker.io
      repository: provectuslabs/kafka-ui
      pullPolicy: IfNotPresent
      # Overrides the image tag whose default is the chart appVersion.
      tag: ""

    imagePullSecrets: []
    nameOverride: ""
    fullnameOverride: ""

    serviceAccount:
      # Specifies whether a service account should be created
      create: true
      # Annotations to add to the service account
      annotations: {}
      # The name of the service account to use.
      # If not set and create is true, a name is generated using the fullname template
      name: ""

    existingConfigMap: ""
    yamlApplicationConfig:
      # kafka:
      #   clusters:
      #     - name: Reimbursement
      #       bootstrapServers: kafka-service:9092
      spring:
        security:
      auth:
        type: OAUTH2
        oauth2:
          client:
            azure:
              clientId: "ClientID"
              clientSecret: "ClientSecret"
              scope: openid
              client-name: azure
              provider: azure
              authorization-grant-type: authorization_code
              issuer-uri: "https://login.microsoftonline.com/{TenantID}/v2.0"
              jwk-set-uri: "https://login.microsoftonline.com/{TenantID}/discovery/v2.0/keys"
              user-name-attribute: name
              custom-params:
                type: oauth
                roles-field: roles
      rbac:
        roles:
          - name: "admins" # Role 1
            clusters: # add the names of the clusters that will be created, so their clusters/topics/messages are visible
              - Cluster 1
              - Cluster 2
            subjects:
              - provider: oauth
                type: role
                value: "UIAdmin"
            permissions:
              - resource: applicationconfig
                actions: [ VIEW, EDIT ]
              - resource: clusterconfig
                actions: [ VIEW, EDIT ]
              - resource: topic
                value: ".*"
                actions:
                  - VIEW
                  - CREATE
                  - EDIT
                  - DELETE
                  - MESSAGES_READ
                  - MESSAGES_PRODUCE
                  - MESSAGES_DELETE
              - resource: consumer
                value: ".*"
                actions: [ VIEW, DELETE, RESET_OFFSETS ]
              - resource: schema
                value: ".*"
                actions: [ VIEW, CREATE, DELETE, EDIT, MODIFY_GLOBAL_COMPATIBILITY ]
              - resource: connect
                value: ".*"
                actions: [ VIEW, EDIT, CREATE, RESTART ]
              - resource: ksql
                actions: [ EXECUTE ]
              - resource: acl
                actions: [ VIEW, EDIT ]
          - name: "readonly" # Role 2
            clusters: # add the names of the clusters that will be created, so their clusters/topics/messages are visible
              - Cluster 1
              - Cluster 2
            subjects:
              - provider: oauth
                type: role
                value: "Viewer"
            permissions:
              - resource: clusterconfig
                actions: [ VIEW ]
              - resource: topic
                value: ".*"
                actions:
                  - VIEW
                  - CREATE
                  - EDIT
                  - DELETE
                  - MESSAGES_READ
                  - MESSAGES_PRODUCE
                  - MESSAGES_DELETE
              - resource: consumer
                value: ".*"
                actions: [ VIEW, DELETE, RESET_OFFSETS ]
              - resource: schema
                value: ".*"
                actions: [ VIEW, CREATE, DELETE, EDIT, MODIFY_GLOBAL_COMPATIBILITY ]
              - resource: connect
                value: ".*"
                actions: [ VIEW, EDIT, CREATE, RESTART ]
              - resource: ksql
                actions: [ EXECUTE ]
              - resource: acl
                actions: [ VIEW, EDIT ]
    yamlApplicationConfigConfigMap:
      {}
      # keyName: config.yml
      # name: configMapName
    existingSecret: ""
    envs:
      secret: {}
      config: {}

    networkPolicy:
      enabled: false
      egressRules:
        ## Additional custom egress rules
        customRules: []
      ingressRules:
        ## Additional custom ingress rules
        customRules: []

    podAnnotations: {}
    podLabels: {}

    annotations: {}

    probes:
      useHttpsScheme: false

    podSecurityContext:
      {}
      # fsGroup: 2000

    securityContext:
      {}
      # capabilities:
      #   drop:
      #     - ALL
      # readOnlyRootFilesystem: true
      # runAsNonRoot: true
      # runAsUser: 1000

    service:
      type: ClusterIP
      port: 80

    ingress:
      enabled: false
      annotations: {}
      ingressClassName: ""
      path: "/"
      pathType: "Prefix"
      host: ""
      tls:
        enabled: false
        secretName: ""

    resources:
      {}
      # limits:
      #   cpu: 200m
      #   memory: 512Mi
      # requests:
      #   cpu: 200m
      #   memory: 256Mi

    autoscaling:
      enabled: false
      minReplicas: 1
      maxReplicas: 100
      targetCPUUtilizationPercentage: 80
      # targetMemoryUtilizationPercentage: 80

    nodeSelector: {}
    tolerations: []
    affinity: {}

    env:
      - name: DYNAMIC_CONFIG_ENABLED
        value: 'true'
      - name: LOGGING_LEVEL_ROOT
        value: DEBUG
      - name: LOGGING_LEVEL_REACTOR
        value: DEBUG

    initContainers: {}
    volumeMounts: {}
    volumes: {}
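The commented-out kafka block in the values above can be filled in so that the cluster is registered at deploy time rather than through the UI. The fragment below is a sketch under assumptions: `kafka-service:9092` is the placeholder address from the commented block and needs to point at a broker reachable from inside the Kubernetes cluster, and the name is chosen to match an entry in the `clusters` lists of the RBAC roles, since a role applies only to the clusters it names.

```yaml
yamlApplicationConfig:
  kafka:
    clusters:
      # The name should match an entry under rbac.roles[].clusters.
      - name: "Cluster 1"
        # Placeholder address taken from the commented example; replace as needed.
        bootstrapServers: kafka-service:9092
```

The `auth` and `rbac` sections stay alongside this block under `yamlApplicationConfig`, as in the full values file.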

Install Kafka-UI using Helm: Navigate to the folder where the values.yaml file is stored and execute the command below. It deploys the Provectus image to the Kubernetes cluster and applies the Azure AD and RBAC configuration.

    helm install kafka-ui kafka-ui/kafka-ui -f values.yaml

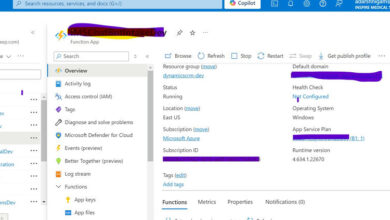

Access Kafka-UI in your Kubernetes cluster by port forwarding.

Execute the command below to check that the Pod has been created and is running.

    kubectl get pods

Use the command below to set up port forwarding, replacing $POD_NAME with the Pod name reported by the previous command.

    kubectl port-forward --namespace default $POD_NAME 9091:8080

Browse to 'http://localhost:9091/'.

Conclusion

Kafka UI tools are essential for effectively managing and monitoring Kafka clusters. By providing a graphical interface, these tools simplify tasks that would otherwise be complex and time-consuming. Whether you're a Kafka beginner or an experienced administrator, a well-chosen Kafka UI tool can greatly improve your productivity and efficiency.

Provectus is a valuable tool for anyone working with Apache Kafka. Its intuitive interface, comprehensive features, and open-source nature make it a popular choice among developers and administrators. By using Provectus, you can effectively manage your Kafka clusters, optimize performance, and ensure data reliability.

By implementing robust authentication and authorization mechanisms, organizations can protect their Kafka clusters from unauthorized access and data breaches. This not only safeguards sensitive information but also ensures the reliability and integrity of the streaming platform.