Handling a Live Data Feed Using an Azure Stream Analytics Job

Azure Event Hubs is one of the best ways to ingest events into Stream Analytics. The demo application generates events and sends them to an event hub. If you're new to Event Hubs, please refer to my earlier article about it.

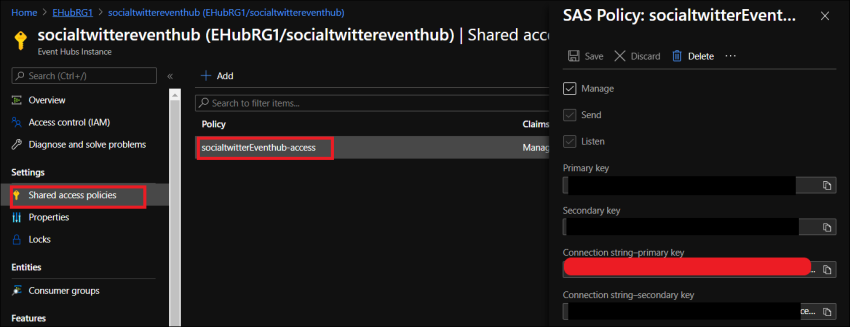

Next, you'll need to create an Event Hubs namespace and an event hub. Since there are plenty of resources available online on how to do this, I'm going to skip showing the steps to create a namespace and event hub. Make sure to note down the connection string from Shared access policies, highlighted in the screenshot.
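Before pasting that connection string anywhere, it can help to sanity-check it. The sketch below is a hypothetical helper, not part of TwitterClientCore or any Azure SDK: it splits an Event Hubs connection string into its Key=Value parts and reports which of the usual required parts (Endpoint, SharedAccessKeyName, SharedAccessKey) are missing. EntityPath appears only when the string is copied from a policy on the event hub itself rather than on the namespace, so it is treated as optional here.

```python
def parse_connection_string(conn_str: str) -> dict[str, str]:
    """Split an Event Hubs connection string into its key/value parts.

    Parts have the form Key=Value and are separated by ';'. Values such as
    SharedAccessKey can contain '=' (base64 padding), so each part is split
    on the first '=' only.
    """
    parts = {}
    for segment in conn_str.strip().split(";"):
        if not segment:
            continue  # tolerate a trailing ';'
        key, sep, value = segment.partition("=")
        if not sep:
            raise ValueError(f"malformed segment: {segment!r}")
        parts[key.strip()] = value.strip()
    return parts


def check_connection_string(conn_str: str) -> list[str]:
    """Return the names of required parts that are missing or empty."""
    required = ["Endpoint", "SharedAccessKeyName", "SharedAccessKey"]
    parts = parse_connection_string(conn_str)
    return [name for name in required if not parts.get(name)]
```

An empty list from check_connection_string means the string at least has the expected shape; it does not prove the key is valid, which only a real connection attempt can confirm.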

Start the Twitter client application

For the application to receive tweet events directly, it needs permission to call the Twitter Streaming APIs. We'll walk through that in the following steps.

Configuring our application

For the sake of simplicity, I won't explain how to create a Twitter app here. One thing to note: make sure you copy the consumer API key and consumer API secret key when you create the app.

Before starting our application, which uses the Twitter API to collect tweets about a topic, we have to provide certain information, including the API keys and the Event Hubs connection string.
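These values live in the appSettings section of app.config, as add elements with key and value attributes. If you'd rather not edit the file by hand, a minimal Python sketch like the one below can fill them in. It is not part of TwitterClientCore, and the key names used in the usage line (oauth_consumer_key, EventHubNameConnectionString) are assumptions for illustration; use whatever keys your copy of app.config actually contains.

```python
import xml.etree.ElementTree as ET


def update_app_settings(xml_text: str, values: dict[str, str]) -> str:
    """Return app.config XML with matching <appSettings><add> values replaced.

    Only keys that already exist in the file are changed; an unknown key
    raises KeyError so a typo is caught instead of silently ignored.
    Note: ElementTree drops XML comments and the XML declaration on output.
    """
    root = ET.fromstring(xml_text)
    settings = root.find("appSettings")
    if settings is None:
        raise ValueError("no <appSettings> element found")
    # Index existing <add key="..." value="..."/> entries by their key.
    entries = {entry.get("key"): entry for entry in settings.findall("add")}
    for key, value in values.items():
        if key not in entries:
            raise KeyError(f"{key!r} not present in appSettings")
        entries[key].set("value", value)
    return ET.tostring(root, encoding="unicode")


# Hypothetical usage: read, update, and write the file back.
# with open("app.config", encoding="utf-8-sig") as f:
#     text = f.read()
# updated = update_app_settings(text, {"oauth_consumer_key": "<your key>"})
# with open("app.config", "w", encoding="utf-8") as f:
#     f.write(updated)
```

Failing loudly on an unknown key is a deliberate choice: the app would otherwise start with an empty setting and fail later with a less obvious error.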

- Download the TwitterClientCore application from GitHub and save it in a local folder.

- Edit the app.config file in Notepad and change the appSettings values to match your keys and connection string.

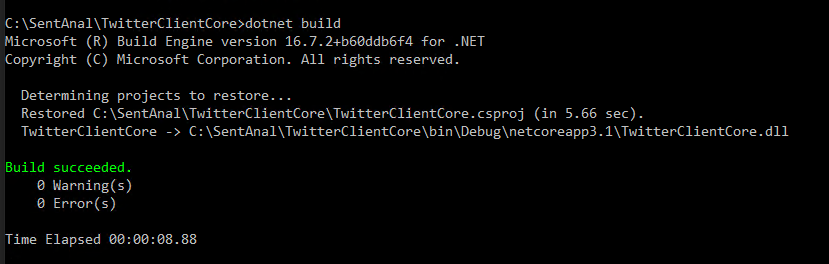

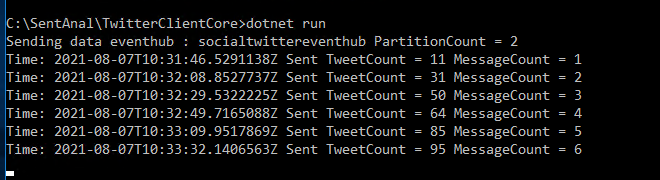

- After completing the above changes, go to the directory where the TwitterClientCore app is saved. If you don't have the .NET Core SDK yet, download it from the Microsoft website and install it on your system first (I used the .NET Core 3.1 SDK installer). Then build the project using the ‘dotnet build’ command and start the app using ‘dotnet run’:

dotnet build

dotnet run

You should now see the app sending tweets to your event hub.

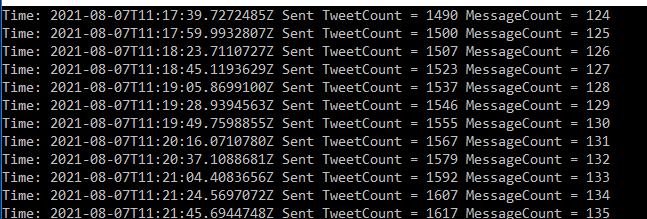

Now that we can see the app is successfully sending tweets into the event hub, we can proceed with setting up Stream Analytics.

Stream Analytics

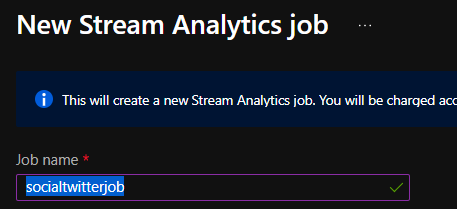

Create a new ‘Stream Analytics job’ resource in your resource group.

Create Input

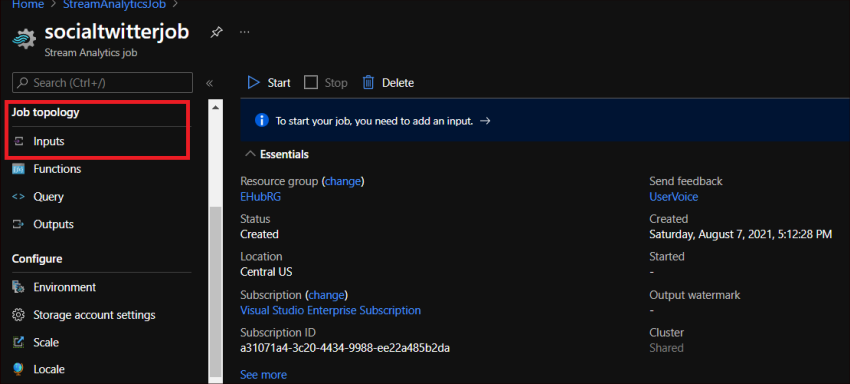

Once it's created, if you go to the resource you'll see it's currently in a ‘Stopped’ state, which is normal. The job can be started only after proper inputs and outputs have been configured, which is what we're going to do now.

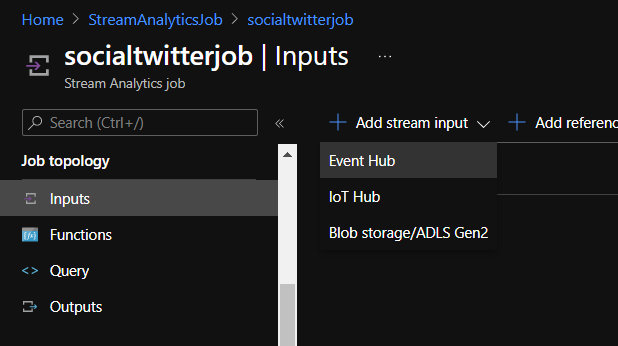

In our newly created Stream Analytics job, select ‘Inputs’ under the ‘Job topology’ category in the left menu.

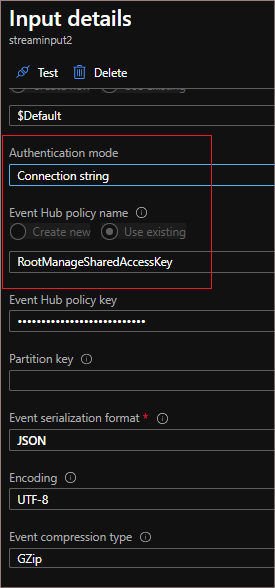

If you get a connection test failure while creating the input, make sure you have selected ‘Connection string’ as the authentication mode, along with the settings shown in the screenshot.

It's now time to create the query. Go to Overview and click the edit button on the query box.

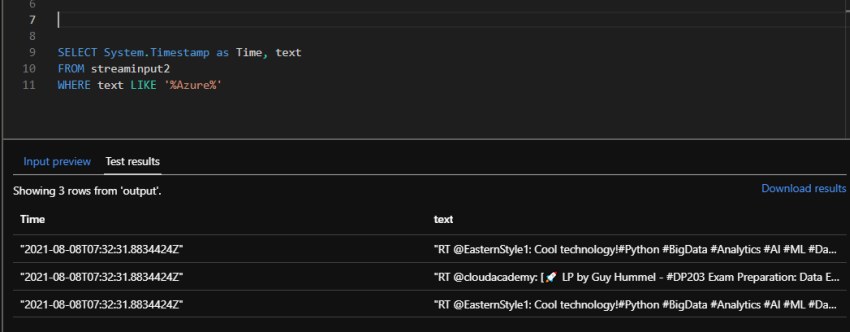

For testing, I'm giving it a simple query, “select * from streaminput2”. Remember that streaminput2 is the name we gave the input while creating it in the Stream Analytics job. Once you've typed the query, you can see the output in the ‘Input preview’ pane below.

You can also click the ‘Test query’ button with a different query and see the test results in the next pane below.
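To get a feel for what a different query might compute before trying it in the portal, here is a small local simulation of a tumbling-window count. It is not Stream Analytics itself: it's a plain-Python sketch that groups events into fixed, non-overlapping 10-second windows, roughly what a query grouping by TumblingWindow(second, 10) would report. The field names ts and topic are hypothetical, and the half-open window boundaries are a simplification that may not match the service exactly.

```python
from collections import Counter


def tumbling_counts(events, window_seconds=10):
    """Count events per (window_start, topic) in fixed, non-overlapping windows.

    Roughly mirrors a Stream Analytics query such as:
        SELECT Topic, COUNT(*) FROM streaminput2
        GROUP BY Topic, TumblingWindow(second, 10)
    Each event is a dict with hypothetical fields 'ts' (epoch seconds) and
    'topic'. Windows here are half-open: [start, start + window_seconds).
    """
    counts = Counter()
    for event in events:
        # Align the timestamp down to the start of its window.
        start = int(event["ts"] // window_seconds) * window_seconds
        counts[(start, event["topic"])] += 1
    # Sort by (window_start, topic) so results read in time order.
    return dict(sorted(counts.items()))


# Hypothetical sample events.
sample = [
    {"ts": 1, "topic": "azure"},
    {"ts": 9, "topic": "azure"},
    {"ts": 10, "topic": "azure"},
    {"ts": 12, "topic": "dotnet"},
]
print(tumbling_counts(sample))
# {(0, 'azure'): 2, (10, 'azure'): 1, (10, 'dotnet'): 1}
```

In the real job, the window timestamps come from the event arrival time unless the query uses TIMESTAMP BY on a field of the event.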

Create Output

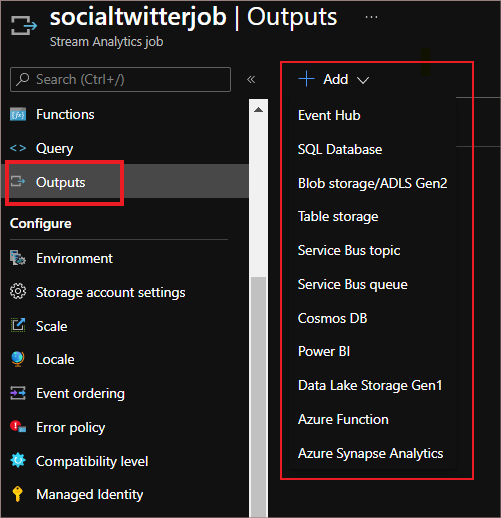

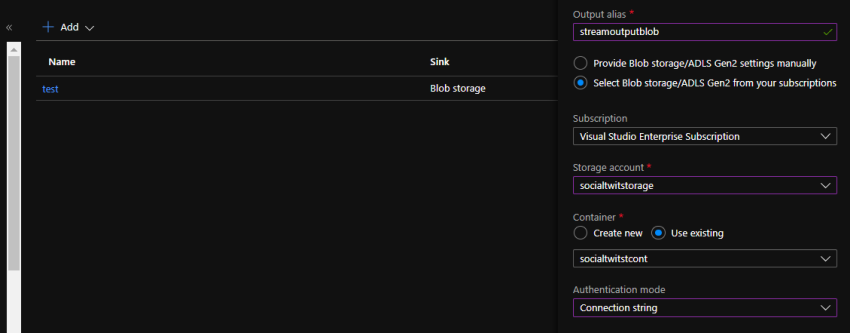

So far we have created an event stream, an event input, and a query to transform the incoming data from the event stream. The one remaining step is to configure the outputs of the Stream Analytics job.

We can push the results to Azure SQL Database, Azure Blob Storage, a Power BI dashboard, Azure Table storage, or even to Event Hubs, depending on your needs. Here I'll demo pushing to Azure Blob Storage.

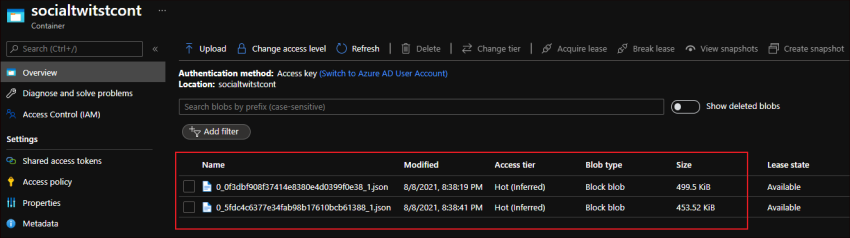

Now that we have successfully configured the input, query, and output, we can go ahead and start the Stream Analytics job. Before starting it, make sure the Twitter client application we started earlier is running.

We can see the data being stored as JSON files in Blob Storage. If we refresh, we can see the size of the files gradually increasing, which confirms that data is being stored.
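If you download one of those blobs to inspect it, a small parser helps. The JSON serialization settings on the Blob output can write either one JSON object per line or a single JSON array; the sketch below accepts both and returns the records as a list, so comparing the count across refreshes is another way to confirm new data is arriving. It expects a complete, well-formed file, and the function name is hypothetical.

```python
import json


def parse_asa_json_blob(text: str) -> list:
    """Parse the contents of one JSON blob written by a Stream Analytics output.

    Accepts either a single top-level JSON array or line-separated JSON
    (one object per line). Blank lines are ignored.
    """
    stripped = text.strip()
    if not stripped:
        return []
    if stripped.startswith("["):
        # Array layout: the whole blob is one JSON array.
        return json.loads(stripped)
    # Line-separated layout: each non-empty line is its own JSON document.
    return [json.loads(line) for line in stripped.splitlines() if line.strip()]
```

For example, calling len(parse_asa_json_blob(text)) on a freshly downloaded copy gives the number of tweets written to that blob so far.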

Summary

Thanks for staying this long to read this article. I always like to keep things short, but given this is an end-to-end project, it took a while. Kudos to you all!

What next?

We have introduced ourselves to Azure Stream Analytics, through which we can easily capture live stream data into Blob Storage. In future articles, we'll see how to save live feed data into a SQL database and then create a real-time Power BI dashboard from a live Twitter feed, which I believe will be interesting topics.
