Azure Data Factory (ADF) Overview for Beginners

What is Azure Data Factory?

Azure Data Factory is a managed cloud service built for complex hybrid extract-transform-load (ETL), extract-load-transform (ELT), and data integration projects.

Why Choose ADF over On-Prem SSIS Packages?

Whenever anyone hears about ETL or ELT packages in Azure Data Factory, the first question that comes to mind is: why not use on-prem SSIS packages to achieve the same thing? The answer is that ADF is not a replacement for SSIS. Both are ETL tools with some shared functionality, but they are separate products, each with its own strengths and weaknesses.

Here are a few important points that help us understand the benefits of ADF over SSIS.

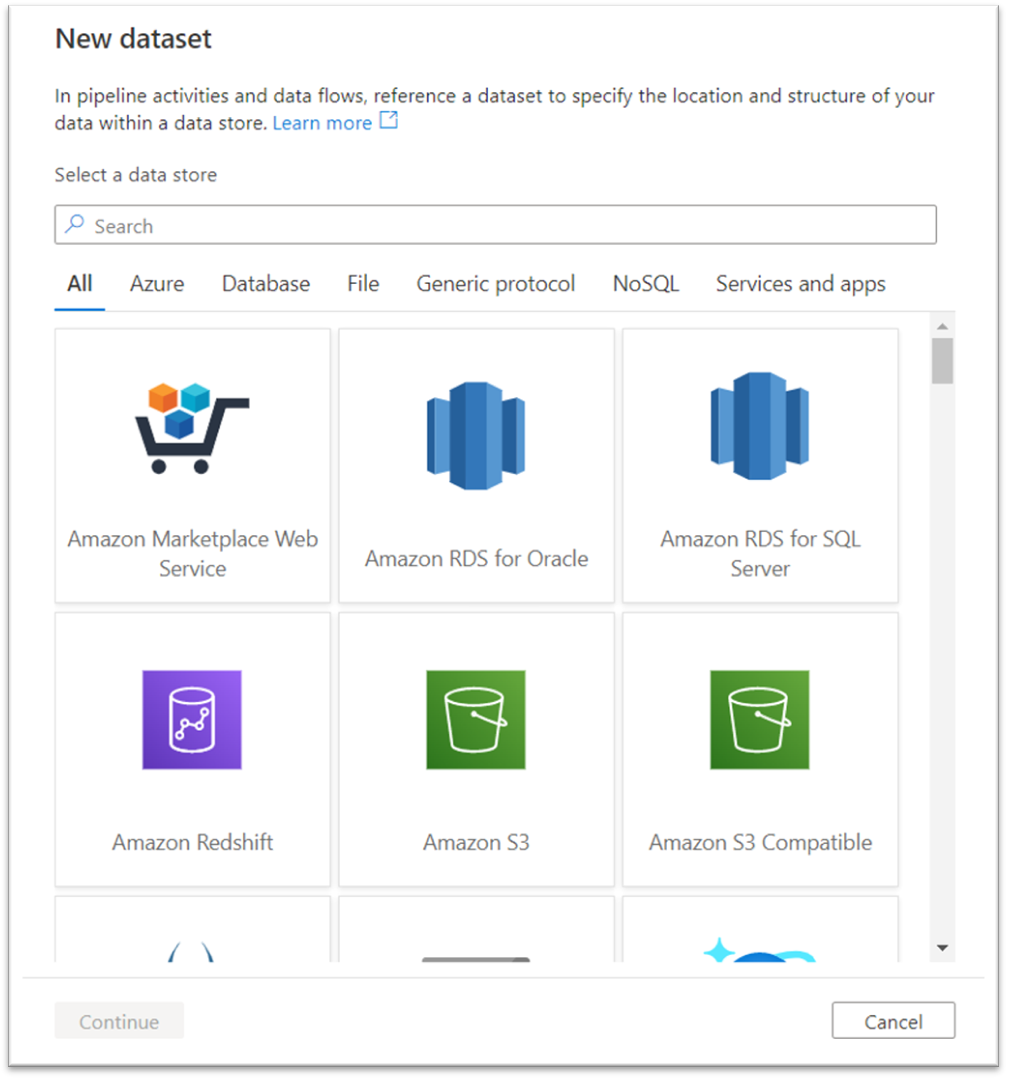

- ADF comes with 90+ built-in connectors at no added cost. This means ADF allows us to migrate data across multiple platforms. The snapshot below shows popular platforms that have an ADF connector.

Reference: https://docs.microsoft.com/en-us/azure/data-factory/connector-overview

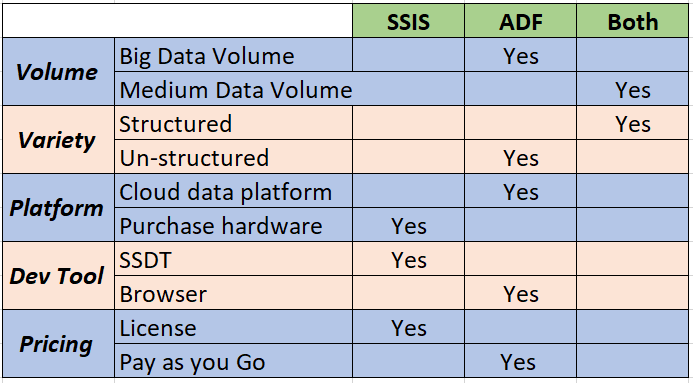

- Given below is a quick comparison between SSIS and ADF that describes the benefits of using ADF over SSIS in the cloud.

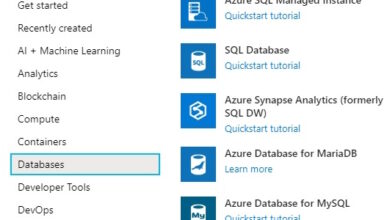

Overview of Azure Data Factory

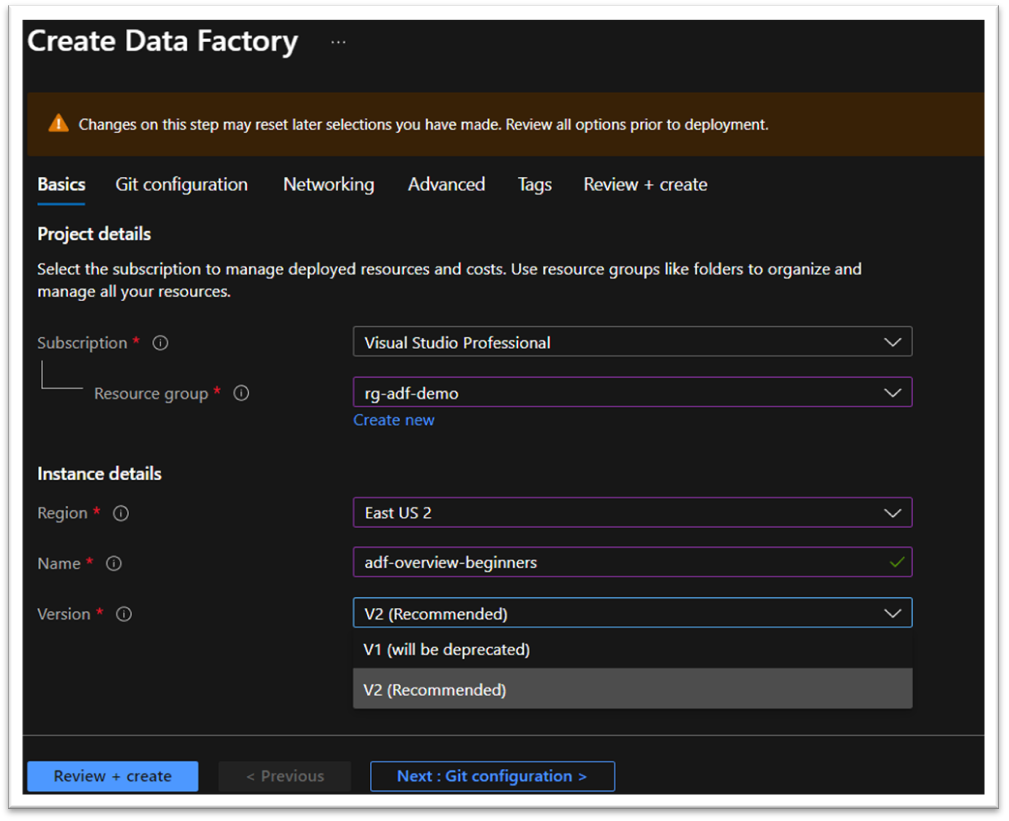

As we can see below, the current ADF version is V2, which is the latest and recommended version, as V1 is going to be deprecated very soon.

You can find more details about the advantages of V2 over V1 on the Microsoft website.

Reference: https://docs.microsoft.com/en-us/azure/data-factory/compare-versions

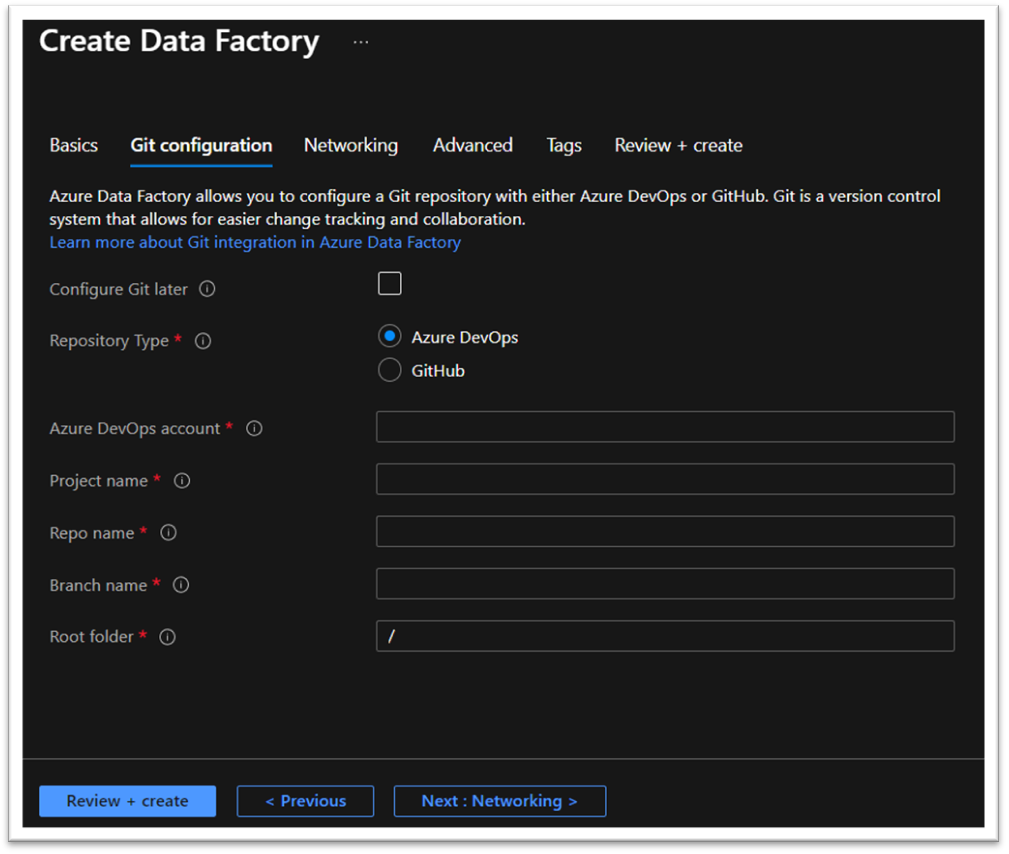

ADF also supports version control, along with CI/CD pipeline deployment through Azure DevOps as well as GitHub. This makes deployment and migration from one environment to the next easier.

Networking is one of the most important parts of dealing with data in the cloud. ADF comes with a managed virtual network, and it can also use a private endpoint for the self-hosted integration runtime.

The Integration Runtime (IR) is the compute infrastructure used by Azure Data Factory and Azure Synapse pipelines to provide data integration capabilities across different network environments.

Creating an Azure IR inside a managed virtual network ensures that the data integration process is isolated and secure.

It does not require deep Azure networking knowledge to do data integrations securely. Instead, getting started with secure ETL is much simpler for data engineers.

A managed virtual network, together with managed private endpoints, protects against data exfiltration.

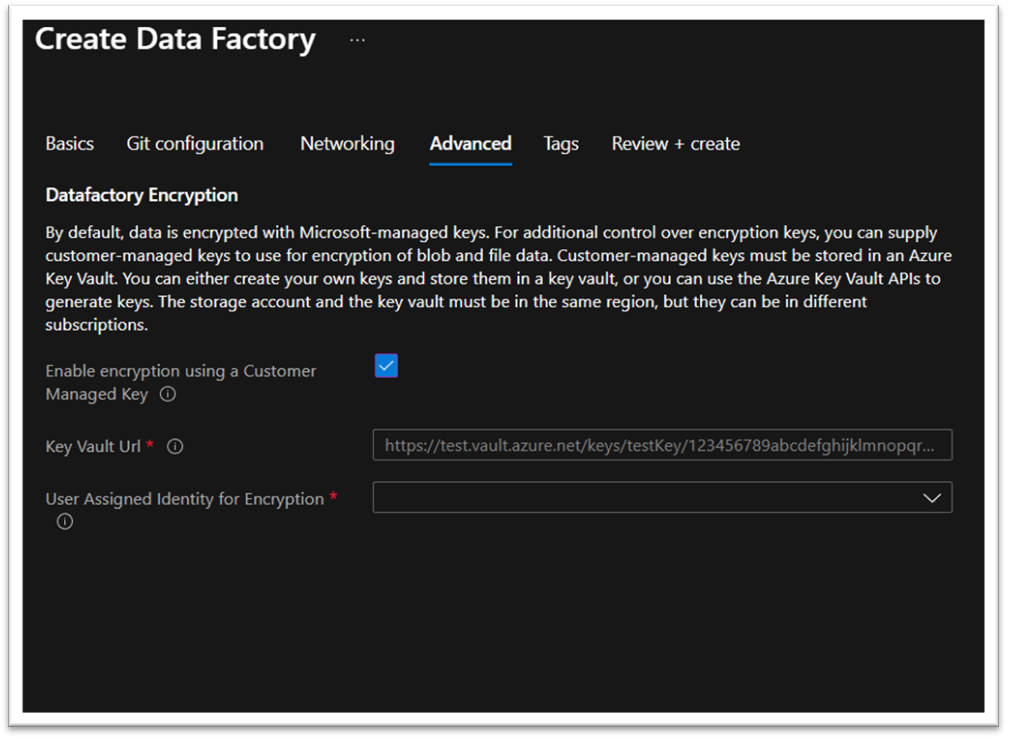

As the description below shows, data is encrypted with Microsoft-managed keys by default. In addition to this, ADF allows us to take control of encryption by defining our own customer-managed key, which is accessed through Key Vault.

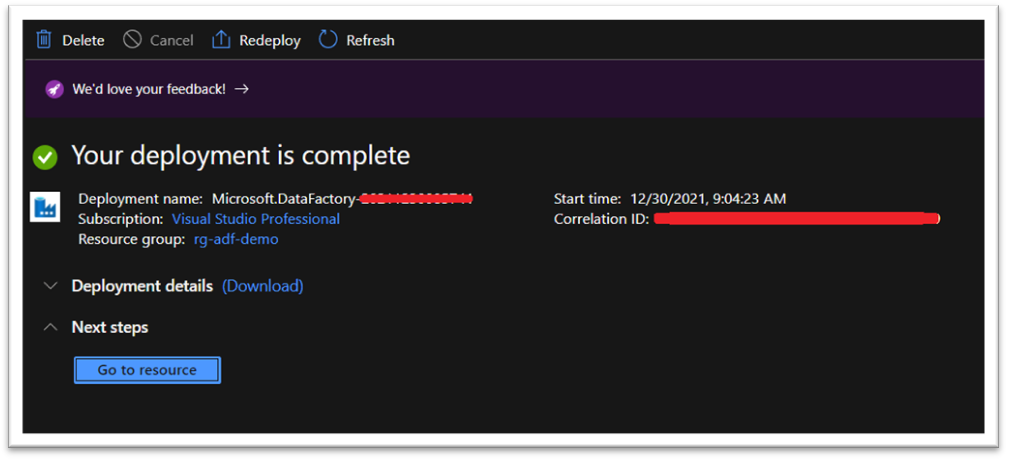

With the above set of configurations, our first ADF is ready to be deployed.

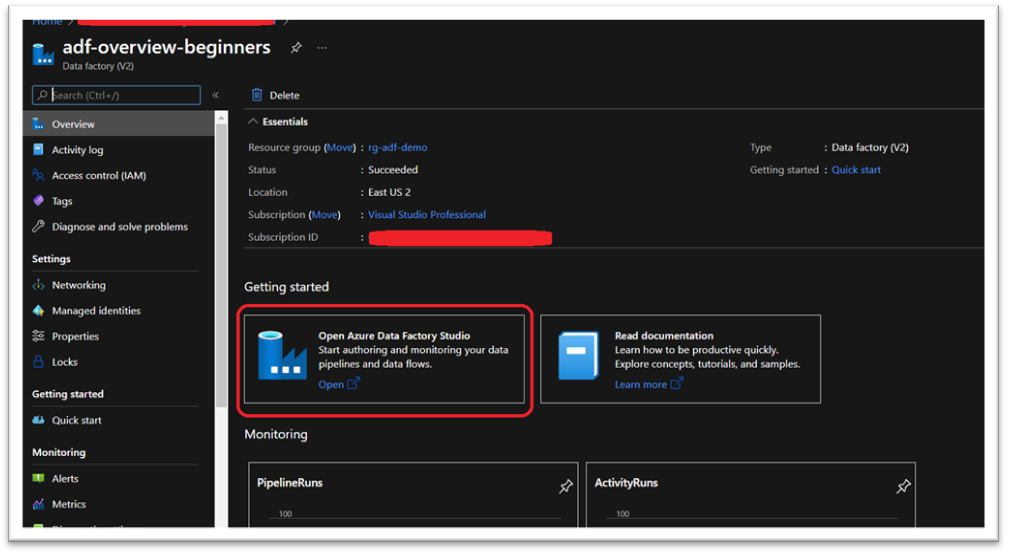

Once the resource is deployed, we can see all of its properties on the screen below. However, pipeline development happens in the ‘https://adf.azure.com/’ portal. To navigate to the portal, simply click on the ‘Open Azure Data Factory Portal’ box below the ‘Getting Started’ title.

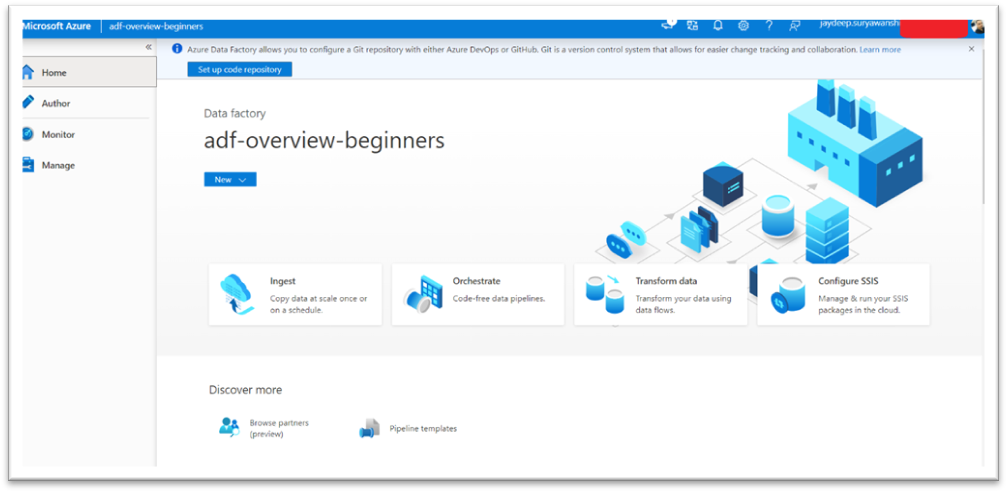

This is what ‘Data Factory Studio’ looks like.

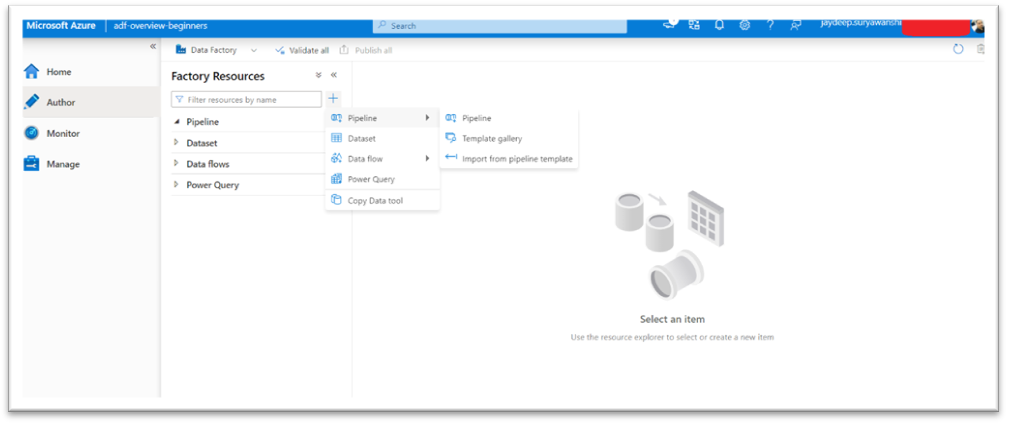

To create pipeline click on on Creator and it gives you additional choices to create number of packages.

First step in information manufacturing unit is to create datasets which might be required in pipeline as supply and vacation spot of information.

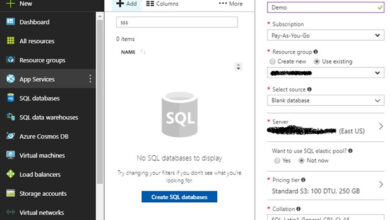

To start with the dataset configuration, I have created two Azure SQL databases to keep this demo simple.

I have also created an employee table with a few entries in the source database, and an empty table in the destination database.

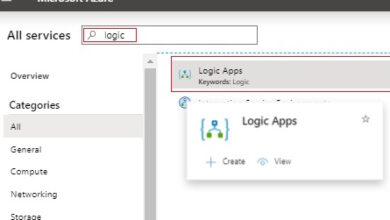

As I mentioned above, the screen below shows the many connectors that we can configure to migrate or transform huge amounts of data.

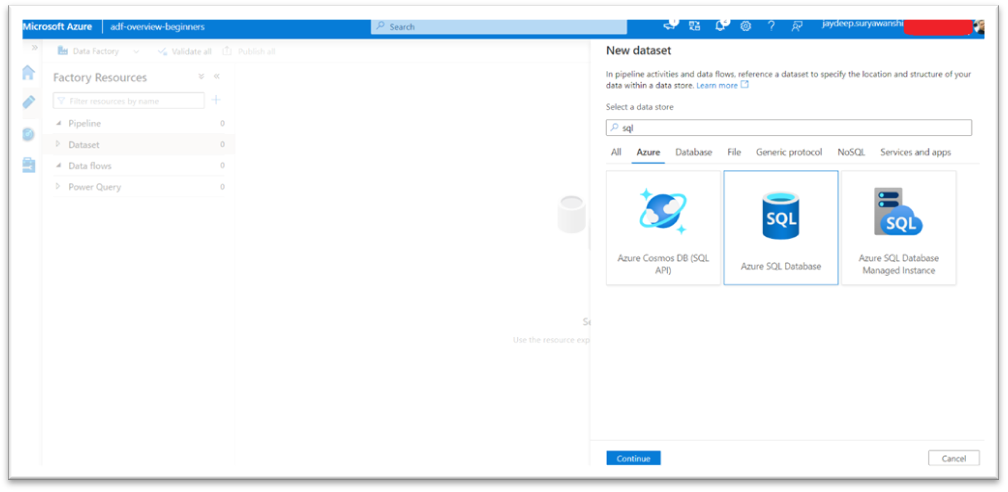

Let’s create two datasets, i.e., source and destination.

In the dataset, we need to configure a linked service as below.

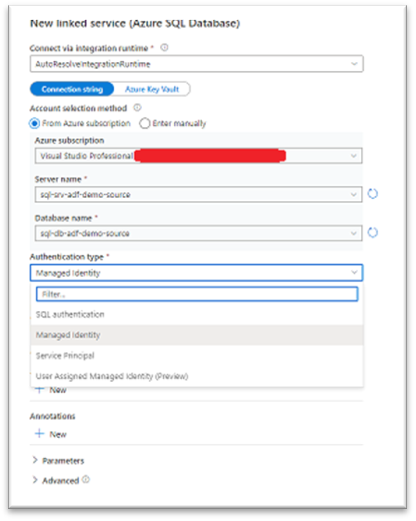

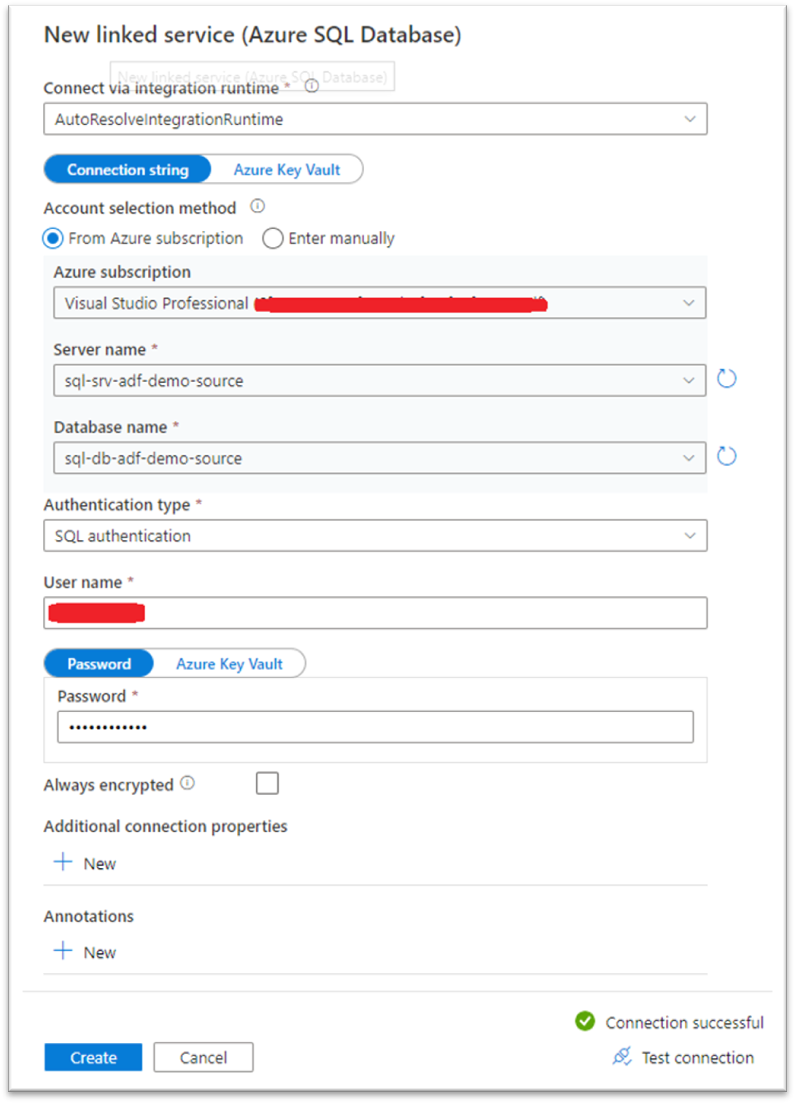

In the linked service configuration, select the subscription, server name, and database. We can also make use of Key Vault here to connect to the SQL instance.

We can configure SQL connectivity in several ways, such as SQL authentication, managed identity, service principal, and user-assigned managed identity.

In this demo, we are using SQL authentication.

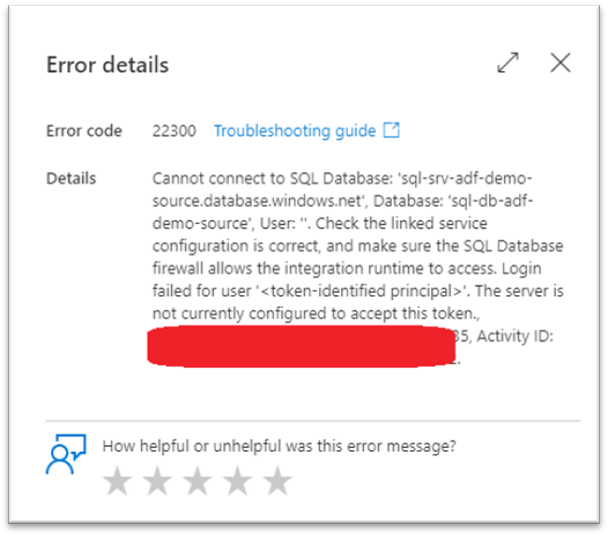
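For readers who prefer to see what the UI produces, here is a rough sketch of the kind of JSON definition that sits behind an Azure SQL Database linked service using SQL authentication. The linked service name, server, database, and credentials are made-up placeholders, and in a real factory the password would normally come from Key Vault rather than being written inline.

```python
import json


def azure_sql_linked_service(name, server, database, user, password):
    """Build a linked service definition for Azure SQL Database (SQL authentication)."""
    connection_string = (
        f"Server=tcp:{server},1433;Database={database};"
        f"User ID={user};Password={password};"
        "Encrypt=True;Connection Timeout=30"
    )
    return {
        "name": name,
        "properties": {
            "type": "AzureSqlDatabase",
            "typeProperties": {"connectionString": connection_string},
        },
    }


# All values below are hypothetical placeholders, not taken from the demo.
source_ls = azure_sql_linked_service(
    name="SourceSqlLinkedService",
    server="example-server.database.windows.net",
    database="sourcedb",
    user="demo_user",
    password="<password>",
)
print(json.dumps(source_ls, indent=2))
```

The same helper can be called a second time with the destination database name to describe the destination linked service.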

After configuration, you can verify SQL connectivity by clicking the ‘Test connection’ link at the bottom. If you get the error shown below, make sure that the Data Factory IP is added to the firewall rules of the Azure SQL server.

With the above steps, we are done with the source dataset configuration. We need to follow the same steps for the destination dataset configuration.

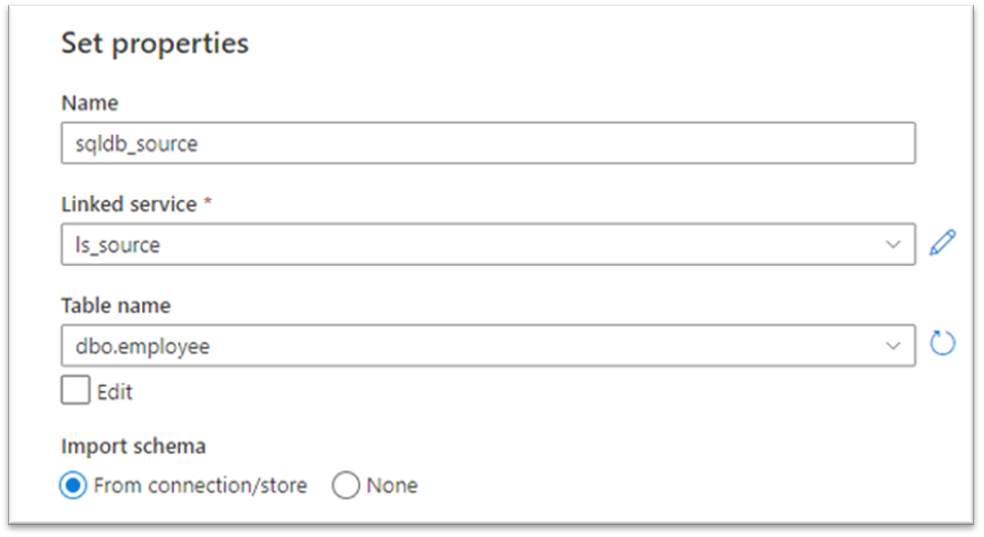

Source Dataset:

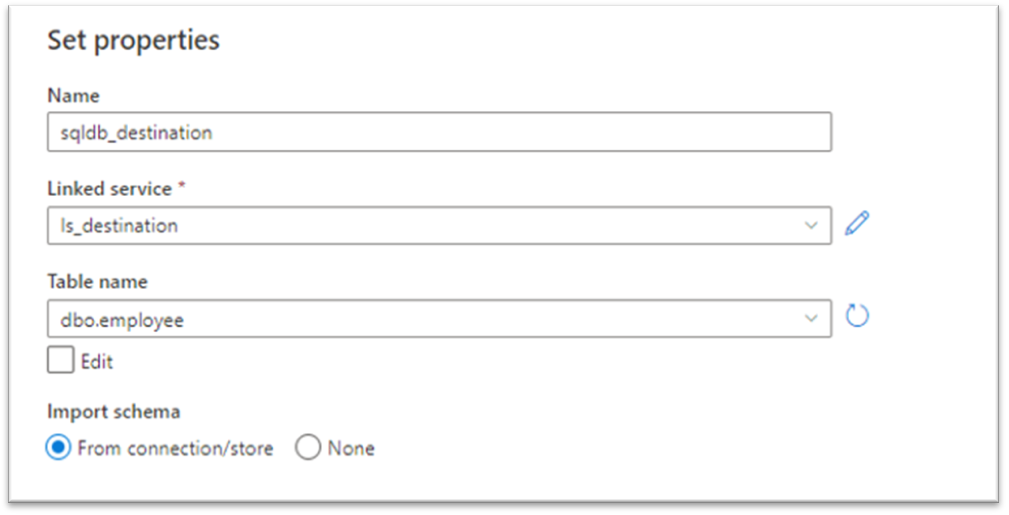

Destination Dataset:

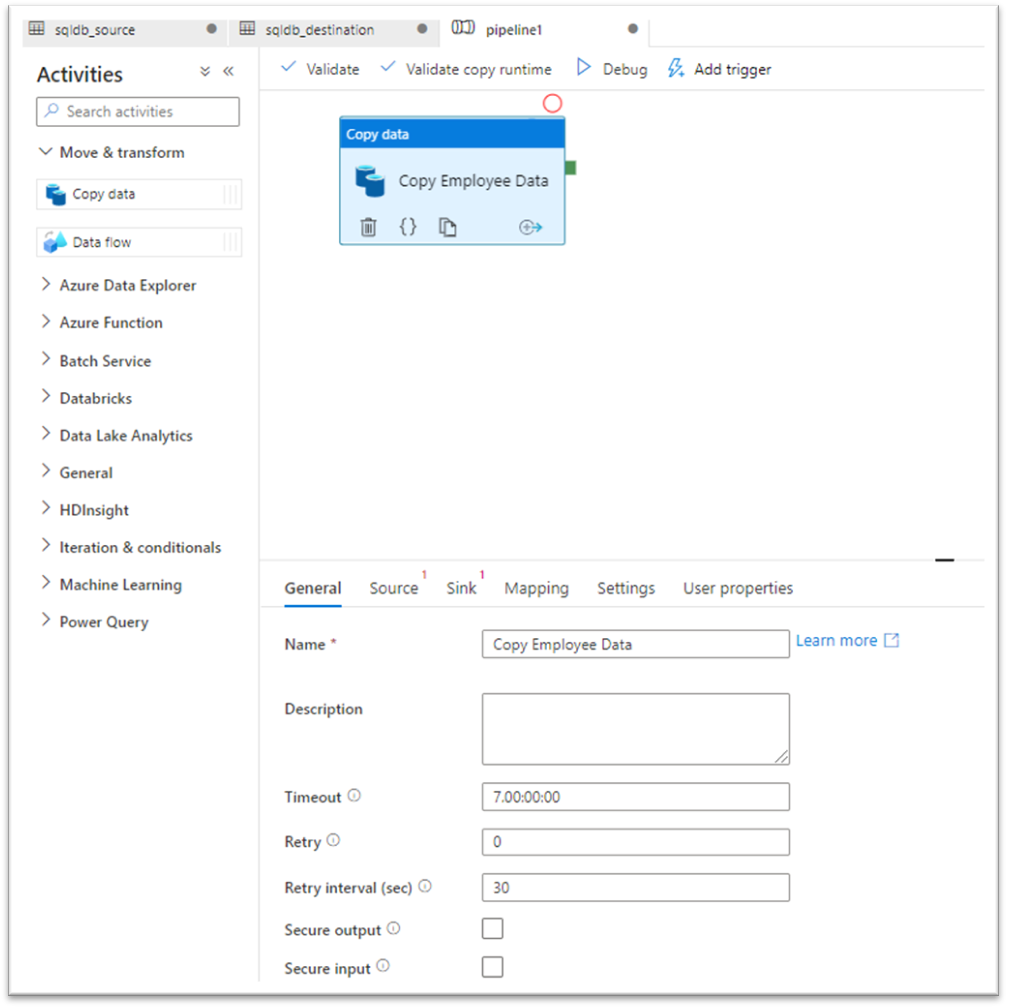
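The two datasets configured above can also be pictured as small JSON documents that point at a linked service and a table. The sketch below builds them as Python dictionaries. The dataset names, linked service names, and the `dbo` schema are assumptions made for illustration; only the employee table comes from the demo.

```python
import json


def azure_sql_table_dataset(name, linked_service_name, schema, table):
    """Build a dataset definition that points at one table of an Azure SQL database."""
    return {
        "name": name,
        "properties": {
            "type": "AzureSqlTable",
            "linkedServiceName": {
                "referenceName": linked_service_name,
                "type": "LinkedServiceReference",
            },
            "typeProperties": {"schema": schema, "table": table},
        },
    }


# Hypothetical names; the demo only tells us the table is called employee.
source_ds = azure_sql_table_dataset(
    "SourceEmployeeDataset", "SourceSqlLinkedService", "dbo", "employee"
)
destination_ds = azure_sql_table_dataset(
    "DestinationEmployeeDataset", "DestinationSqlLinkedService", "dbo", "employee"
)

for dataset in (source_ds, destination_ds):
    print(json.dumps(dataset, indent=2))
```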

Now we are good to start creating the Data Factory pipeline. We will be using the ‘Copy Data’ activity to transfer data from the source SQL database to the destination SQL database.

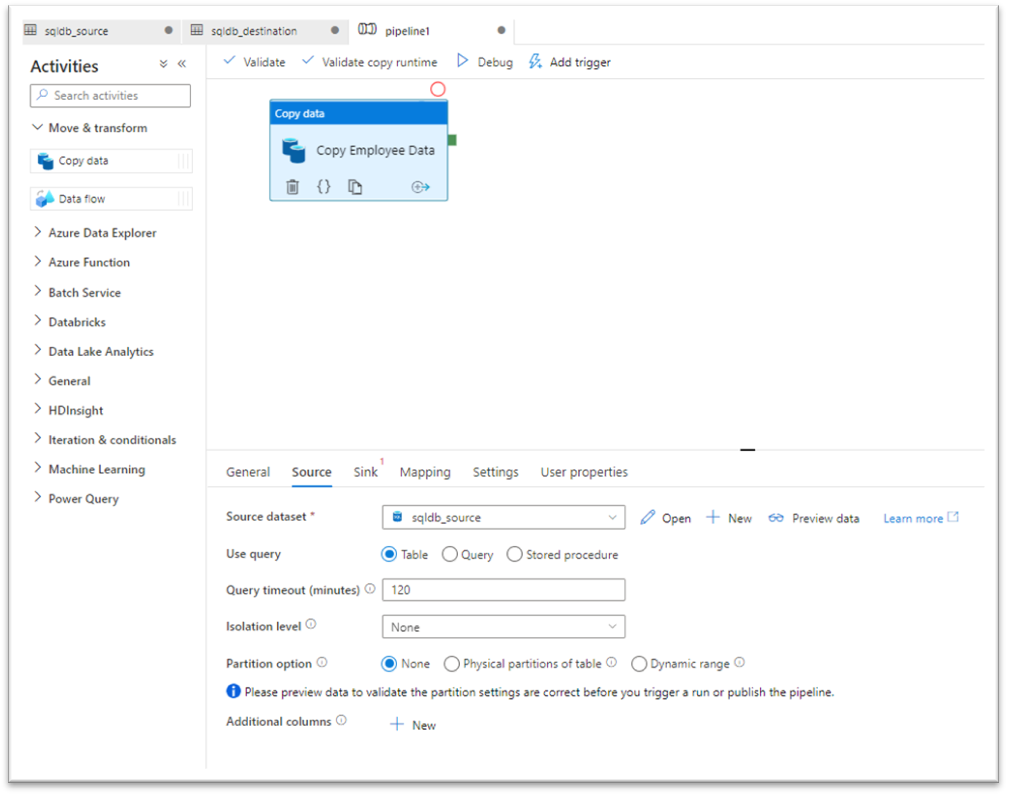

Configure the source dataset we created in the Source tab as below.

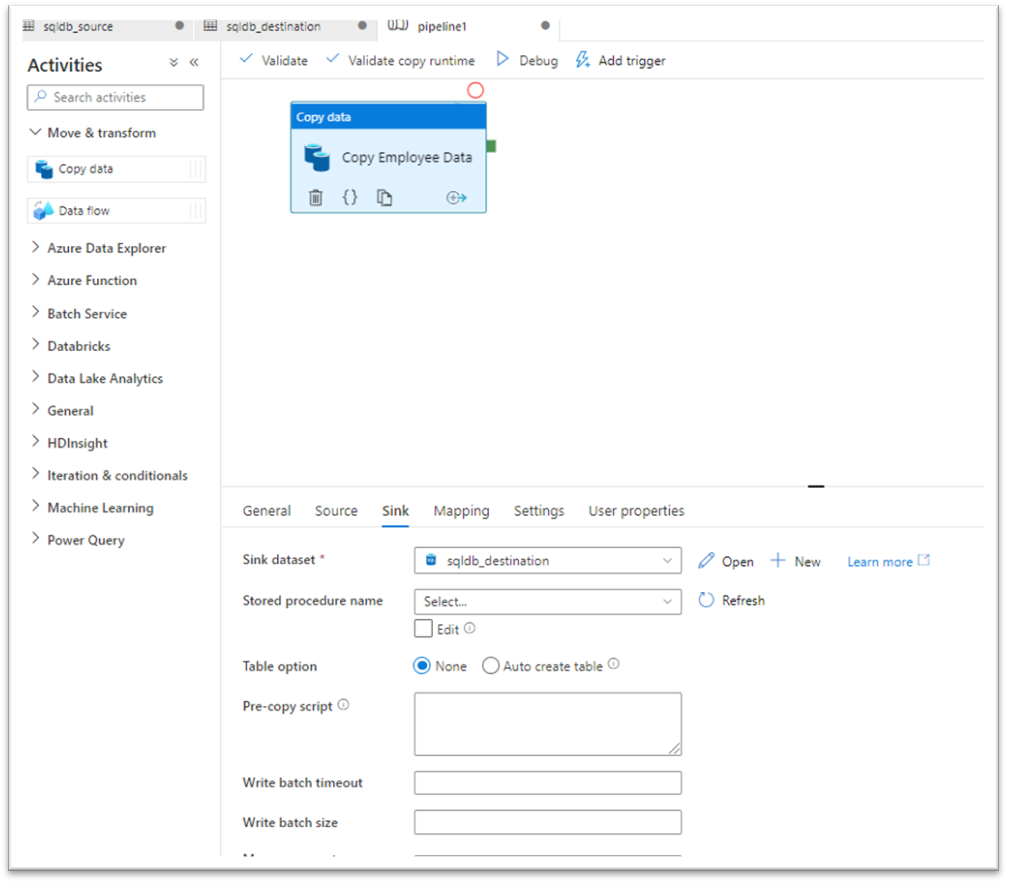

Configure the destination dataset we created in the Sink tab as below.

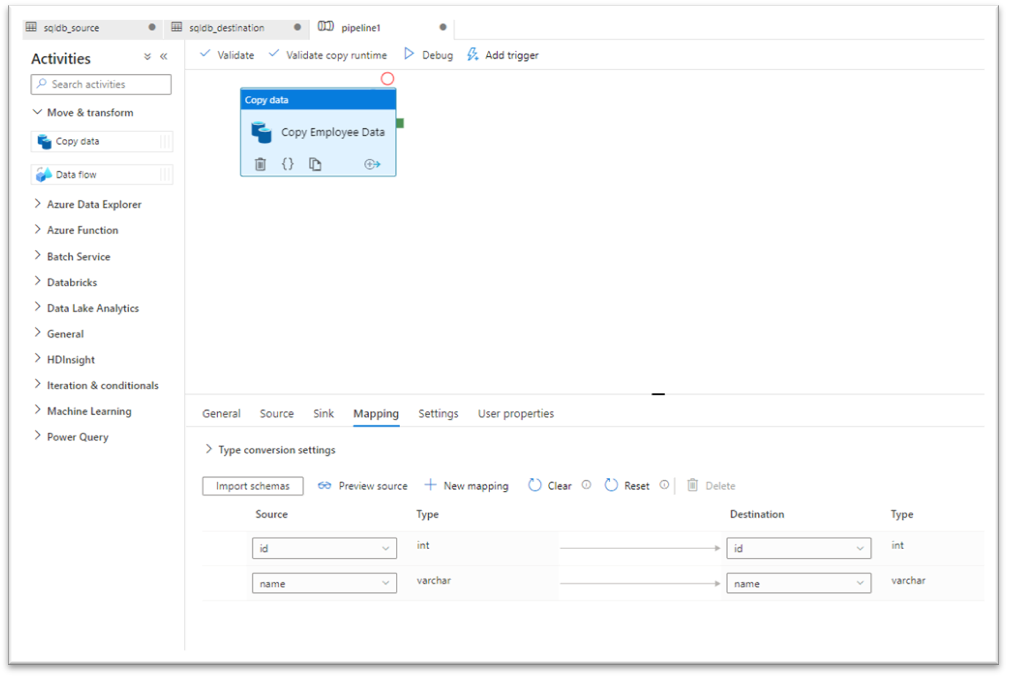
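Behind the Source and Sink tabs, a pipeline with a single copy activity boils down to a definition roughly like the one sketched here. The pipeline, activity, and dataset names are invented for this example, and the sketch leaves out optional settings such as retries and timeouts.

```python
import json


def copy_pipeline(name, activity_name, source_dataset, sink_dataset):
    """Build a pipeline with one Copy activity between two Azure SQL datasets."""
    return {
        "name": name,
        "properties": {
            "activities": [
                {
                    "name": activity_name,
                    "type": "Copy",
                    "inputs": [
                        {"referenceName": source_dataset, "type": "DatasetReference"}
                    ],
                    "outputs": [
                        {"referenceName": sink_dataset, "type": "DatasetReference"}
                    ],
                    "typeProperties": {
                        "source": {"type": "AzureSqlSource"},
                        "sink": {"type": "AzureSqlSink"},
                    },
                }
            ]
        },
    }


# Hypothetical names chosen for illustration only.
pipeline = copy_pipeline(
    "CopyEmployeePipeline",
    "CopyEmployeeData",
    "SourceEmployeeDataset",
    "DestinationEmployeeDataset",
)
print(json.dumps(pipeline, indent=2))
```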

Verify the mapping and make changes as needed under the Mapping tab.

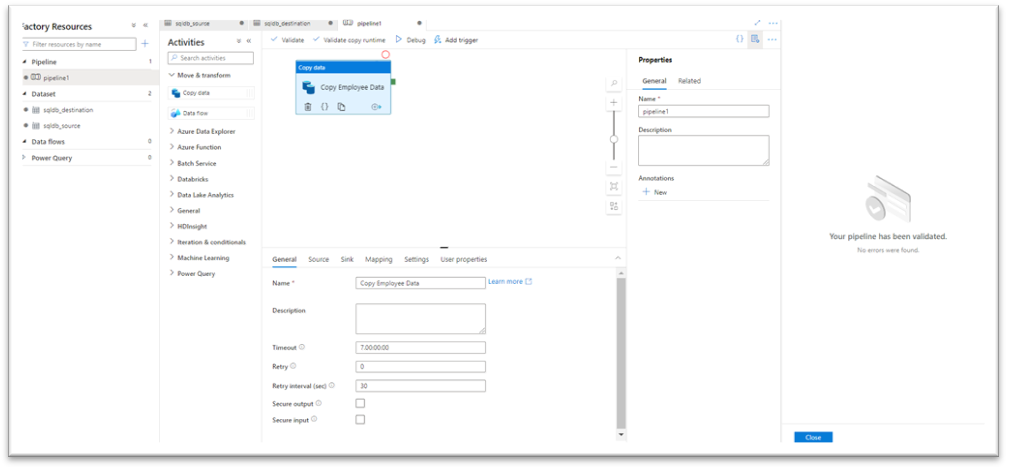
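The Mapping tab is stored as a translator section inside the copy activity. As a hedged illustration, the helper below turns a plain source-to-sink column dictionary into that shape. The column names are hypothetical, since the article does not list the columns of the employee table.

```python
import json


def tabular_translator(column_map):
    """Turn {source_column: sink_column} into a copy activity translator section."""
    return {
        "type": "TabularTranslator",
        "mappings": [
            {"source": {"name": source_col}, "sink": {"name": sink_col}}
            for source_col, sink_col in column_map.items()
        ],
    }


# Hypothetical columns; adjust to match the real employee table.
translator = tabular_translator(
    {"EmployeeId": "EmployeeId", "Name": "FullName", "Dept": "Department"}
)
print(json.dumps(translator, indent=2))
```

When the source and sink columns already have identical names, the default mapping is usually enough and an explicit translator like this is only needed for renames.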

Once the Source, Sink, and Mapping configuration is done, click on ‘Validate’ to verify that there are no errors.

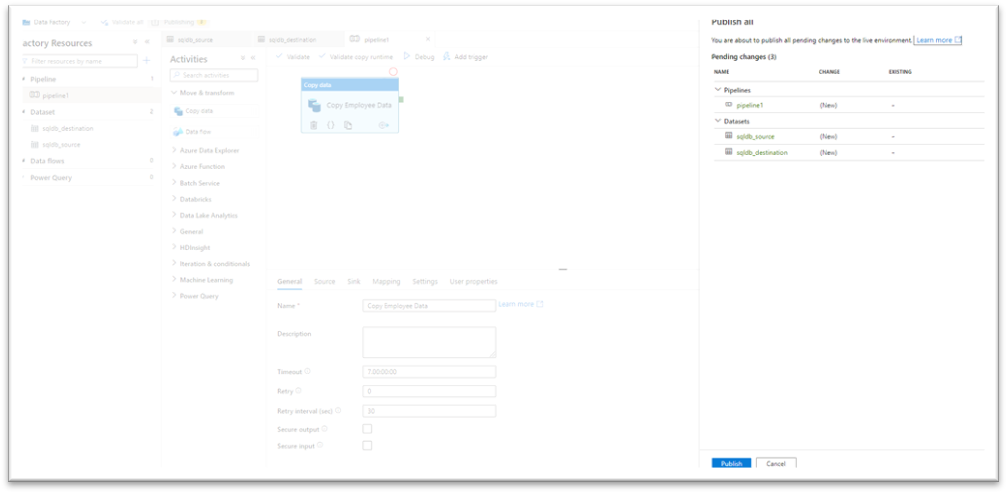
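To give a feel for the kind of problem validation catches, here is a small local check that looks for activities referencing datasets that do not exist. This is only an illustrative helper written for this article, not what the ‘Validate’ button actually runs, and all names in it are hypothetical.

```python
def find_unknown_datasets(pipeline, known_datasets):
    """Return (activity name, dataset name) pairs for references to unknown datasets."""
    missing = []
    for activity in pipeline["properties"]["activities"]:
        references = activity.get("inputs", []) + activity.get("outputs", [])
        for ref in references:
            if ref["referenceName"] not in known_datasets:
                missing.append((activity["name"], ref["referenceName"]))
    return missing


# A deliberately broken pipeline: the sink dataset name has a typo.
pipeline = {
    "name": "CopyEmployeePipeline",
    "properties": {
        "activities": [
            {
                "name": "CopyEmployeeData",
                "type": "Copy",
                "inputs": [{"referenceName": "SourceEmployeeDataset", "type": "DatasetReference"}],
                "outputs": [{"referenceName": "DestinationEmployeDataset", "type": "DatasetReference"}],
            }
        ]
    },
}
known = {"SourceEmployeeDataset", "DestinationEmployeeDataset"}

problems = find_unknown_datasets(pipeline, known)
print(problems)
```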

Now we are ready to publish our changes to the server.

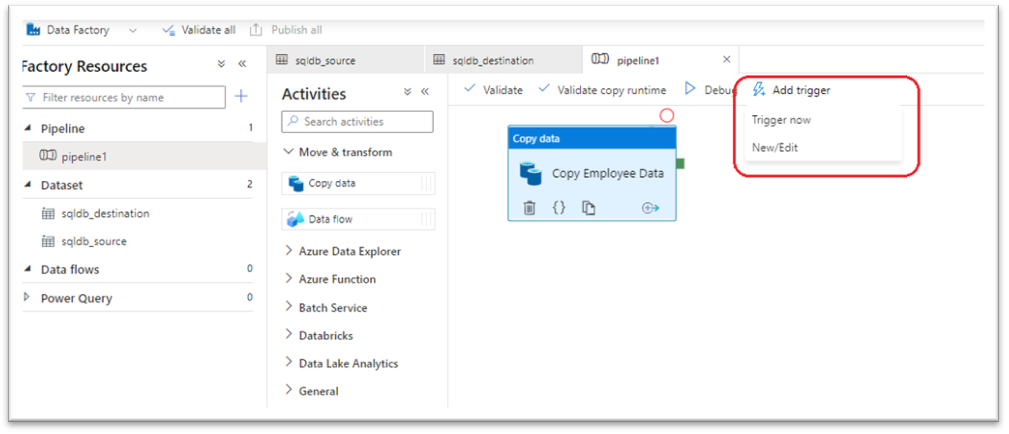

After successfully publishing the changes, we can trigger the pipeline. There are two ways to execute the pipeline: we can either schedule it for the future or trigger it now, as below.

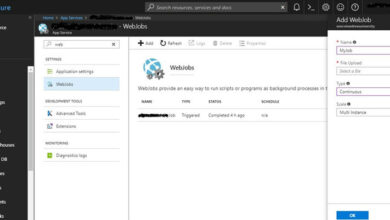

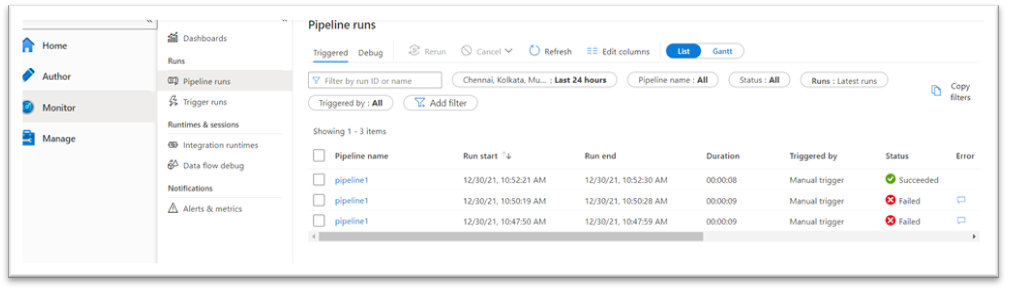
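For the scheduled option, the trigger is saved as its own definition that references the pipeline. The sketch below shows roughly what a daily schedule trigger could look like. The trigger name, pipeline name, and start time are assumptions for illustration.

```python
import json
from datetime import datetime, timezone


def daily_schedule_trigger(name, pipeline_name, start_time_utc):
    """Build a schedule trigger that runs one pipeline once a day."""
    return {
        "name": name,
        "properties": {
            "type": "ScheduleTrigger",
            "typeProperties": {
                "recurrence": {
                    "frequency": "Day",
                    "interval": 1,
                    "startTime": start_time_utc.strftime("%Y-%m-%dT%H:%M:%SZ"),
                    "timeZone": "UTC",
                }
            },
            "pipelines": [
                {
                    "pipelineReference": {
                        "referenceName": pipeline_name,
                        "type": "PipelineReference",
                    }
                }
            ],
        },
    }


# Hypothetical names and start time.
trigger = daily_schedule_trigger(
    "DailyEmployeeCopyTrigger",
    "CopyEmployeePipeline",
    datetime(2030, 1, 1, 6, 0, tzinfo=timezone.utc),
)
print(json.dumps(trigger, indent=2))
```

‘Trigger now’ needs no such definition, because it simply starts a single run on demand.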

We triggered the pipeline, and we can see its progress and status in the Monitor tab.

Monitor (from the left panel) -> Pipeline runs

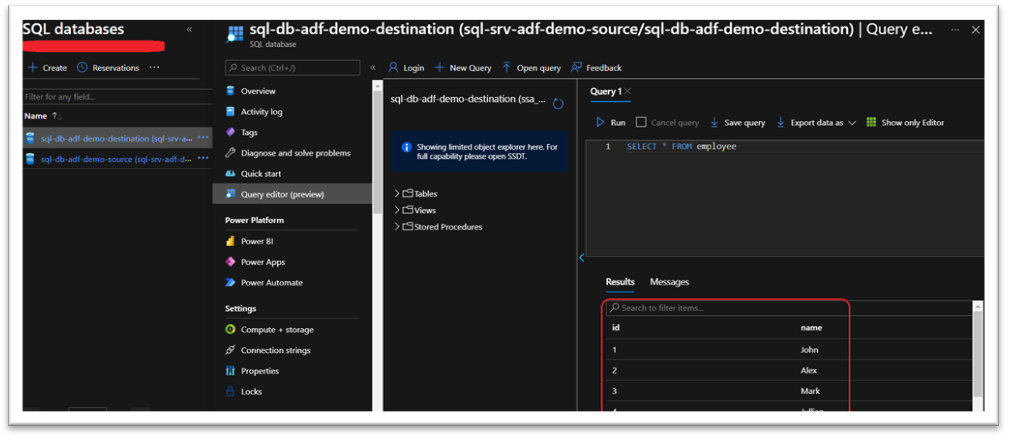
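If you ever want to watch a run from a script instead of the Monitor tab, the usual pattern is to poll the run status until it reaches a final state. The sketch below shows that loop in isolation. The `get_status` callable is a stand-in for however you fetch the status (SDK, REST call, and so on), and the statuses here come from a fake list rather than a real factory.

```python
import time

# Statuses after which a pipeline run will not change any more.
TERMINAL_STATUSES = {"Succeeded", "Failed", "Cancelled"}


def wait_for_run(get_status, poll_seconds=15, max_polls=40, sleep=time.sleep):
    """Poll get_status() until a terminal status appears or max_polls is reached."""
    status = get_status()
    polls = 1
    while status not in TERMINAL_STATUSES and polls < max_polls:
        sleep(poll_seconds)
        status = get_status()
        polls += 1
    return status


# Simulated run: queued, in progress twice, then succeeded.
fake_statuses = iter(["Queued", "InProgress", "InProgress", "Succeeded"])
final_status = wait_for_run(lambda: next(fake_statuses), sleep=lambda seconds: None)
print(final_status)
```

Passing `sleep` as a parameter keeps the example fast to run and easy to test; in real use you would leave it at the default.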

The pipeline executed successfully, and we can see that the employee data has been migrated to the destination SQL database.

Thanks for reading this article. Please share your thoughts and comments.

Happy Coding!