Application Scaling in Azure Kubernetes Service

What we’ll cover:

- Overview of Application Scaling in Azure Kubernetes Service

- Implement the Scaling of the Guestbook Application

- Scale Frontend Components of the Guestbook Application

Prerequisites

Overview of Application Scaling in Azure Kubernetes Service

When you run an application, scaling and upgrading are essential parts of its lifecycle. An application typically consists of a frontend and a backend. Scaling is required to handle additional load on the application, and upgrading is required to keep the application up to date and to introduce new functionality. A main advantage of cloud-native applications is scaling on demand, which lets you optimize the application. For example, if the frontend components encounter heavy load, you can scale the frontend alone and leave the backend instances unchanged. You can also increase or reduce the number or size of virtual machines (VMs) depending on your workload and peak demand hours.

There are two scale dimensions for applications running on top of Azure Kubernetes Service (AKS). The first is the number of Pods a deployment has; the second is the number of nodes in the cluster.

By adding additional Pods to a deployment, also known as scaling out, you add compute power to the deployed application. You can either scale out your applications manually or have Kubernetes take care of this automatically via the Horizontal Pod Autoscaler (HPA). The HPA watches metrics such as CPU to determine whether Pods need to be added to your deployment.
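To make the HPA’s decision more concrete, here is a minimal sketch of the replica calculation given in the Kubernetes documentation: desired replicas = ceil(current replicas × current metric / target metric). The helper name `hpa_desired_replicas` and the sample numbers are assumptions for illustration only, and the real controller also applies a tolerance and the configured minimum and maximum replica counts, which are left out here.

```shell
#!/usr/bin/env bash
# Sketch of the HPA scaling rule: ceil(current_replicas * current_metric / target_metric).
# hpa_desired_replicas is a hypothetical helper, not part of kubectl.
hpa_desired_replicas() {
  local current_replicas=$1 current_metric=$2 target_metric=$3
  # Integer ceiling division: (a + b - 1) / b
  echo $(( (current_replicas * current_metric + target_metric - 1) / target_metric ))
}

# Example: 3 Pods averaging 90% CPU against a 50% target.
hpa_desired_replicas 3 90 50   # prints 6
```

With three Pods running well above the target, the rule asks for six Pods, which brings the average utilization back toward the target.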

The second scale dimension in AKS is the number of nodes in the cluster. The number of nodes in a cluster defines how much CPU and memory are available for all the applications running on that cluster. You can scale your cluster either manually by changing the number of nodes, or you can use the cluster autoscaler to scale out your cluster automatically. The cluster autoscaler watches the cluster for Pods that cannot be scheduled due to resource constraints. If Pods cannot be scheduled, it adds nodes to the cluster to ensure that your applications can run.
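As a rough sketch, both ways of scaling the cluster can be driven from the Azure CLI. The resource group and cluster names below are placeholders (assumptions, not values from this article), the wrapper function names are hypothetical, and the sketch assumes a cluster with a single node pool.

```shell
#!/usr/bin/env bash
# RESOURCE_GROUP and CLUSTER_NAME are placeholders; replace them with your own values.
RESOURCE_GROUP="${RESOURCE_GROUP:-myResourceGroup}"
CLUSTER_NAME="${CLUSTER_NAME:-myAKSCluster}"

# Manually set the number of nodes in the cluster.
scale_cluster_nodes() {
  az aks scale --resource-group "$RESOURCE_GROUP" --name "$CLUSTER_NAME" --node-count "$1"
}

# Enable the cluster autoscaler with a minimum and maximum node count.
enable_cluster_autoscaler() {
  az aks update --resource-group "$RESOURCE_GROUP" --name "$CLUSTER_NAME" \
    --enable-cluster-autoscaler --min-count "$1" --max-count "$2"
}

# Usage (requires a logged-in Azure CLI):
#   scale_cluster_nodes 3
#   enable_cluster_autoscaler 1 5
```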

In this article, you’ll learn how to scale your application. First, you’ll scale your application manually, and in the second part, you’ll scale it automatically.

Implementing the Scaling of the Guestbook Application

To demonstrate manual scaling, let’s use the guestbook example that we used in the previous articles. Follow these steps to learn how to implement manual scaling:

- Open your friendly Cloud Shell, as highlighted

- Clone the GitHub repository with the following commands; all the files are placed there:

- git clone https:

- cd Azure-K8s/Scale-Improve/

- Install the guestbook by running the kubectl create command in the Azure command line:

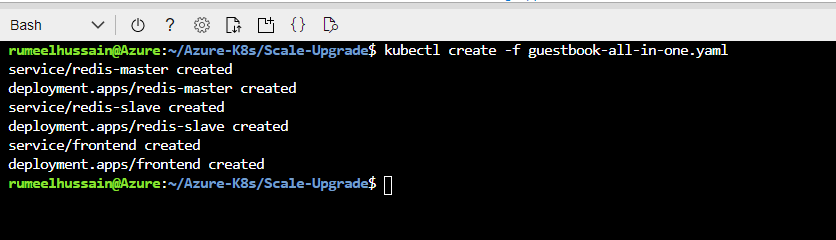

- kubectl create -f guestbook-all-in-one.yaml

- After you have entered the preceding command, you should see something similar to the output shown in the screenshot:

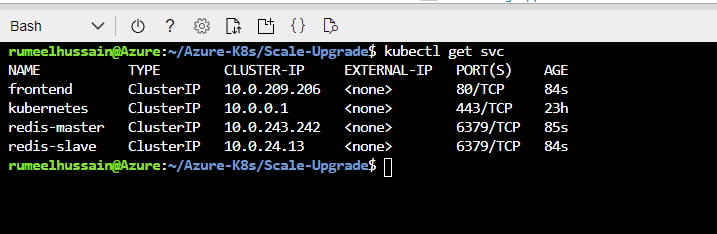

- Right now, none of the services are publicly accessible. We can verify this by running the following command:

- kubectl get svc

- In the screenshot, none of the services have an external IP:

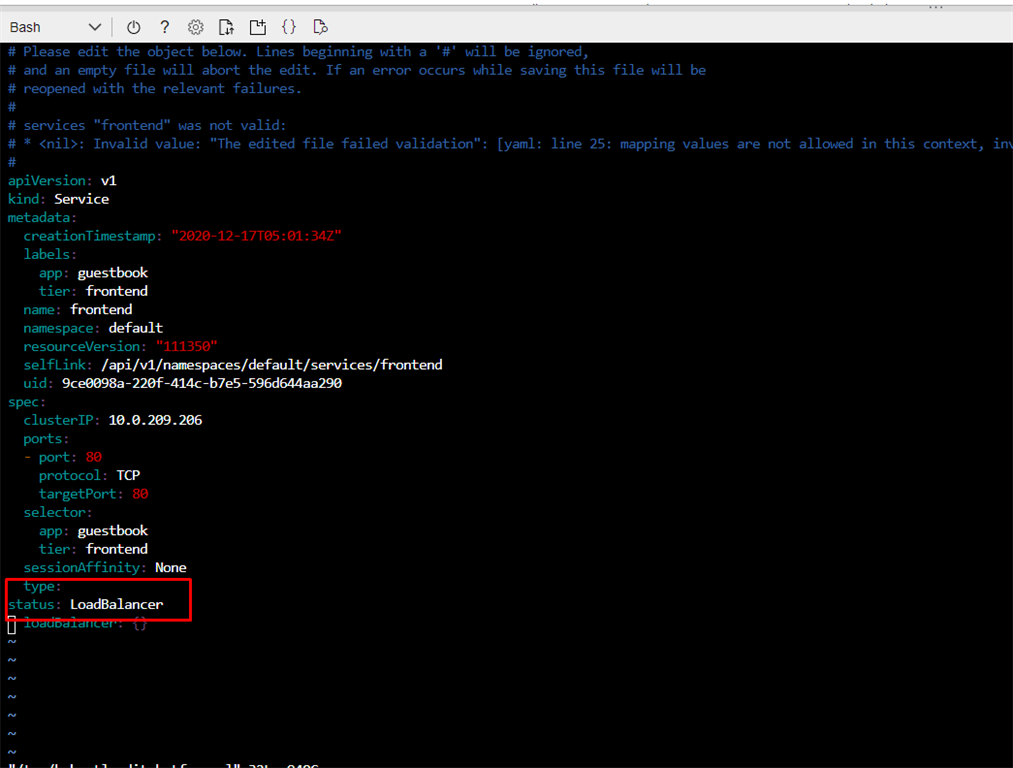

- To test out our application, we’ll expose it publicly. For this, we’ll introduce a new command that lets you edit the service in Kubernetes without having to change the file on your file system. To start the edit, execute the following command:

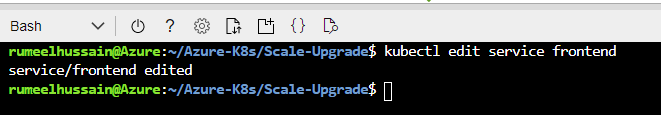

- kubectl edit service frontend

- This will open a vi environment. Navigate to the line that now says type: ClusterIP (line 27) and change it to type: LoadBalancer, as shown in the screenshot below. To make that change, hit the I button, type your changes, hit the Esc button, type :wq!, and then hit Enter to save the changes:

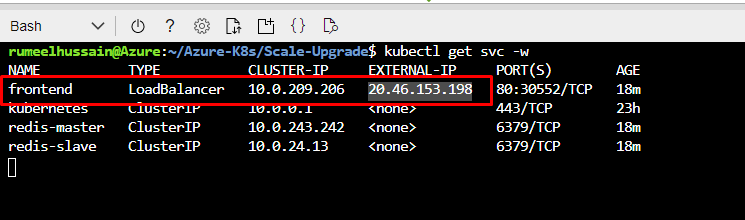

- Once the changes are saved, you can watch the service object until the public IP becomes available. To do this, type the following:

- kubectl get svc -w

- It will take a couple of minutes to show you the updated IP. Once you see the correct public IP, you can exit the watch command by hitting Ctrl + C (Control + C on a Mac as well):

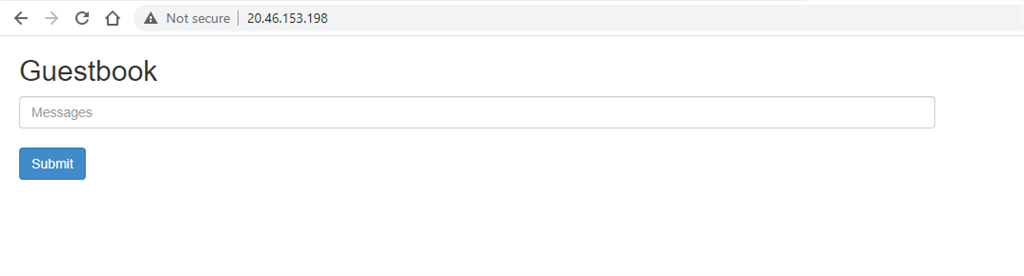

- Type the IP address from the preceding output into your browser navigation bar as follows: http://<EXTERNAL-IP>/. The result of this is shown in the screenshot.

The familiar guestbook sample should be visible. This shows that you have successfully accessed the guestbook publicly. Now that you have the guestbook application deployed, you can start scaling the different components of the application.
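The deployment steps above can also be sketched as a small non-interactive script. This is only one possible approach: it swaps the interactive edit for `kubectl patch` and reads the external IP with a JSONPath query. The function names are hypothetical, and the sketch assumes it is run from the directory that contains guestbook-all-in-one.yaml.

```shell
#!/usr/bin/env bash
# Deploy the guestbook from the manifest used in the steps above.
deploy_guestbook() {
  kubectl create -f guestbook-all-in-one.yaml
}

# Switch the frontend service to type LoadBalancer without opening an editor.
expose_frontend() {
  kubectl patch service frontend -p '{"spec":{"type":"LoadBalancer"}}'
}

# Print the external IP of the frontend service (empty until Azure assigns one).
frontend_external_ip() {
  kubectl get service frontend -o jsonpath='{.status.loadBalancer.ingress[0].ip}'
}

# Usage:
#   deploy_guestbook && expose_frontend
#   frontend_external_ip
```

Patching is convenient in scripts, while the interactive edit is handy when you want to inspect the full service definition before changing it.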

Scale Frontend Components of the Guestbook Application

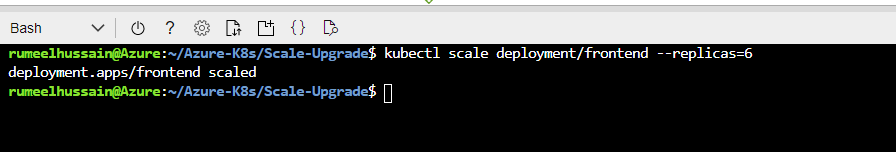

Kubernetes gives us the ability to scale each component of an application dynamically. As mentioned above, the frontend and backend of the application can be scaled independently, which is the beauty of Kubernetes. I’ll show you how to scale the frontend of the guestbook application. The following command causes Kubernetes to add additional Pods to the deployment:

- kubectl scale deployment/frontend --replicas=6

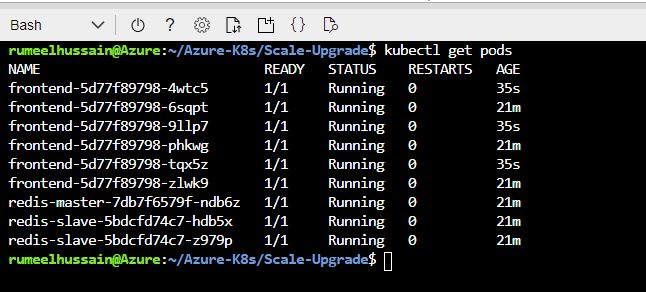

You can set the number of replicas you want, and Kubernetes takes care of the rest. You can even scale it down to zero (one of the tricks used to reload the configuration when the application doesn’t support dynamic reloading of configuration). To verify that the overall scaling worked correctly, you can use the following command:

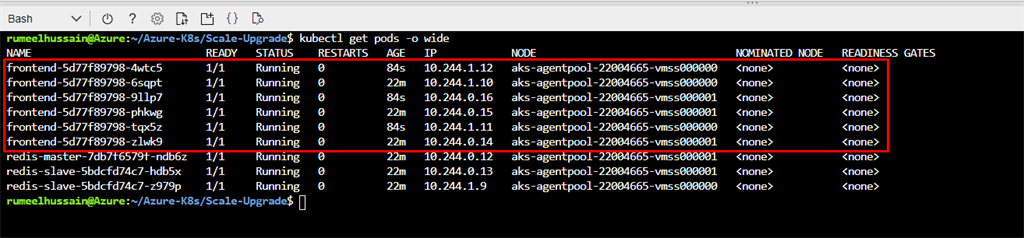

As you can see, the frontend service scaled to six Pods. Kubernetes also spread these Pods across multiple nodes in the cluster. You can see the nodes that the Pods are running on with the following command:

- kubectl get pods -o wide

This will generate output as follows:

In this part, you have seen how easy it is to scale Pods with Kubernetes. This capability provides a very powerful tool for you to not only dynamically adjust your application components but also provide resilient applications with failover capabilities enabled by running multiple instances of components at the same time. However, you won’t always want to scale your application manually. Let’s move on to the second part, in which you’ll learn how to scale your application automatically.
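Before moving on, the manual scaling flow from this part can be collected into a short sketch. The function names are hypothetical, and using `kubectl rollout status` to wait for the new replicas is one possible way to verify the result, not necessarily the check shown in the screenshots.

```shell
#!/usr/bin/env bash
# Scale the frontend deployment to the requested number of replicas, then
# wait until the deployment reports that all replicas are available.
scale_frontend() {
  kubectl scale deployment/frontend --replicas="$1" &&
    kubectl rollout status deployment/frontend
}

# Show which node each Pod is scheduled on.
show_pod_placement() {
  kubectl get pods -o wide
}

# Usage:
#   scale_frontend 6
#   show_pod_placement
```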